This time of year always brings pause for reflection. But the end of this year is special in that we're not just bidding farewell to the last 365 days but to the last 3,650! The last decade has brought tremendous change for CHM and our blog has documented it all.

Since 2009, we've celebrated milestone anniversaries, like Moore's Law at 50 in 2015 and the 40th and 50th anniversaries of the first ARPANET connection—the predecessor to today's internet—in 2009 and 2019 respectively. We've explored and contemplated questions, like "Who Named Silicon Valley?" "Who Invented the Transistor?" and even "Why a Computer Museum?"

We've remembered the legends we lost, including Apple's Steve Jobs in 2011 and Intel's Andy Grove in 2016. We've shared collection highlights and important acquisitions, like Community Memory records from Lee Felsenstein in 2016, the Fairchild Notebooks in 2013, and the scanning of 18,000 pages of SRI’s Augmentation Research Center (ARC) journal in 2013.

We’ve welcomed special visitors, like IBM's Watson to Revolution: The First 2000 Years of Computing in 2013, and we’ve traveled to Kenya, Japan, and let’s not forget Hollywood, where CHM’s ENIAC graced the pages of Time magazine with The Imitation Game star Benedict Cumberbatch. We’ve ushered in new exhibits, welcomed guest bloggers, welcomed our CEO Dan’l Lewin, shared insights from our own CHM Live programs, and expanded our breadth of topics as we continue to develop our own voice.

What better way to commemorate our journey these last 10 years than with a look back at our 10 most-read blogs? Beginning with our earliest, enjoy the best of CHM’s blog this decade.

The IBM 1401 restoration project began in 2004 when CHM acquired two complete IBM 1401 systems from Germany and the United States. A team of 20 Museum volunteers, mostly retired IBM engineers, restored the machines over 10 years, putting in 20,000 hours across 500 work sessions.

I often hear people use the terms preservation, conservation, and restoration interchangeably. In everyday use, that’s fine. However, these terms actually mean something quite different inside the walls of a museum. Here’s the insider perspective, examples for understanding the differences, and even some ways that visitors can help.

When brothers Thomas and John Knoll began designing and writing an image editing program in the late 1980s, they could not have imagined that they would be adding a word to the dictionary.

The original IBM PC. © Mark Richards/Computer History Museum.

Although IBM had prodigious internal software development resources, for the new PC they supported only operating systems that they did not themselves write, like CP/M-86 from Digital Research in Pacific Grove CA, and the Pascal-based P-System from the University of California in San Diego. But their favored OS was the newly-written PC DOS, commissioned by IBM from the five-year-old Seattle-based software company Microsoft.

When Microsoft signed the contract with IBM in November 1980, they had no such operating system. They too outsourced it, by first licensing then purchasing an operating system from Seattle Computer Products variously called QDOS (“Quick and Dirty Operating System”) and 86-DOS.

Flying Carpet, by Viktor M. Vasnetsov, 1880. By 130 BC, a magic carpet supposedly flew King Phraates II of Parthia to battle. Flying carpets have graced folktales from Russia to Iraq. They combine two once-fantastic dreams: autonomous vehicles, and flight. Credit: Wikimedia Commons

When Robert Whitehead invented the self-propelled torpedo in the 1860s, the early guidance system for maintaining depth was so new and essential he called it “The Secret.” Airplanes got autopilots just a decade after the Wright brothers. Today, sailboats have auto-tillers. Semi-autonomous military drones kill from the air, and robot vacuum cleaners confuse our pets.

Yet one deceptively modest dream has rarely ventured beyond the pages of science fiction since our grandparents’ youth: the self-driving family car.

The NeXT Cube was a masterpiece of engineering… but too expensive. NeXT evolved into a software company after the Cube and several other NeXT hardware products failed in the marketplace. The company’s greatest innovation was the NeXTSTEP operating environment, including its rapid prototyping tools. CHM# 102626734

Since 2008, over a hundred billion apps have been downloaded from Apple’s App Store onto users’ iPhones or iPads. Thousands of software developers have written these apps for Apple’s “iOS” mobile platform. However, the technology and tools powering the mobile “app revolution” are not themselves new, but rather have a long history spanning over thirty years, one which connects back to not only NeXT, the company Steve Jobs started in 1985, but to the beginnings of software engineering and object-oriented programming in the late 1960s.

Dorothy and Gary, ca 1978.

In 1993, the year before his untimely death, Gary wrote a draft of a memoir titled Computer Connections: People, Places, and Events in the Evolution of the Personal Computer Industry. He distributed bound copies to family and friends, with a note that it “will go to print in final form early next year.” It never did. The ownership of that manuscript passed to Gary’s children, Scott and Kristin. With their permission, we are pleased to make available the first portion of that memoir, along with their introduction to it and previously unpublished family photos.

“Our Brain’s Development in a Technological World: Neuroscientist Adam Gazzaley, Professor Mary Helen Immordino-Yang & Research Psychologist Larry Rosen in Conversation with Lisa Krieger of the San Jose Mercury News,” CHM Live, February 15, 2018.

“We live in really extraordinary times. We’re witnessing an explosion in the diversity and the accessibility of these amazing computers that we carry in our pockets and have on our desks,” said Lisa Krieger of the San Jose Mercury News. The CHM Live event, “Our Brain’s Development in a Technological World,” held at CHM on February 15, consisted of a panel discussion about how technology affects our brains and learning, with a focus on its impact on youth.

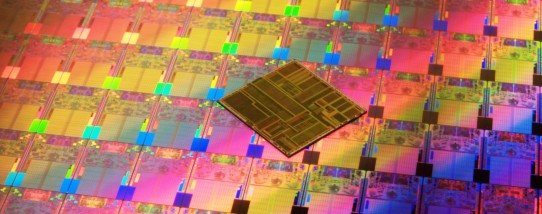

Intel Pentium microprocessor die and wafer.

The microprocessor is hailed as one of the most significant engineering milestones of all time. The lack of a generally agreed definition of the term has supported many claims to be the inventor of the microprocessor. The original use of the word “microprocessor” described a computer that employed a microprogrammed architecture—a technique first described by Maurice Wilkes in 1951. Viatron Computer Systems used the term microprocessor to describe its compact System 21 machines for small business use in 1968. Its modern usage is an abbreviation of micro-processing unit (MPU), the silicon device in a computer that performs all the essential logical operations of a computing system. Popular media stories call it a “computer-on-a-chip.”

Eventually many email clients were written for personal computers, but few became as successful as Eudora. Available both for the IBM PC and the Apple Macintosh, in its heyday Eudora had tens of millions of happy users. Eudora was elegant, fast, feature-rich, and could cope with mail repositories containing hundreds of thousands of messages. In my opinion it was the finest email client ever written, and it has yet to be surpassed.

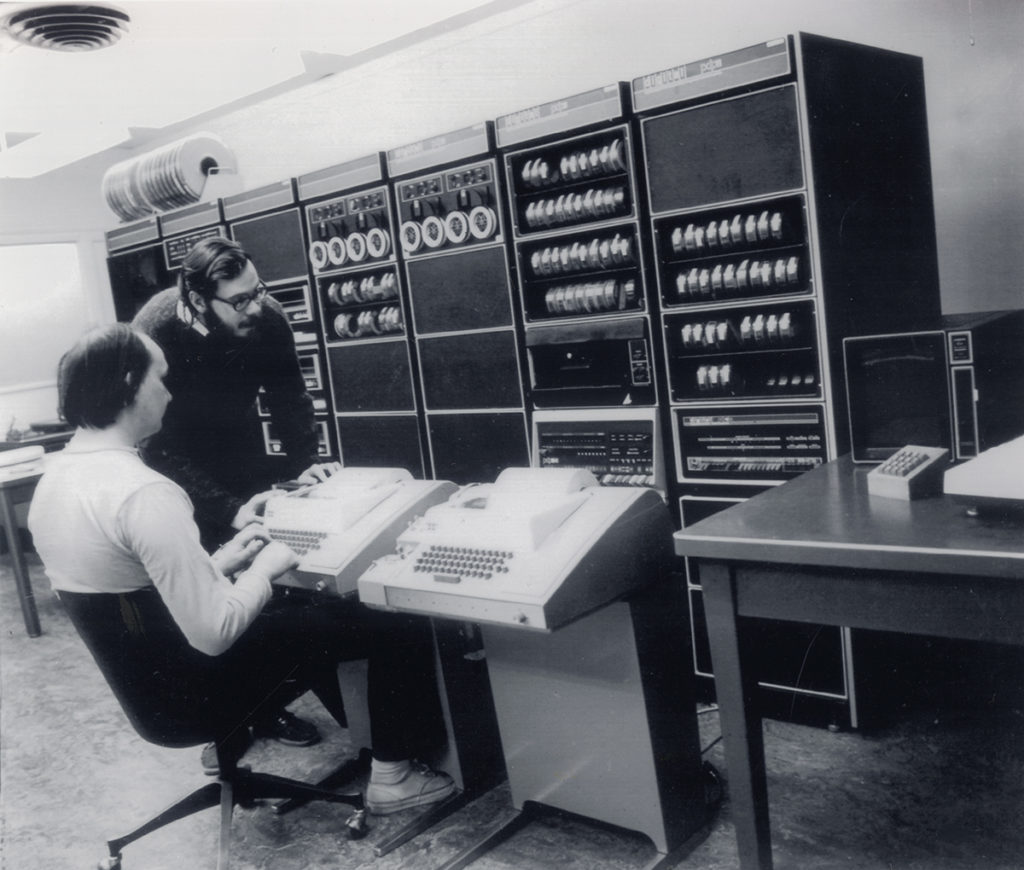

Ken Thompson (seated) and Dennis Ritchie (standing) with the DEC PDP-11 to which they migrated the Unix effort in 1971. Collection of the Computer History Museum, 102685442.

What is it that runs the servers that hold our online world, be it the web or the cloud? What enables the mobile apps that are at the center of increasingly on-demand lives in the developed world and of mobile banking and messaging in the developing world? The answer is the operating system Unix and its many descendants: Linux, Android, BSD Unix, MacOS, iOS—the list goes on and on. 2019 marks the 50th anniversary of the start of Unix. In the summer of 1969, that same summer that saw humankind’s first steps on the surface of the Moon, computer scientists at the Bell Telephone Laboratories—most centrally Ken Thompson and Dennis Ritchie—began the construction of a new operating system, using a then-aging DEC PDP-7 computer at the labs.