In partnership with Google, CHM has released the source code to AlexNet, the neural network that in 2012 kick-started today’s prevailing approach to AI. It is available as open source here.

AlexNet is an artificial neural network created to recognize the contents of photographic images. It was developed in 2012 by then University of Toronto graduate students Alex Krizhevsky and Ilya Sutskever and their faculty advisor Geoffrey Hinton.

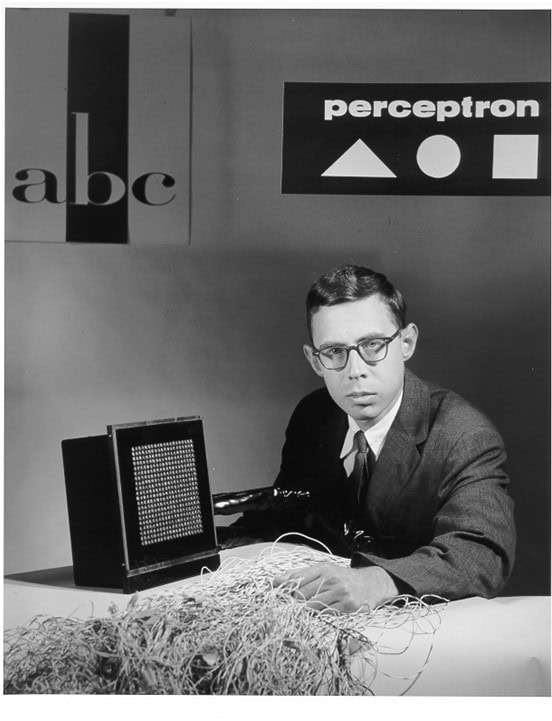

Geoffrey Hinton is regarded as one of the fathers of “deep learning,” the type of artificial intelligence that uses neural networks and is the foundation of today’s mainstream AI. Simple three-layer neural networks with only one layer of adaptive weights were first built in the late 1950s, most notably by Cornell researcher Frank Rosenblatt, but they were found to have limitations. Networks with more than one layer of adaptive weights were needed, but there wasn’t a good way to train them. By the early 1970s, neural networks had been largely rejected by AI researchers.

Cornell University psychologist Frank Rosenblatt developed the Perceptron Mark I, an electronic neural network designed to recognize images—like letters of the alphabet. He introduced it to the public in 1958. Credit: Cornell Aeronautical Laboratory/Calspan.

In the 1980s, neural network research was revived outside the AI community by cognitive scientists at UC San Diego, under the new name of “connectionism.” After finishing his PhD, Hinton became a postdoctoral fellow at San Diego and collaborated with David Rumelhart and Ronald Williams. They rediscovered the backpropagation algorithm for training multilayer neural networks and in 1986 they published two papers showing that it enabled neural networks to learn multiple layers of features for language and vision tasks. Backpropagation uses the difference between the current output and the desired output of the network to adjust the weights in each layer from the output layer backwards to the input layer. More detail on how neural networks work can be found here. “Backpropagation” is foundational to deep learning today.

In 1987, Hinton joined the University of Toronto. Away from the centers of traditional AI, Hinton’s work and those of his graduate students in the coming decades made Toronto a center of deep learning research. A postdoctoral student of Hinton’s at this time was Yann LeCun. While working in Toronto he showed that when backpropagation was used in “convolutional” neural networks they became very good at recognizing hand-written numbers.

Despite these advances, neural networks could not outcompete other types of machine learning algorithms consistently. They needed two developments from outside of AI to pave the way. The first was the emergence of vastly larger amounts of data for training, made available by the web. The second was enough computational power for this training, in the form of GPU hardware. By 2012, the time was ripe for AlexNet.

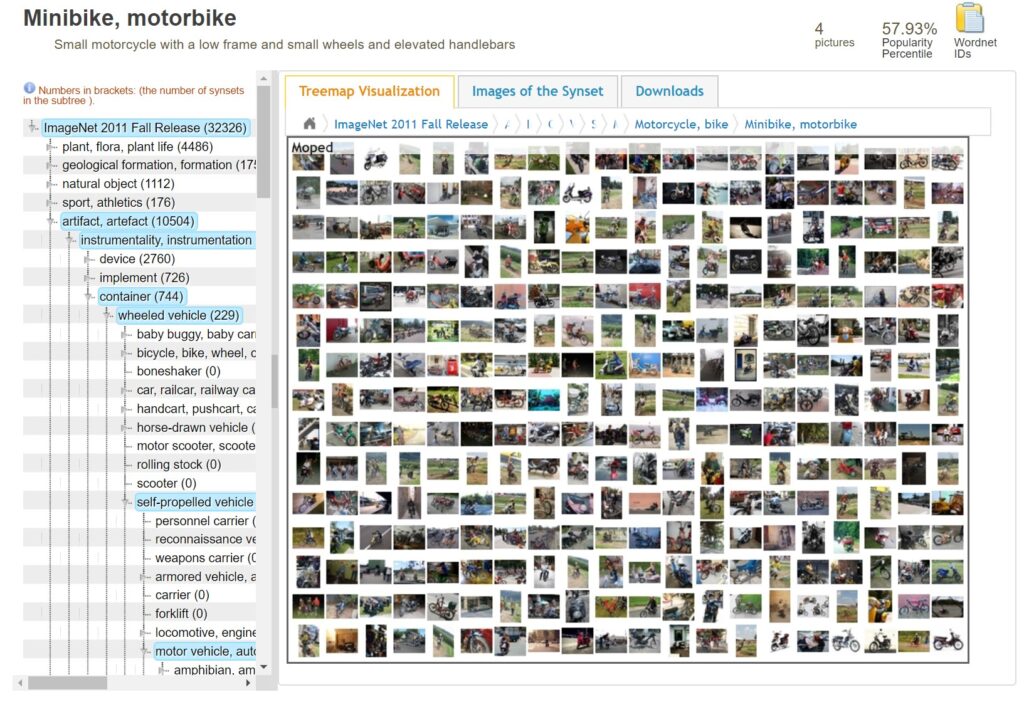

The vast amount of carefully curated data needed to train AlexNet was ImageNet, a project started and led by Stanford professor Fei-Fei Li. Beginning in 2006, and against conventional wisdom, she envisioned a dataset of images covering every noun in the English language. She and her graduate students began by collecting images found on the internet and classifying them using a taxonomy provided by WordNet, a database of words and their relationships to each other. Given the enormity of their task, Li and her collaborators ultimately crowdsourced the task of labeling images to gig workers using Amazon’s Mechanical Turk platform.

Fei-Fei Li discusses her book, The Worlds I See, on stage with Tom Kalil at CHM September 17, 2024. Photograph by Douglas Fairbairn, Computer History Museum.

Screenshot of the ImageNet database taken by the author in 2020.

Completed in 2009, ImageNet was larger than any previous image dataset by several orders of magnitude. Li hoped its availability would spur new breakthroughs, and she started a competition in 2010 to encourage research teams to improve their image recognition algorithms. However, after the first two years, the best systems were only making marginal improvements.

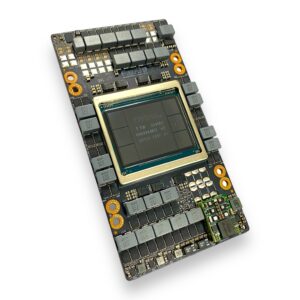

The second condition necessary for the success of neural networks was economical access to vast computation. Neural network training involves a lot of repeated matrix multiplications, preferably done in parallel, something that 3D graphics chips (known as GPUs) are designed to do. NVIDIA, headed by cofounder and CEO Jensen Huang, had led the way in the 2000s in making GPUs more generalizable and programmable for applications beyond 3D graphics, especially with the CUDA programming system, released in 2007.

NVIDIA cofounder and CEO Jensen Huang received a 2024 CHM Fellow Award for his contributions to the making of chips for computer graphics and AI. Photograph by Douglas Fairbairn, Computer History Museum.

The NVIDIA H100 GPU chip is in high demand for today’s AI work, training the large language models behind ChatGPT and other chatbots. Gift of NVIDIA Corporation, 102801460.

Both ImageNet and NVIDIA’s CUDA were, like neural networks themselves, fairly niche developments that were waiting for the right circumstances to shine. In 2012, AlexNet brought these elements (deep neural networks, big datasets, and GPU compute) together for the first time, with pathbreaking results. Each of these needed the other.

By the late 2000s, Hinton’s graduate students at the University of Toronto were beginning to use GPUs to train neural networks for both image and speech recognition tasks. Their first successes came in speech recognition, but success in image recognition would point to deep learning as possibly a general-purpose solution to AI. One student, Ilya Sutskever, believed that the performance of neural networks would scale with the amount of data available, and the arrival of ImageNet provided the opportunity.

Alex Krizhevsky in 2018. Courtesy of Alex Krizhevsky.

A milestone occurred in 2011, when DanNet, a convolutional neural network trained on GPUs created by Dan Cireşan and others at Jürgen Schmidhuber’s lab in Switzerland, won four separate image recognition contests. However, these results were on smaller datasets and were not able to move the field of computer vision. ImageNet, which was much larger and more comprehensive, was different. That same year, Sutskever convinced fellow Toronto graduate student Alex Krizhevsky, who had a keen ability to wring maximum performance out of GPUs, to train a convolutional neural network for ImageNet, with Hinton serving as principal investigator. Krizhevsky had already written CUDA code for a convolutional neural network using NVIDIA GPUs, called “cuda-convnet,” trained on the much smaller CIFAR-10 image dataset. He extended cuda-convnet with support for multiple GPUs and other features and retrained it on ImageNet. The training was done on a computer with two NVIDIA cards in Krizhevsky’s bedroom at his parents’ house. Over the course of the next year, Krizhevsky constantly tweaked the network’s parameters and retrained it until it achieved performance superior to its competitors. The network would ultimately be named AlexNet, after Krizhevsky. In describing the AlexNet project, Geoff Hinton summarized for CHM: “Ilya thought we should do it, Alex made it work, and I got the Nobel Prize.”

This is a rare photograph of the home computer with GPUs used to create AlexNet. Credit: University of Toronto.

Krizhevsky, Sutskever, and Hinton’s paper was published in the fall of 2012 and publicly presented by Krizhevsky at a computer vision conference in Florence, Italy, in October . Veteran computer vision researchers weren’t convinced, but Yann LeCun, who was at the meeting, pronounced it a turning point for AI. He was right. Before AlexNet, almost none of the leading computer vision papers used neural nets. After it, almost all of them would.

Krizhevsky, Sutskever, and Hinton’s seminal 2012 paper on AlexNet has been cited over 172,000 times, according to Google Scholar. The paper is freely available here.

AlexNet was just the beginning. In the next decade, neural networks would advance to synthesize believable human voices, beat champion Go players, model human language, and generate artwork, culminating with the release of ChatGPT in 2022 by OpenAI, a company cofounded by Sutskever.

In 2020, I reached out to Alex Krizhevsky to ask about the possibility of allowing CHM to release the AlexNet source code, due to its historical significance. He connected me to Geoff Hinton, who was working at Google at the time. As Google had acquired Hinton, Sutskever, and Krizhevsky’s company DNNresearch, they now owned the AlexNet IP. Hinton got the ball rolling by connecting us to the right team at Google. CHM worked with them for five years in a group effort to negotiate the release. This team also helped us identify which specific version of the AlexNet source code to release. In fact, there have been many versions of AlexNet over the years. There are also repositories of code named “AlexNet” on GitHub, though many of these are not the original code, but recreations based on the famous paper.

CHM is proud to present the source code to the 2012 version of Alex Krizhevsky, Ilya Sutskever, and Geoffery Hinton’s AlexNet, which transformed the field of artificial intelligence. Access the source code on CHM’s GitHub page.

Li, Fei-Fei. The Worlds I See: Curiosity, Exploration, and Discovery at the Dawn of AI. First edition. New York, NY: Moment of Lift Books; Flatiron Books, 2023.

Metz, Cade. Genius Makers: The Mavericks Who Brought AI to Google, Facebook, and the World. First edition. New York, NY: Dutton, an imprint of Penguin Random House LLC, 2022.

Special thanks to Geoffrey Hinton for providing his quotation and reviewing the text, to Cade Metz and Alex Krizhevsky for additional clarifications, and to David Bieber and the rest of the team at Google for their work in securing the release.

Chatbots Decoded: Exploring AI online exhibit

AI and Play, Part 1: How Games Have Driven Two Schools of AI Research

AI and Play, Part 2: Go and Deep Learning

How Do Neural Network Systems Work?

From Alto to AI (includes Ilya Sutskever)

2024 Fellow Awards (includes Jensen Huang)

CHM Live: Fei-Fei Li’s Journey

CHM Live: Ilya Sutskever and Eric Horvitz

2024 Fellow Awards Ceremony: Jensen Huang

Lecture by Geoffrey Hinton on neural networks, 1990

Patch panels from experimental 1960s Cornell neural network, likely the Mark I Perceptron

Analog memory plane used for the hidden layer of the Tobermory Perceptron, Cornell, 1960s

Special source code releases like these would not be possible without the generous support of people like you who care deeply about decoding technology for everyone. Please consider making a donation.