The machines took over most tasks long ago. We wake to an electrical alarm, sip machine-made coffee from a machine-washed cup and sit on a flush toilet whose hydraulic principles drove precision clocks in ancient Alexandria. Our breakfast cereal is harvested by semi-autonomous combines and processed in factories perfected over 300 years of industrial automation. At a word from us, the terminals in our pockets can show us almost anything we want to know. But we hardly notice any more. We wonder–and worry–about the ever-shrinking pool of tasks machines can't do well. "Does AI understand emotions?" "Can a car really drive itself?" "Will robots care for me when I'm old?"

Conservationists have a term for getting used to things over time: shifting baselines. The young fisherwoman doesn't realize how thin her catch is compared with the glistening bounty of times past. It's all she knows. Changes that happen slowly enough become the new normal.

That's why we don't see the absences. Only the oldest among us remember the legions of servants, service people, and specialists who used to run a merely semi-automated world by hand. Where are the laundresses, the meter readers, the fire stokers, the telephone operators, the punched card operators, the bus conductors, and the legions of factory welders? The secretarial pool?

Kalorik toaster, 1930. You had to switch many early models off yourself or the toast would burn! The first fully automatic toaster came in 1925, but manual models remained common for some years. While labor saving devices transformed homes in the early 20th century, automated controls were rarer than today. Central heating commonly required manual control, as did early washing machines. Courtesy Wikimedia Commons.

Of course, some of you may say most automation in the past was largely about force. That like the apocryphal John Henry's steam drill it replaced brawn, not brains. Weren't earlier innovations extensions of the strength in our hands, while only in this digital age have machines gotten smart enough to extend our minds?

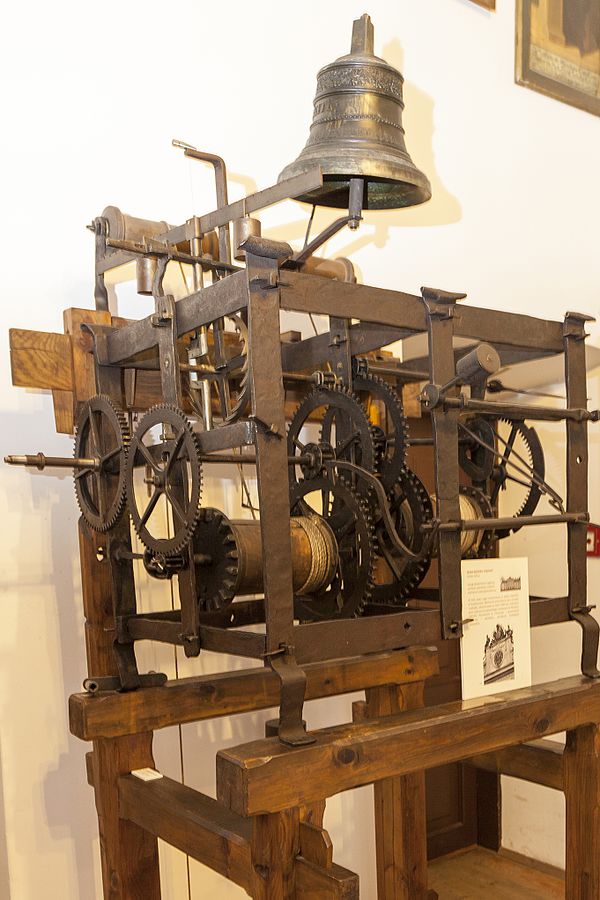

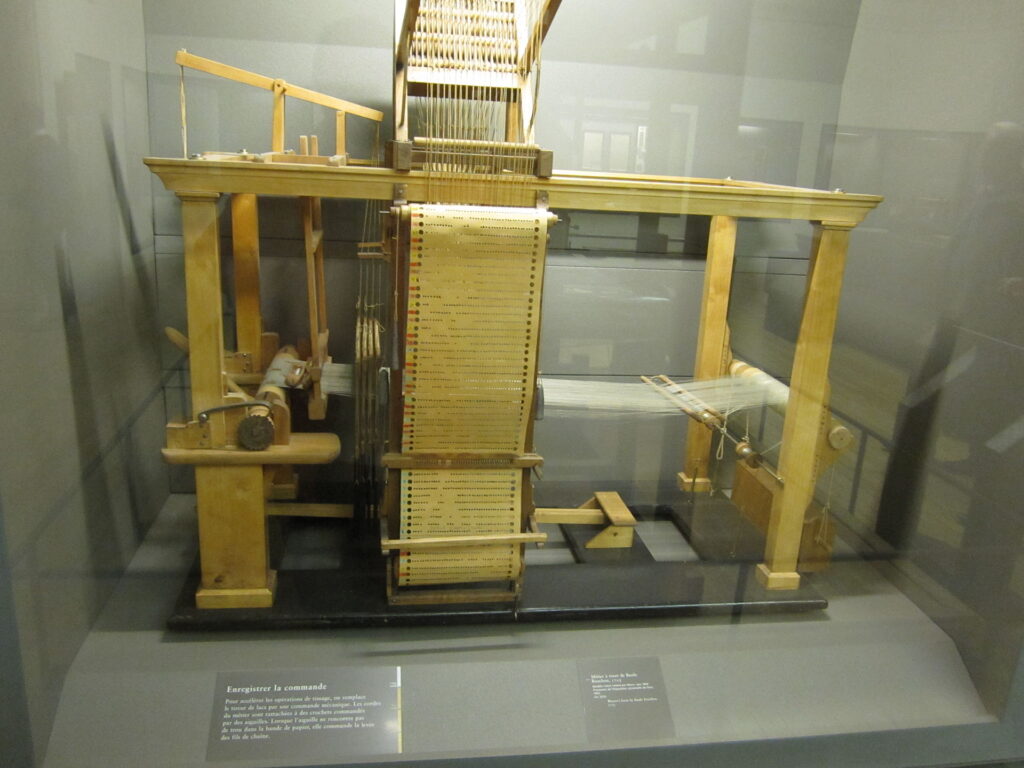

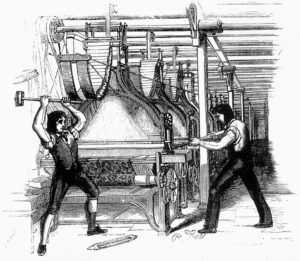

Not entirely. The first job lost to automation may have been the rather refined one of bell ringer, in the 11th century. That's when clockwork began to play melodies on church bells like a giant music box and spread the original machine-readable medium–pegs on a cylinder–along the way. The 18th century looms automated by Basile Bouchon and his successors Vaucanson and Jacquard replaced the intricate skills of master weavers, not the muscled legs of porters.

Clockwork with carillon, Poland. Some of the earliest clockwork mechanisms powered carillons, or bell ringers for churches. The binary pegged cylinders that acted as "programs" had been used to control elaborate water clocks since at least 8th-century Baghdad. They evolved into punched cards and tape, and eventually into modern hard disks and flash memory. Courtesy Wikimedia Commons.

In fact there's no bright line between machines designed to do physical work and ones whose only output is information. The same kinds of gears and ratchets that pulp paper in a watermill can operate a clock, an ancient south-pointing chariot, or a mechanical calculator. Transfer goes both ways. Electronics was developed to handle pure information, the telegraph. Only decades later was it co-opted to drive light bulbs and motors. The telegraph's switches went on to control giant dynamos, and later returned to the information side as the basis for the digital computer.

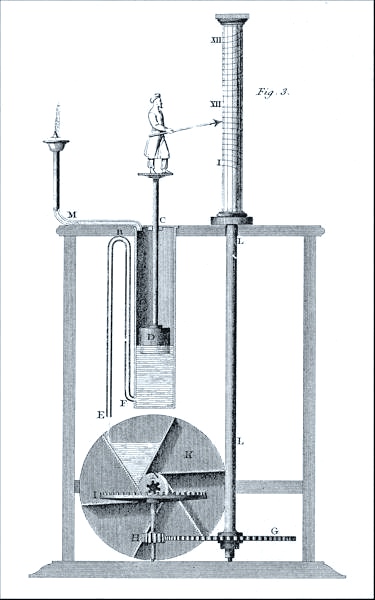

Early 19th-century illustration of the improved water clock (clepsydra) by Ctesibius in the 3rd century BCE. He was likely the first head of the Musaeum of Alexandria. His design incorporated a float valve similar to that in a modern toilet, often considered the first feedback mechanism. His clock could adjust time units to changing seasons. Water clocks were also used in ancient China and Mesopotamia, and some incorporated lifelike automatons. Sophisticated models were the atomic clocks of their day, used for timing scientific experiments.
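To make the feedback idea concrete, here is a minimal sketch of a float valve holding a tank at a steady level. It is a toy model, not a reconstruction of Ctesibius's clock: the function name, the numbers, and the simplifying assumption of a constant outflow are all invented for illustration.

```python
def simulate_float_valve(set_level=10.0, start_level=4.0,
                         max_inflow=2.0, outflow=0.5, steps=50):
    """Toy float valve: the lower the water, the wider the valve opens.

    All parameters are hypothetical. Outflow is held constant for
    simplicity; in a real tank it would vary with the water level.
    """
    level = start_level
    history = []
    for _ in range(steps):
        # The float "measures" how far the water sits below the set point.
        error = set_level - level
        # The valve opens in proportion to that gap, within physical limits.
        inflow = min(max_inflow, max(0.0, error))
        level += inflow - outflow
        history.append(level)
    return history


levels = simulate_float_valve()
print(levels[:6])
```

Whatever level the tank starts at, the loop settles where inflow balances outflow, with no person watching the water. That self-correction is what the caption means by feedback.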

Modern artificial intelligence (AI) suffers from an identity crisis. On the one hand, it is heir to a proud tradition dating back to the "automatos," or self-moving machines, of the ancient world. Those clever devices–water clocks, geared calendars, moving human and animal figures (automata), and Chinese mechanical "compasses"–kicked off one of the few new classes of technology since fire or textiles.1 For the first time artifacts could do lifelike things on their own, without a person guiding each step as with conventional tools. Automation eventually spread out to transform nearly every aspect of human life. In recent decades that transformation has been pushed into overdrive by the digital computer.

But to practitioners even modern AI's greatest successes can feel like abject failures–because the field started with such great expectations.

Grandiose dreams are an occupational hazard of imitating life with machines. From ancient hydraulics, to clockwork, to motors, to electricity, all the successive technologies used in automation made people wonder if they had stumbled upon analogs to how we ourselves function, a crude but working model of life itself. This inspired engineers and philosophers alike to grapple with fundamental questions: What is intelligence? Perception? Memory?

Unlike their predecessors, many AI pioneers took such wild, middle-of-the-night thoughts as an explicit goal: to make machines as smart as people. Today that goal remains science fiction. But as we'll see, it seemed far more reasonable at the field's start. That's because those pioneers had a triumphant example to follow, and to live up to: The electronic computer.

Basile Bouchon's semi-automated loom of 1725 used a wide punched paper tape to store machine-readable "programs" for different patterns. Vaucanson and Jacquard refined the design into a successful commercial product. Jacquard replaced the fragile tape with durable punched cards sewn together. Both cards and tape remained common for "smart" machines – industrial equipment, telegraph senders, punched card tabulators, and ultimately computers – into the 1970s. Charles Babbage specified Jacquard-style cards for his proposed Analytical Engine. Musée des Arts et Métiers, Paris.

Occasionally, people make simple technology do very smart things. For instance, Gutenberg's famous invention was a modified wine press, operated completely by hand.2 Yet the printing press replaced a team of highly trained scribes doing painstakingly slow, erudite work, and effectively ended that 4,500-year-old profession. It shows that machines don't always need to do life-like things in life-like ways to be effective. In the words of AI giant John McCarthy, "airplanes fly just fine without flapping their wings."

The story of computing itself is how certain "high" intellectual functions turned out to be far easier to simulate with simple machines than most had dared imagine. Claude Shannon had the epiphany that simple on/off switches, connected together, could implement Boolean algebra and thus serious chunks of math and logic. These had long been considered pinnacles of human intellect. Further, as Alan Turing showed, a machine like that could in theory emulate the function of nearly any other calculating machine.
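Shannon's insight can be sketched in a few lines. In the illustrative Python below, two switches in series behave like AND, two in parallel like OR, and a normally-closed contact like NOT; wiring those together yields a circuit that adds binary numbers. The function names and the 4-bit width are assumptions made for this sketch, not taken from Shannon's own work.

```python
def series(a: bool, b: bool) -> bool:
    """Two switches in series: current flows only if both are closed (AND)."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two switches in parallel: current flows if either is closed (OR)."""
    return a or b


def inverted(a: bool) -> bool:
    """A normally-closed contact: current flows when the switch is off (NOT)."""
    return not a


def half_adder(a: bool, b: bool):
    """Add two bits, returning (sum, carry), using only the circuits above."""
    # Sum is exclusive-or: (a OR b) AND NOT (a AND b).
    total = series(parallel(a, b), inverted(series(a, b)))
    return total, series(a, b)


def full_adder(a: bool, b: bool, carry_in: bool):
    """Chain two half adders to add two bits plus an incoming carry."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, parallel(c1, c2)


def add_bits(x: int, y: int, width: int = 4):
    """Add two small integers bit by bit; returns (result, carry_out)."""
    carry = False
    result = 0
    for i in range(width):
        bit, carry = full_adder(bool((x >> i) & 1), bool((y >> i) & 1), carry)
        result |= int(bit) << i
    return result, carry


print(add_bits(5, 6))
```

Nothing in the adder "knows" arithmetic. It is on/off switches all the way down, which is why the result struck contemporaries as so startling.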

These were the ingredients of the electronic computer, a kind of ultimate shortcut in the tradition of the printing press, or those airplanes with their rigid wings. Over just a decade, a huge swathe of human-only talents would fall to a device made of little more than dumb switches. It was stunning proof of the promise of machine "intelligence."

In the 1950s researchers began to plot the next leaps forward, toward "thinking automata" or–the name that stuck–"artificial intelligence." The electronic computer had already conquered the mathematical summits. AI pioneers hoped to soon master mere animal processes like vision, grasping, walking, and navigating around obstacles. And then on to an ultimate prize: Simulating the high-level functions that tie everything together into human-like intellect. Some optimists expected that to take as little as 20 years.

Sixty-five years after AI's start, we're awed when AI that runs on a net of zillion-MIP datacenters and whose training required the electricity consumption of Palm Springs can recognize a kitten. Or when a self-driving car manages an hour without human assistance, having gone through 10,000 simulated crashes to approach the level of proficiency a human beginner reaches in 15 crash-free hours.

Meanwhile, the original math and logic strengths of the digital computer pushed more pedestrian automation into overdrive. The machines took over data processing, scientific data crunching, simulations of all kinds, mapping, machining, and stock trading; revolutionized all human communication, and more–while radically reshaping billions of jobs.

Spot robot loading a dishwasher. Best known for its terrifying military robot prototypes, Boston Dynamics is also hoping to attack domestic chores. Boston Dynamics

For instance, computing helped quietly reshape manufacturing in the 1960s and 1970s. A whole class of factory jobs around control, like measuring the temperature of molten steel or the pH of chemical processes, had been going away since the 1950s. They were absorbed by a new generation of continuously monitoring sensors and process control systems. Initially these were connected to analog computers and electromechanical servo mechanisms. But by the 1970s most were mediated by modern digital electronic computers, greatly reducing the need for humans in the loop.

It was not yet the single-employee factory projected by French automation pioneer Vaucanson in a 1745 article.3 But such "lights-out" factories have now been built.

Conjectural model of a south-pointing chariot, London Science Museum. Clever gearing made sure that however you moved the chariot, the little man would point south. Such chariots were built in China by the 3rd century.

Nearly all computing meets older definitions of AI, like the McCarthy quote above or this one by Ray Kurzweil: "...machines that perform functions that require intelligence when performed by people." Exhibit A was the human "computers" made jobless by the arrival of the electronic version. A lot of automation meets that definition too, though for a far narrower range of functions.

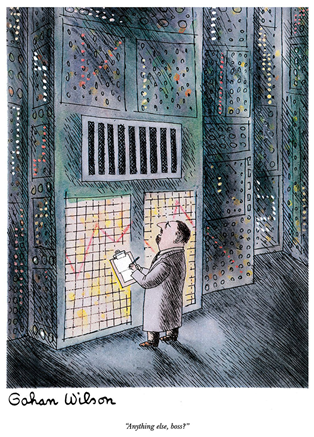

Yet neither automation nor computing is accepted as family by some AI researchers. In fact, a lot of earlier AI work itself doesn't make the cut. That includes AI achievements that would have seemed utterly miraculous to 80 generations of automation pioneers, like mimicking and translating human language and learning along the way. As pioneer Rodney Brooks says about the field, "Every time we figure out a piece of it, it stops being magical; we say, 'Oh, that's just a computation.'"

For example, optical character recognition was a classic AI problem until it was reliably solved. Then it became a mere utility. Ditto for brute-force chess programs, and of course the savagely effective algorithms that tune search results and our Facebook feeds. This rather extreme form of shifting baselines has its own name, the AI Effect, and a Wikipedia page.

The root of the problem was that early AI researchers were aiming for flexible intelligence like that of people and other animals. Intelligence that could play chess and also translate languages. Ending up with lots of brittle, single-purpose AIs like Google Translate and Deep Blue for chess feels more like a genie’s wish gone wrong. Or the legacy of the alchemists, who laid the groundwork for chemistry but never found their all-powerful Philosopher’s Stone.

The AI Effect isn't the only thing that obscures how big a role smart machines already play in our lives. Another is aliases. As a field AI has gone through several funding "winters" after results failed to live up to expectations. For long stretches that made "artificial intelligence" itself a term to avoid in grant proposals. So researchers got creative in rebranding their efforts: Optical character recognition, expert systems, pattern recognition, machine translation, information retrieval, and so on.

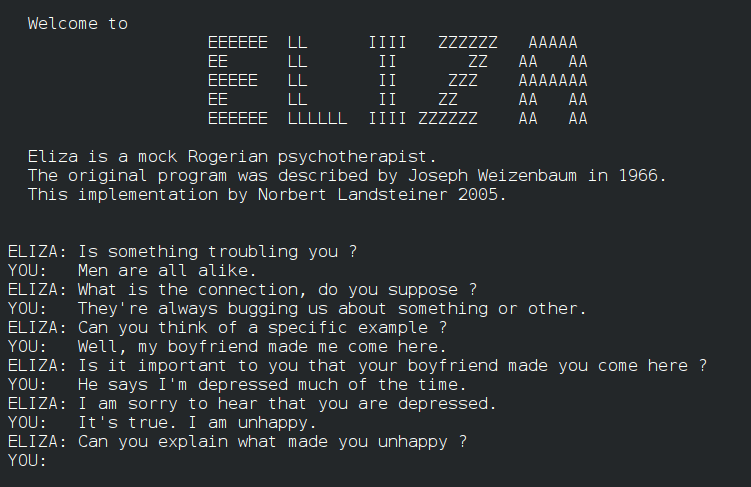

Faces and human or animal figures grab our attention. So does conversation, as here with the pioneering 1960s chatbot Eliza. Backend systems? Not so much. Creeping baby doll clockwork toy, patent model, National Museum of American History; screenshot from modern version of Eliza.

Then there's dullness. When kings made automation the centerpiece of their gardens they chose human and animal figures, not irrigation systems or faceless milling machines. Curious, chattering primates that we are, we want our smart machines to emulate the things that grab our attention. These include faces, communication, grasping, movement, and so on.

Unfortunately, digital computers and parts of AI itself more easily emulate the sorts of dull but useful intelligence we barely notice. Like correlating millions of payroll records, or optimizing the control system for a factory. Where most AI research tries to simulate conscious functions, these are closer to the hidden autonomic nerve functions that control our gut, or immune systems. Or even the slow "intelligence" of trees communicating through their roots. We're enthralled by even the simplest chatbot. But the far more powerful algorithms that trade stocks or balance our electricity grid stay invisible, apart from rare blackouts or crashes.

What counts as "real" AI is a worthy debate for practitioners and their funders. But taken together, the erasures above make the footprint of smart machines in our lives look far smaller than it really is. When it comes to the machines that can do our jobs or keep us alive, it may be more important to look at what human roles the technology replaces than the details of how it does so.

I'd wager that most people made redundant by gizmos over the last few centuries care little whether it was by mere automation, like an 18th century mechanized loom, or a digital computer, or the currently "genuine" AI of Deep Learning.

Future historians may look back and see three phases of smart machines overlapping in our time, and in our work lives: Traditional automation, the general-purpose digital computer, and the tip of that iceberg–modern AI. The distinctions that seem so fraught today may look distant and quaint, like 19th century engineers arguing over the importance of steam vs. pneumatic power.

But there's an immediate reason to see things whole: To better shape our own future. Back in the brass and gears of the first industrial revolution there were few precedents to learn from. Automation itself was ancient yet had only transformed a few niche fields. To apply it on a broad scale was a brave new world. Nobody knew how it might affect society; the workplace; education; the home. Today, we can try to learn from that 300-year history, including the vast acceleration since the electronic computer.

We edit our genes and fly to the moon. But the basis of our society is unchanged from the first cities over 5000 years ago: cereal crops grown with irrigation, or in fertile floodplains. We're actually more vulnerable to weather and pests than many of our ancient forebears–world food reserves range between 70 and 90 days. One bad year, whether natural or due to climate change, could starve billions.

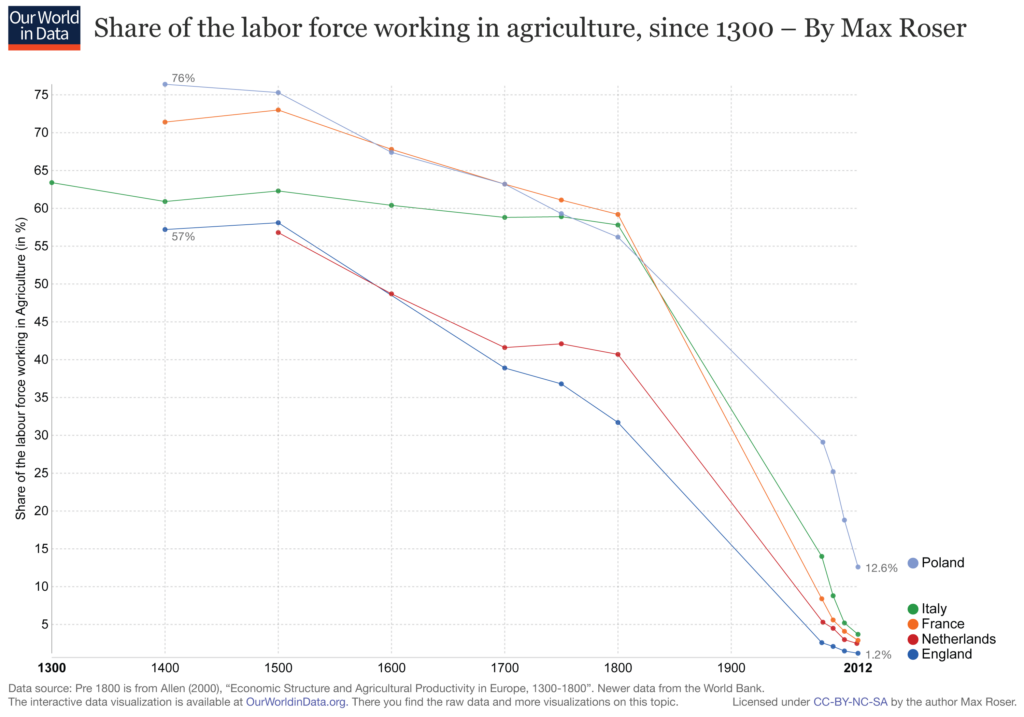

A big difference, of course, is that back in the days of ancient Ur nearly everybody worked the land. Or at least they guided the animals who worked the land for them. Long before automation, domesticated animals had already started to supplant human muscle. 4500 years of better farming techniques and new tools–the plow, the wheeled cart, the copper scythe, the horse collar–reduced farming to a mere 60% of the population in parts of Europe by the 1500s. Changes in social structure also helped, like the fading of feudalism. A third of the population could now take on other jobs, from merchant to craftsperson to entertainer.

The global colonial era partly reversed the trend, with vast plantations worked by enslaved or conquered peoples producing luxuries like cotton and sugar. But over time the industrial revolution offered a whole new set of aids for agriculture, from the traction engine to the steam pump, reducing the need for labor.

The cotton gin mechanized the painstaking process of separating the fiber from the seeds. In the short term, that actually increased both the demand for cotton and the sale of enslaved people. But it was part of a wave of farm automation that would ultimately reduce the agricultural workforce by over three quarters. Courtesy Wikimedia Commons.

In the industrialized world today, less than 10% of the workforce produces all of the food. We're just as dependent on those six inches of topsoil and the fact that it (usually) rains–and let's pray it continues to do so. But machines, more and more of them autonomous, do most of the work for us.

Employment in Agriculture, 1300-today. From ourworldindata.org.

Seeing the middle stages of this trend, 19th century economists predicted we'd be enjoying 15-hour work weeks by now, our days filled with poetry and fine, leisurely meals. It didn't work out that way. Instead, automation freed our great-grandparents to create lots of never-before-imagined jobs: truck driver, punched-card operator, support representative. From the point of view of, say, a 10th century farmer, over 90% of the jobs we do now are effectively made up–make-work stopgaps that simply prove, in the words of Mark Twain, that "civilization is a limitless multiplication of unnecessary necessaries."

Of course, whether you consider our modern jobs "unnecessary" or an unprecedented liberation of human potential is a matter of perspective. It's hard to argue we'd be better off if all the scientists and ballet dancers went back to the land or just put their feet up. It's also hard to argue that TaskRabbits and dog washers should be forced to work for their bare subsistence, as they are now, if our economy doesn't actually require them to do so.

Where's that 15-hour workweek for those who want it? The late David Graeber's darkly comic article "On the Phenomenon of Bullshit Jobs: A Work Rant" and followup book explore both sides of the topic.

But the irony we now face is that our current crop of "made-up" jobs are being threatened by a new and far more powerful kind of automation: modern AI. What will happen?

Two human traits AI researchers have struggled to replicate in silico are our flexibility and imagination. The percentage of us doing work necessary to actually sustain life may have dwindled to a rounding error. But thanks to our collective powers of imagination, our ever more abstract jobs still feel deeply real to us. That's because work has remained the very basis of our economic system. It's how we apportion wealth and other goodies, both luxuries and necessities. From the point of view of that hard-nosed 10th century farmer, we're over a hundred years into an era of largely "pretend" jobs. Yet it sure doesn't feel like pretending as we scramble to help the kids with homework and send a last work email before tumbling into bed. Those invented jobs let us buy very real food, shelter, and clothing.

A similar flexibility may carry us well into automation's next act. As AI eats up the previous set of made-up jobs we can doubtless create new ones. Some have already appeared: machine-learning system trainer, human post-editor for automated translations and transcriptions, and (soon) editor of original writing done by machine.

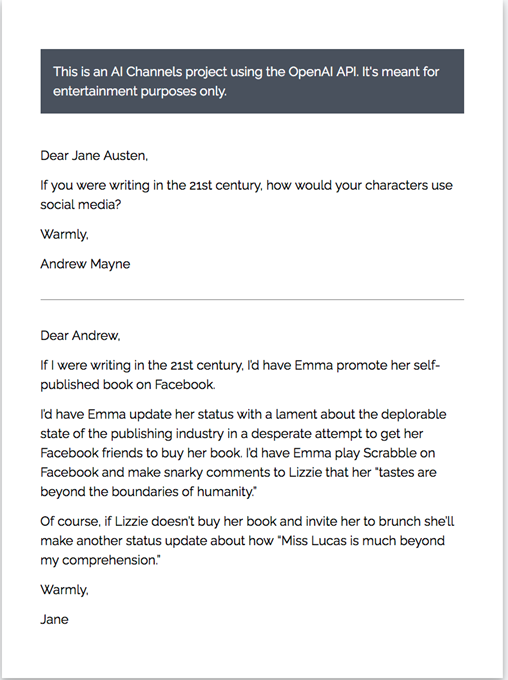

Here, the reply from "Jane Austen" is generated by AI Writer, which lets people exchange email with simulated historical figures. Created by Andrew Mayne, AI Writer is an application of OpenAI's GPT-3 language model. AI Writer

But there are caveats.

To keep smoothly replacing jobs with new ones, progress in AI needs to stay slow enough that young people can train for new roles. The slowly shifting baselines that leave us unconscious of the past depend on generational rates of change–the child sees as normal what the parent still finds odd and new. But problems come when change is fast enough to make masses of mid-career workers redundant. This has happened regionally over the last couple of centuries, such as the automation that produced the Luddite rebellion in parts of early 19th century England. On a global scale it could throw the entire economic system into chaos. And there are deep questions around a Universal Basic Income, the solution put forward by a number of AI pioneers as a kind of apology in advance for lost jobs. How can even a fat monthly check replace both the dignity and the real world status of work?

"Luddites" shown smashing machinery, early 19th century. Wikimedia Commons.

Another key question is about design. Do we try to build machines to augment human abilities... or replace them? It's less about technology than goals. Vaucanson's 1745 projection of an automated factory remains a powerful image of engineering humans clean out of the loop (in this case, the well-paid guilds of French master weavers).

By contrast, Siri is an example of modern AI dedicated to augmenting human knowledge. It's no coincidence that its main creators, Adam Cheyer and Tom Gruber, consider themselves disciples of Douglas Engelbart, whose life's work was around amplifying, rather than replacing, human smarts.4 Engelbart's Augmentation Research Center is best remembered for pioneering a good chunk of modern computing, from windows, online collaboration, videoconferencing, and clickable links, to word processing and the mouse.

But his two fellow travelers and main funders, J.C.R. Licklider and Bob Taylor, wrote of a Siri-like assistant named Oliver (Online Interactive Expediter and Responder)5 in their landmark 1968 paper "The Computer as a Communications Device."

Licklider, who also helped launch AI as a field, felt that human-level artificial intelligence was inevitable, though possibly hundreds of years away. But his heart belonged to augmentation. He thought the most interesting period in human history would be before full AI's arrival, when we work, play, and learn together with a growing palette of smart assistance.

Another factor is the progress of AI itself. As I hope I've shown, machine abilities so far have been wildly uneven and "spiky." From clockwork to neural networks, smart machines can do certain tasks thousands of times better than humans, and others pitifully or not at all. It's like Savant Syndrome taken to a brutal extreme.

We can't always predict the next spike in machine abilities, but that may not matter. Their very unevenness is what has left room for humans to fill in the gaps between spikes. The smart factory makes the toaster but can't load it on the truck.

But while the advance of machine smarts has been hugely uneven, it has been in only one direction: up. Meanwhile, our own inherent abilities haven't changed in about 200,000 years. What happens when those spikes of machine ability begin to converge, leaving fewer gaps? When an increasing profusion of specialized AI lets machines do not only, say, 60% of tasks better than humans, but 98%? Can we still maintain the suspension of disbelief underpinning our economic system, the one that says our work matters?

Cartoon, Gahan Wilson.

The ultimate caveat, of course, would come if AI ever reached its El Dorado of artificial general intelligence (AGI), like HAL in 2001 or the super-Siri in Her. Experts disagree on how quickly that might happen, with estimates ranging from 20 years, to 500, to never. But if it did it would mean machines as smart and flexible as humans and presumably getting more so, aided by hobbies like reading all of human literature in an afternoon. And with little need for weekends or coffee breaks.

It's pure science fiction to imagine what useful roles we might play after that. A key factor could be whether we'd also begun enhancing our own abilities with genetic engineering–it might prove faster to turbocharge proven intelligence than reverse engineer it from scratch with AI. Or if some people had actually achieved spooky Transhumanist-style connections with machines.

The essential question, of course, is whether we would still be in charge. Otherwise, our job options could dwindle to those in the quip about general AI attributed to pioneer Marvin Minsky: "If we’re lucky, they might decide to keep us as pets."

1. Previously, all tools were static; they didn't "do" anything without a human actively involved. Now, artifacts began to take on characteristics previously attributed only to life itself.

2. It built on the fact that a Chinese import, paper, had recently gone from a hand-made rarity to a product churned out by Europe's first major technology for mass production: water mills.

3. November 1745 edition of Mercure de France 49, p.116–120. https://books.google.fr/books?id=--h6GLQ-QtgC&pg=RA2-PA105&hl=fr&source=gbs_toc_r&cad=3 - v=onepage&q&f=false, retrieved February 2021.

4. As the great tech journalist and historian John Markoff has pointed out, in the 1960s the Stanford Artificial Intelligence Lab (SAIL) was across campus from Doug Engelbart's Augmentation Research Center (ARC), which focused on "IA," or intelligence amplification. SAIL's budget was many times bigger than ARC's.

5. After Oliver Selfridge, an AI pioneer and grandson of the founder of the English department store chain Selfridges.


Check out more CHM resources and learn about upcoming decoding trust and tech events and online materials. Next up on February 18, 2021: Should We Fear AI?
