Is there more to fear or to hope from artificial intelligence (AI) today and into the future? On February 18, 2021, CHM gathered a panel of leading experts with a variety of perspectives to answer questions and address concerns about AI technologies and robotics. A first-ever virtual town hall provided information and context to help us all become better-informed digital citizens as AI innovations become ever more intertwined with daily life. If you attended the event, or think you're up to speed on these issues, try our KAHOOT! Challenge and join the running to win a prize (quiz must be completed by March 11, 2021).

The conversation was shaped by real-time polls and questions submitted by the audience before and during the event. Moderated by Tim O’Reilly, founder, CEO, and chairman of O’Reilly Media, the panel included: Cindy Cohn, executive director of the Electronic Frontier Foundation, who focused on AI and civil liberties; Kate Darling, research specialist, MIT Media Lab, who looked at AI in the home and personal spheres; James Manyika, chairman and director, McKinsey Global Institute, who focused on AI and work; and Sridhar Ramaswamy, former SVP of Ads at Google and cofounder of Neeva, who explored issues around AI and advertising and other business models.

Tim O'Reilly opened the event by inviting Kate Darling to talk about her provocative idea that most conversations about AI focus on comparing robots to humans when a more helpful analogy would be our relationship with animals throughout history. She shared insights from her upcoming book, The New Breed: What Our History with Animals Reveals about Our Future with Robots (2021).

Kate Darling discusses how our past relationships with animals can inform our future with robots.

Cindy Cohn noted that AI can learn a lot more than animals and so it’s necessary to build in limits and transparency to make the technology serve us rather than the other way around. As we’ve already discovered, machine learning in a racist society can be racist. “AI will take the best and worst of us and amplify it,” said Sridhar Ramaswamy. Tech companies that want to make use of AI efficiencies do not think about societal consequences. If AI destroys jobs, we as a society must decide what to invest in to shape the future.

James Manyika provided historical examples to show that these kinds of concerns and issues around technology, society, and jobs are not new.

James Manyika explains how losing jobs to new technologies is an age-old issue.

When Google perfected algorithms that used people’s data to deliver targeted ads it became the business model for much of the content on the internet, creating many new jobs. Tech companies are good at adapting to take advantage of the latest innovations, said Sridhar Ramaswamy, who worked in one of those new jobs. At Google in 2005, his team developed the largest machine learning system ever created in order to optimize revenue from targeted advertising. That advertising model creates incentives for behavior that allows companies to monopolize entire sectors. His new company, Neeva, aims to offer an alternative: an ad-free search platform for subscribers.

How can we prevent organizations, governments, corporations or even individuals who can afford AI technologies from pulling even further away from those who can’t, worsening global inequality and instability? Sridhar Ramaswamy and Cindy Cohn agree that this is an area where policy can make a difference.

Sridhar Ramaswamy and Cindy Cohn discuss remedies for tech monopolies.

James Manyika noted that at a fundamental level, AI and machine learning are scale-based, which leads to breakthroughs. However, computing power and data can also easily be concentrated in the hands of a few entities, and the most successful companies now capture 80% of available profit pools. Monopoly models of 80 years ago were different, he said, so we have to be careful in how we think about market power for this age. Cohn responded that this has been done in other areas of the law. Patents can be solely exploited for a period of time. Copyright provides a certain set of rights but not others. We need to think about protecting the incentive to create giant datasets that can benefit humanity without paving the way for monopoly power.

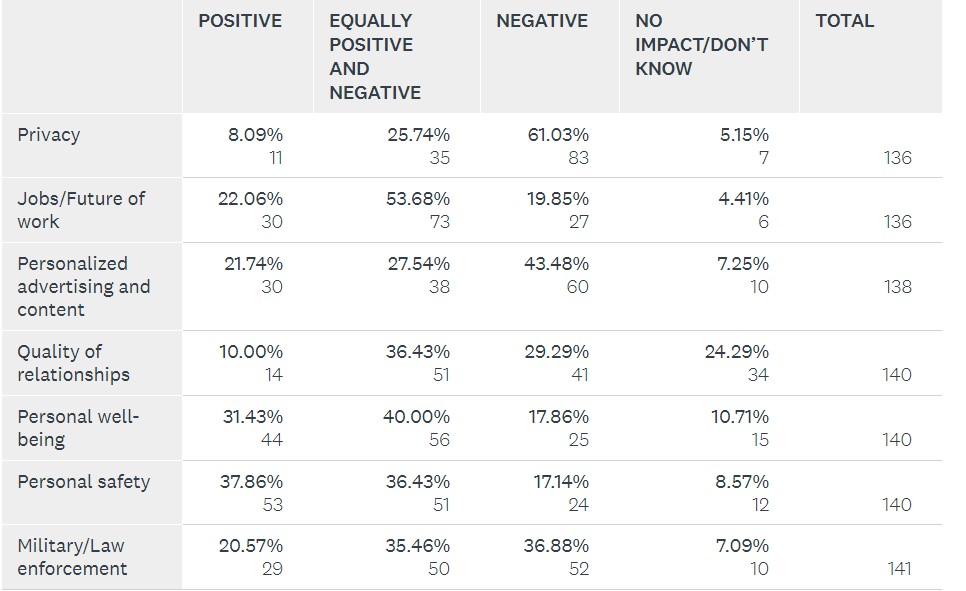

A poll of the audience revealed that people feel AI will probably have a more negative than positive impact on privacy, personalized advertising and content, quality of relationships, and the military and law enforcement. They felt it would have roughly equal positive and negative impacts on jobs, and a more positive than negative impact on personal safety.

Kate Darling questioned whether people are even afraid or hopeful about the right things. She feels that people often worry about AI capabilities that are still in the realm of science fiction while they don’t worry about real problems like monopoly power. James Manyika noted that while AI can complement people’s work skills, it can also have the effect of deskilling existing jobs.

People’s concerns about privacy are valid, Cindy Cohn said, and addressing fears about lack of control over data and privacy could go a long way toward solving many other problems.

Cindy Cohn talks about how privacy is not valued in our society.

Is there a way that AI can actually help us achieve more power as individuals, perhaps through our own personal AI that helps us navigate privacy issues? Moderator Tim O'Reilly's question prompted plenty of audience questions and an energetic exchange. Sridhar Ramaswamy said, “You get what you pay for,” and argued that if you use a free service it’s implicit that your data will not be private. Cindy Cohn remarked that companies don’t hesitate to change terms and conditions if it benefits them and that even some paid services still don’t treat data privacy as a right. Tim O’Reilly wondered whether personal AI agents could look out for our interests by summarizing lengthy end-user license agreements and pointing out vulnerabilities.

The sustainability of life on earth is a big question and AI can do a lot, when, as Cindy Cohn noted, it is coupled with political will. Tim O’Reilly reported that the Institute for Computational Sustainability believes that AI is perfectly designed to help humans model complex interactive systems, which is the essence of sustainability. Sridhar Ramaswamy pointed out that AI is not inherently for or against sustainability, but private companies probably won’t pursue it on their own without government incentives.

Much of public investment in research today, said Cindy Cohn, goes to private companies that then reap the profits because governments have not required them to provide open access to their breakthroughs. James Manyika and Sridhar Ramaswamy believe that government funded AI research will be extremely important moving forward.

James Manyika and Sridhar Ramaswamy explain why we need more government funding of AI.

Publicly available AI technologies and datasets are necessary. One initiative that’s gaining traction, according to James Manyika, is similar to the National Institutes of Health and would be a consortium model to build compute capacity for a public infrastructure to support research. Cindy Cohn believes that making the results of research work available to the public should be a requirement for getting a government contract, as it is in many areas already.

Kate Darling drew on her research with robots and on the history of human relationships with animals to explain why relationships may not be an area where people need to worry about AI.

Kate Darling explains why robots won’t destroy our relationships.

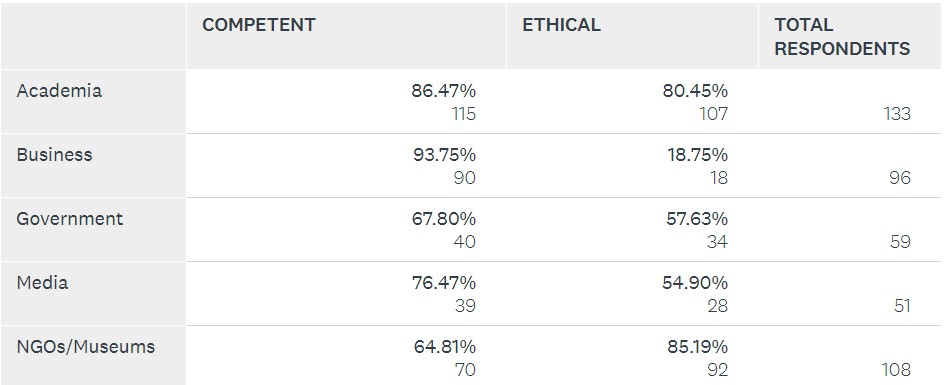

A second poll of the audience showed that they trusted NGOs and museums, as well as academia, more than business, government, or the media to inform them about the potential impacts of AI. Clearly, there’s a trust issue with many institutions, and the panel explored what that means for the future of AI.

Sridhar Ramaswamy, James Manyika and Cindy Cohn discuss who people trust to tell them about AI.

Cindy Cohn noted that the business model of providing a “free service” in order to gather people’s data has, with good reason, eroded people’s trust over time.

Can there be a shift so people will pay for a service? Sridhar Ramaswamy believes that for important functions like search, an ad-free subscription service like that offered by his company, Neeva, can succeed. He thinks that there will be a shift in consumption patterns away from multiple free sources and back to a few trustworthy ones. Cindy Cohn hopes that more options between free and paid services can be explored, particularly so that privacy doesn’t remain something that you can sell, a situation in which rich people can afford privacy and the poor cannot.

Productivity used to be linked to wages and was well-distributed, said James Manyika. But these days there’s productivity growth without wage gains. The way our economy works creates surpluses, but they don’t go to those who create them through their work. As a society, what can we do to correct that? Ideas to redistribute wealth like universal basic income (UBI), subsidies to the poor, and more taxes on the rich are being explored. Manyika thinks we need radical solutions to keep our economy healthy.

The panel agreed that AI must not perpetuate disparities and that capitalism needs to change to ensure it does not create greater inequality. Kate Darling joked that we should “tax the rich and smash capitalism.” Indeed, the current system is designed to share the wealth with a “remarkably small set of people,” said Sridhar Ramaswamy. James Manyika believes that capitalism must continue to evolve and adapt but under new rules. In the meantime, Cindy Cohn offers ways people can take action: talk to each other and pull together, make their voices heard by joining organizations dedicated to change, vote, and build ethical AI products.

As the results of the third audience poll showed, people’s trust in AI will increase if there is more transparency about the downsides, more education and training, a code of ethics, and regulation. Those seem to be eminently practical solutions that could help AI technologies create a positive future for us all.

Watch the full event video.

Read the related blog, Relax. Machines Already Took Our Jobs. Check out more CHM resources and learn about upcoming Decoding Trust and Tech events and online materials.
