AI powered by deep learning and neural networks surrounds us today. From online shopping recommendations to self-driving cars, Alexa to facial recognition, to medical diagnosis and drug discovery (particularly salient to the COVID-19 pandemic), we are interacting with neural network-based systems every day without realizing it. And just as the symbolic AI of yesterday advanced through applying AI to game playing, today’s deep learning systems have also learned from playing games. In fact, one of the most influential demonstrations of the superiority of deep learning over old-fashioned symbolic AI arrived when Google DeepMind’s AlphaGo beat the world champion in the ancient Chinese game, Go.

As Part 1 described, while chess AI had beaten humans back in 1997, Go was so much more complex that it seemed to be the last bastion of human creativity and strategic thinking . . . until 2016. AlphaGo’s defeat of grandmasters Lee Sedol in 2016 and Ke Jie in 2017 shocked the East Asian nations of China, Japan, and South Korea where Go is widely played, serving as their “Sputnik moment” according to AI researcher Kai-Fu Lee.1 This event has spurred China into investing billions into deep learning research and deployment, which could have massive economic and geopolitical ramifications if China leapfrogs the US in AI. More than China beating the US in an AI race, however, Lee is concerned with the potential job losses and socio-economic inequality that will result from the deep learning revolution. If AI can beat the best humans at that most challenging, ancient, and noble game of Go, what is there left for humanity?

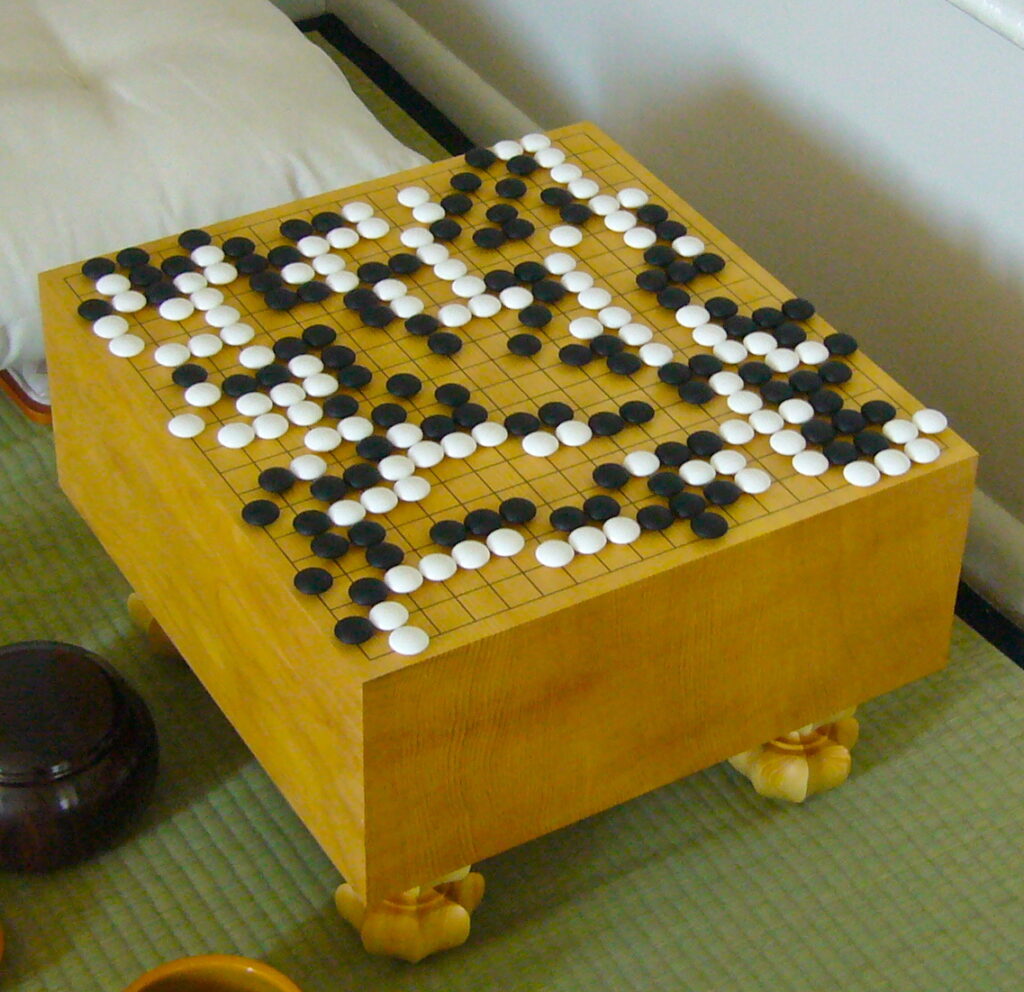

A Go board. Players take turns placing pieces (stones) on the intersections of grid lines. Stones are captured by being surrounded by the opposite color. Image: Wikipedia/Public Domain.

As we saw in Part 1, traditional symbolic AI, based primarily on heuristic search, was running out of steam by the 1990s. And despite Deep Blue’s success at beating the world champion in chess, its use of brute force search techniques actually showed that little intelligence was needed to play grandmaster-level chess if one had enough raw computing power to throw at the it. Chess may have been a complex enough problem in the 1950s to drive AI research, but by the 1990s, it was too simple. Much more complex was the Chinese game of Go, a game dating back to before the 4th century BCE.

Go is also known as weiqi in Chinese and baduk in Korean, go or igo being the Japanese name for the game. In Go, players place alternating black and white stones at intersections of a 19 by 19 grid, and surrounding your opponent’s stones with your own of the opposite color would convert all stones in between to your own color. Go is very easy to learn, yet very difficult to master as very complex relationships and patterns can emerge. Similar to chess’s status in the West, Go had for centuries in East Asia been associated with education, scholarship, and creativity, virtues aspired to by the elites of Confucian societies. Kai-Fu Lee writes that “the game was believed to imbue its players with a Zen-like intellectual refinement and wisdom.”2 Technically, Go was impervious to brute force search: a player could place a stone in 19 times 19 or 361 positions, a branching factor of 361 (compared to 38 for chess),3 resulting in 10170 board configurations, greater than the number of atoms in the universe!4 Obviously, human players do not use brute force search when they are playing Go, otherwise the solar system would be dead long before a game ended!

South Korean grandmaster Lee Sedol, right, playing against AlphaGo. Courtesy Google DeepMind.

Given this problem, how was DeepMind able to make AlphaGo beat the world champion at Go? In between 1997 and 2016, a revolution had occurred in AI. All of the old symbolic approaches to AI, including expert systems, involved preprogramming knowledge and rules into programs. Today such systems are sometimes called “Good Old Fashioned AI,” or “GOFAI.” In the 2000s, a different approach, which had begun as early as the 1960s but had never caught on with the mainstream of AI, began to gain steam, known as “machine learning.” In machine learning systems, instead of a human programming in the rules, the program learns the rules by itself from data. The Internet boom of the 1990s provided this data.

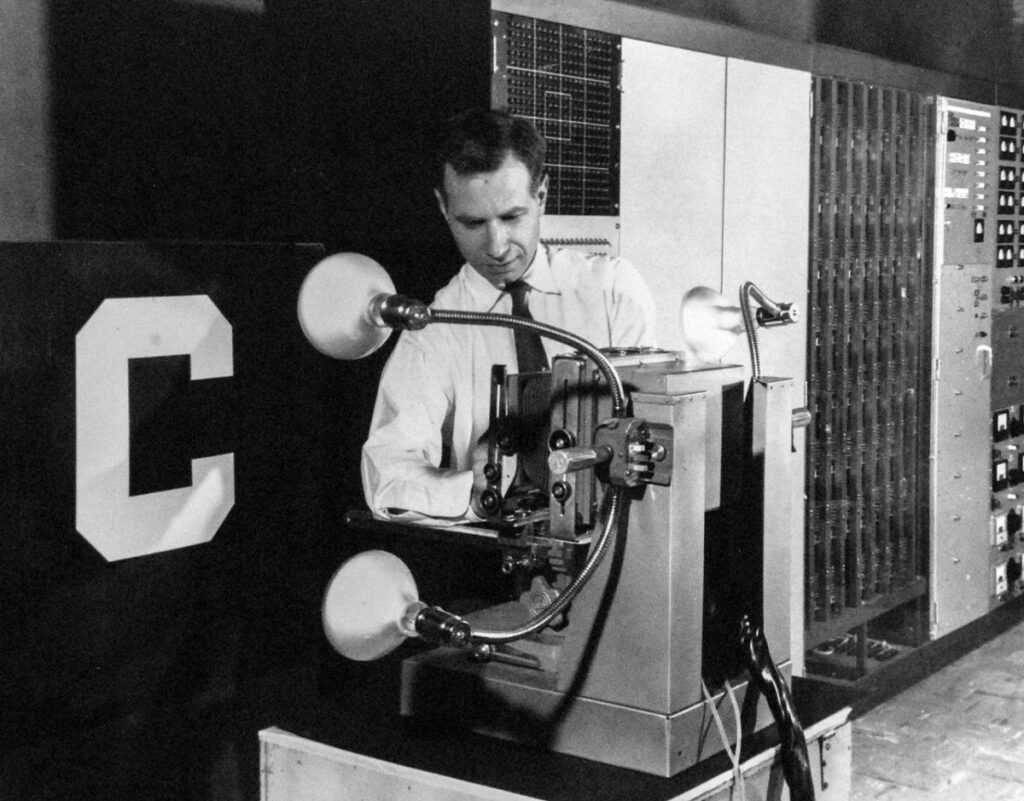

Arthur Samuel coined the term “machine learning” and was the first to use the technique for his checkers program in 1959, which ran on the IBM 700 series of mainframes. Here Samuel demonstrates his program on the IBM 7090 in 1959. Courtesy IBM Corporate Archives.

Frank Rosenblatt with the Mark I Perceptron, Cornell University, ca 1960s. A piece of a second perceptron, the Tobermory, is in the CHM collection. Image: Wikimedia Commons.

In 2012, a particular form of machine learning, called “deep learning,” became the dominant form of machine learning. Deep learning is based on artificial neural networks, which had been a topic of research going back to the very earliest days of computers. In the 1960s, Frank Rosenblatt at the Cornell Aeronautical Laboratory created the Perceptron, arguably the first artificial neural network which became a model for many of the neural networks of today. His original network had certain limitations, however, and after symbolic AI pioneers Marvin Minsky and Seymour Papert wrote a book criticizing perceptrons in 1969, most neural network research stopped.

Neural network research revived in the 1980s around a group of cognitive scientists at the University of California, San Diego, among them 2018 Turing Award winner Geoffrey Hinton, who began to apply a new algorithm called “backpropagation” to the problem of training deep neural networks, solving the limitations of Rosenblatt’s perceptrons. This approach to AI using neural networks came to be known as the “connectionist” or “Parallel Distributed Processing” school. They believed that, unlike the symbolicists, who modeled the human mind as if it were a symbol-processing computer, we should try to base our models on the brain itself, which is made up of biological neural networks. Such networks would prove to be much better at pattern recognition tasks such as vision and speech that even small children could do better than the best symbolic AI programs.

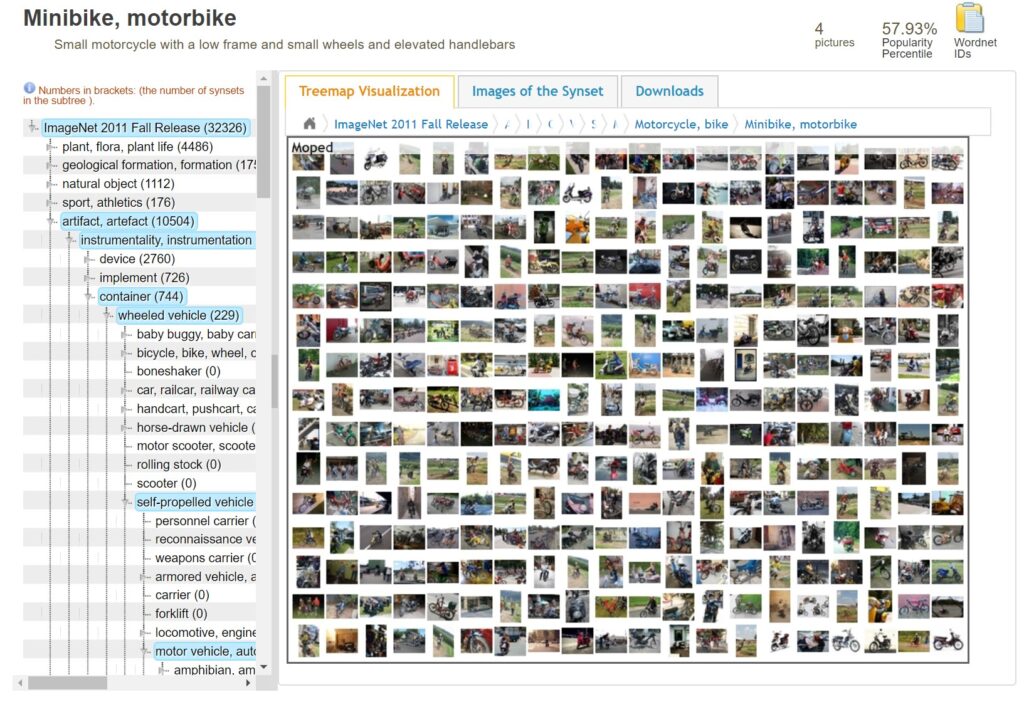

By 2012, two things would make possible the deep learning revolution. First neural networks work much better than simpler machine learning methods when a lot of data is available. The availability of very big datasets that could be found via the Internet and labeled via crowdsourcing made neural networks much more viable. One crucial dataset was ImageNet, a giant database of images organized by Stanford professor Fei-Fei Li. There was a yearly contest to see which machine learning system could do the best at recognizing these images.

Screenshot of the ImageNet database.

Li speaking at AI for Good Global Summit 2017. Fei-Fei Li, Sequoia Professor of Computer Science, Co-Director of the Human-Centered AI Institute, and former Director of the AI Lab, Stanford University. She also served as Vice President and Chief Scientist of AI/ML at Google Cloud from 2017-2018. The ImageNet database was her signature contribution to AI. Image: Wikipedia, Creative Commons License 2.0.

Second was the availability of a large amount of computational power in the form of graphics processing units, or GPUs. GPUs are used in videogames to draw 3D graphics. They accelerate a form of math known as matrix math, which is used by 3D. It turns out that matrix math is also used in neural networks, making GPUs a good match for accelerating the training of neural networks. Alex Krizehvsky, a student of Geoffrey Hinton, used GPUs in his “AlexNet” neural network which won the prize for the ImageNet competition in 2012, showing greater than human reliability in this task. This milestone was the turning point after which most of the activity in AI, both in research and in commercial applications, would be focused on deep learning.

Alex Krizehvsky, a student of Geoffrey Hinton, developed a convolutional neural network trained using GPU hardware, which won the ImageNet competition in 2012. “AlexNet” as it is now known, sparked the current deep learning revolution. Courtesy of Alex Krizehvsky.

Being a system that plays a board game, AlphaGo still uses a form of tree search, albeit an updated version of search that made use of randomness, called “Monte Carlo tree search.” Beyond this, however, AlphaGo also uses deep learning to help it decide what moves to make, unlike symbolic chess programs which used pre-programmed evaluation functions that wouldn’t change. AlphaGo uses one neural network, called the “policy” network, to make these choices. A second network, the “value” network, predicts which player will win the game.5 These networks are fed the records of actual human-played games of Go in the supervised learning stage. Then AlphaGo plays itself thousands of times in the reinforcement learning stage. Many of these reinforcement learning techniques were first pioneered by Gerald Tesauro with his TD Gammon backgammon program in 1992 at IBM. The system’s “experience” from playing itself, in addition to the data from existing human games, stands in for the kinds of expert knowledge and hand-crafted heuristic rules that symbolic AI researchers used to use to help prune the search tree. The difference is that machine learning does it much better, and in a more adaptive way, and is not constrained by the limits of human knowledge of the game.

South Korean Go master Lee Sedol, born 1983, was the second ranked international player in 2016, when he was defeated by AlphaGo 1-4. Image: Wikipedia.

All its training allowed AlphaGo to beat Lee Sedol, considered the best Go player of the last decade, as well as Ke Jie, the world’s number one player in 2017. AlphaGo even managed to discover moves no human player had ever considered before, especially in move 37 of game 2 of the match against Lee Sedol. This move, which commentators originally thought to be a mistake by AlphaGo, not only led to AlphaGo winning the game, but has since transformed the way human players see the game.

Since then, DeepMind has found that training an AI system with prior human knowledge is not only unnecessary, but actually less effective. AlphaGo’s successor AlphaGo Zero was trained only through reinforcement learning, playing games against first AlphaGo and then itself. Its successor AlphaZero can play Go, chess, and Japanese chess or Shogi all from reinforcement learning only. AlphaZero beat the best chess program using traditional search techniques, Stockfish, while being way more efficient, searching only 60 thousand positions a second compared to 60 million for Stockfish.6 It is the most general two player game playing AI system so far.

Using deep reinforcement learning, DeepMind has also created AI systems that can play real-time games. Even before AlphaGo, DeepMind had systems that could play Atari video games better than human players.7 Since then, DeepMind has created AlphaStar, which can beat the top players of the real-time strategy game Starcraft, which combines real-time response and imperfect information with short-term tactical maneuvering as well as long-term planning, economic management and strategic map control. Because of these features, after Go, Starcraft was seen as the AI’s next grand challenge. DeepMind showed that AlphaStar could beat top human players even without the superhuman ability to see the entire map or perform more actions per minute than a human.8 These systems can become so good because with reinforcement learning, a computer can play games much faster than humans can, and can accumulate the equivalent of thousands of years of human experience in just a few days. It seems that with games, the sky is the limit.

Nevertheless, does expertise in game playing transfer to the real world, and can it lead to true general intelligence? As we saw in Part 1, research on games and chess in particular helped advance the development of search algorithms that continue to see use today. In addition, Arthur Samuel’s checkers playing program (1959) was an early milestone in machine learning (Samuel coined the term), though it did not use neural networks. AI research on games has remained steady as a percentage of all AI research, according to IBM Deep Blue leader Murray Campbell, and the actual number of publications has increased significantly.9

But although the earliest AI pioneers thought getting AI to play games would naturally lead to human-level intelligence, since then there has been some debate. There has been a shift in AI away from the “toy” puzzles that dominated early AI toward real-world problems. Roboticist Rodney Brooks argued in 1990 that true general AI had to come from grounding in the physical world, unlike a game which is necessarily a simplified simulation of it.10 But now that deep learning has succeeded in many real-world tasks where symbolic AI failed, some have renewed confidence that game-playing AI has relevance elsewhere.

Games, like scientific experiments, still provide a constrained space so that researchers do not have to tackle the full complexity of the real world, but can bite off a portion of it at a time. Different games highlight different aspects of that world, just as experiments do. Google’s DeepMind team, for example, is confident that the techniques used in AlphaGo, AlphaZero, and AlphaStar are leading to more general purpose AI, and have applications to science. They pointed to AlphaGo’s innovative move 37 in game two against Lee Sedol that AI can be creative in a way humans can be. AlphaGo’s successors are increasingly general, playing many different games, and have even been repurposed for such tasks as quantum computing.11 DeepMind’s AlphaFold AI uses deep learning to predict how protein molecules fold in 3 dimensions, which could help scientists develop new drugs.12 Researchers working on the RoboCup Challenge, which aims to build robots capable of beating the World Cup championship team at soccer, argued that this work could lead to robots for elderly care or disaster rescue. Indeed, this research has led to robots that participated in rescue operations after 9/11 and the Fukushima nuclear disaster.13 One robotics startup that came from RoboCup research led to Kiva, the Amazon subsidiary that makes its warehouse robots.

Others are skeptical, pointing to the ways that games are different from the real world. Geoffrey Hinton has argued that reinforcement learning is too inefficient for achieving human-level intelligence because humans don’t have the luxury of speeding up time the way computers do.14 When IBM’s Watson computer beat human Jeopardy contestants in 2011 using both search and machine learning, it represented a new milestone because Watson could seemingly understand ambiguous human language. IBM hoped to repurpose Watson for medical diagnosis, but found there wasn’t a viable market there. Watson has not been successful in real-world medical applications. Watson had trouble extracting insights from new medical literature, had difficulty dealing with inconsistently written health records, and couldn’t compare new patients to previous ones to find patterns, all things a human doctor does easily.15

In addition, most deep learning systems today are fragile, and narrowly special-purpose, because they have to be trained with a lot of data. This leads many to believe that deep learning is ill-suited to be the path towards artificial general intelligence. Indeed, if generality is the key towards true human-level intelligence, game playing AIs, which traditionally have been single-purpose, may not ultimately be the answer either, despite AlphaZero’s success in that direction. Real life is not always a zero-sum game in which there are only winners and losers. Sometimes humans can “win” by not winning, by optimizing for human values other than money or power.

Besides its usefulness to computer science, however, building AI to play games may simply be fun, another way for humans to engage in play. At a conference talk on the history of AI and Games, Michael Bowling of the University of Alberta speculated that play evolved in humans, like in many predatory animals, as a way to practice hunting behaviors that might otherwise be dangerous to do. Games provided structure and motivation to engage and improve such skills.16 If that is true, playing games are part of what it means to be human. So it makes sense that as we seek to make artificial humans in the form of AI, we would like to endow them with the ability to play games. Yet as we’ve seen, the success of Deep Blue and AlphaGo at beating humans exhibits a pattern in AI: that once an AI system can beat humans at a task, we humans no longer think of that system as “intelligent” any longer. At the end of the day, the measuring stick for intelligence is still ourselves. Playing games alone, it seems, isn’t what makes us smart, especially if our own creations can beat us at our own game. But it is part of what makes us human.

1. Kai-Fu Lee, AI Superpowers: China, Silicon Valley, and the New World Order. (Boston; New York: Houghton Mifflin Harcourt, 2019), 1–5.

2. Lee, 1.

3. Nils J. Nilsson, The Quest for Artificial Intelligence, 1st edition (Cambridge ; New York: Cambridge University Press, 2009), 325.

4. “AlphaGo: The story so far,” DeepMind, accessed May 28, 2020, https://deepmind.com/research/case-studies/alphago-the-story-so-far.

5. “AlphaGo.”

6. David Silver et al., “AlphaZero: Shedding new light on the grand games of chess, shogi and Go,” DeepMind, December 6, 2018, https://deepmind.com/blog/article/alphazero-shedding-new-light-grand-games-chess-shogi-and-go.

7. Volodymyr Mnih et al., “Playing Atari with Deep Reinforcement Learning,” ArXiv:1312.5602 [Cs], December 19, 2013, http://arxiv.org/abs/1312.5602.

8. AlphaStar team, “AlphaStar: Mastering the Real-Time Strategy Game StarCraft II,” DeepMind, January 24, 2019, https://deepmind.com/blog/article/alphastar-mastering-real-time-strategy-game-starcraft-ii.

9. AI History Panel: Advancing AI by Playing Games (AAAI, Tuesday, February 11, 4:45–6:15 PM), https://vimeo.com/389556398.

10. Rodney A. Brooks, “Elephants Don’t Play Chess,” Robotics and Autonomous Systems, Designing Autonomous Agents, 6, no. 1 (June 1, 1990): 3–15, https://doi.org/10.1016/S0921-8890(05)80025-9.

11. AI History Panel.

12. Andrew Senior et al., “AlphaFold: Using AI for scientific discovery,” DeepMind, January 15, 2020, https://deepmind.com/blog/article/AlphaFold-Using-AI-for-scientific-discovery.

13. AI History Panel.

14. Geoffrey Hinton, G E Hinton Turing Lecture, ACM A M Turing Lecture Series (Phoenix AZ, 2019), https://amturing.acm.org/vp/hinton_4791679.cfm.

15. Eliza Strickland, “How IBM Watson Overpromised and Underdelivered on AI Health Care,” IEEE Spectrum: Technology, Engineering, and Science News, April 2, 2019, https://spectrum.ieee.org/biomedical/diagnostics/how-ibm-watson-overpromised-and-underdelivered-on-ai-health-care.

16. AI History Panel.

![]()