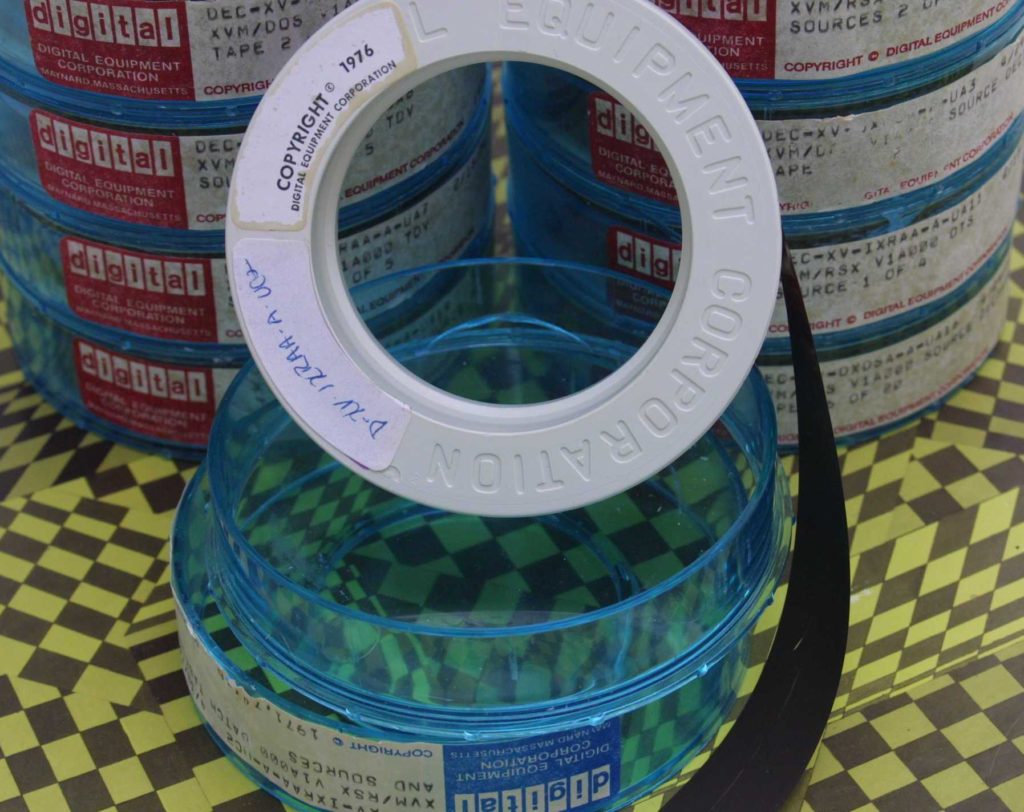

PDP-15 DECtapes for RSX-15, an early DEC real-time operating system. The collection includes a complete set of about 50 tapes that I read using an original DECtape drive with a custom interface to a PC.

The collection, preservation and presentation of software artifacts at CHM has been actively pursued during my time as the software curator here, though most of the work has been going on behind the scenes. Since we now have a nice venue for talking about this work with the @CHM blog, I wanted to share with you some of the approaches and challenges of collecting software.

During the development of the Revolution exhibit, we made the conscious decision to not have software isolated as a separate topic in the exhibit narrative. We felt that the best way to present the subject of software to the general audience was to use a ‘systems approach’ when dealing with a particular era in computing, that is, to include elements of hardware, software, ‘makers’ and ‘users’. It turned out that this presented us with a problem; there were many more artifacts in the collection about the ‘makers’ as opposed to the ‘users’. In the case of software, it turned out our collection was heavily weighted towards operating systems, compilers, and other tools produced by computer vendors, and much less about the application of these tools from the perspective of the day to day computer user.

How do you preserve the user-written applications for minicomputers and mainframes? Does it make sense from a physical storage perspective to do so? Does enough information, say, from a 1970’s minicomputer hotel reservation system even exist today to preserve? If you have the source code for it, would it make any sense to anyone studying it in the future? If you just had the binaries and a software simulation of the hardware could you actually run it in the future?

CHM has acquired the Engelbart/SRI tape collection (X5726.2010, 52 boxes of magnetic tape), containing the source code for NLS as well as all technical content and correspondence generated by the SRI Augmentation Research Center (ARC) from 1968 to 1980.

The problem, as we observed when constructing a computing exhibit beyond the timeframe of the 1970s is that the computing world has evolved into a small number of hardware platforms with a generally common set of development tools, while the application and diversity of computing has exploded due to Moore’s Law and the fact that general purpose computers are now ubiquitous, networked, and inexpensive.

So, given that the world has changed, what does the Museum, as an institution with a mission to “preserve and present for posterity the artifacts and stories of the information age” collect? Where do the artifacts and stories come from? How much time, effort and cost will there be to preserve and make them available for museum or external use? Ultimately, who is the audience for what has been collected? As an institution with physical and financial constraints, these aren’t questions in the abstract. They represent real choices that have to be made with regards to space planning for physical documents and artifacts, and for ‘born digital’ ones that require media recovery, permanent archival indexing, and storage.

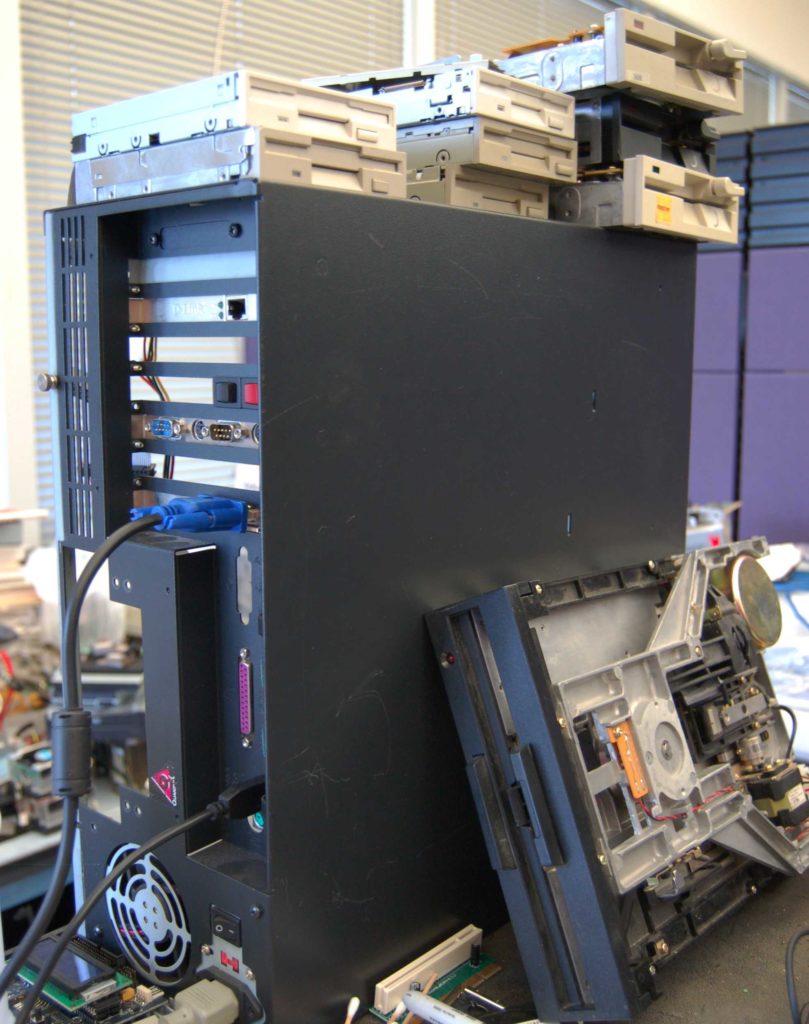

Reading old software formats, such as floppy disks, requires custom equipment. This is my floppy disk reader connected to a PC. Notice all the different sizes, including an 8” floppy disk drive.

I have been collecting and reading software since the late 1970s. My focus has been to find anything that still survives from the mainframe and minicomputer eras, precisely because they are so rare and at risk of being lost forever. It turns out that as these big systems were scrapped, the software was scrapped along with them. The manufacturers had no reason to keep old software around for obsolete architectures, so they didn’t.

During the time that I have been with the museum, new sources for surviving collections of software for these sorts of machines have dwindled. In a few cases, we have acquired collections of surviving archives from companies (in particular from HP) which will require a significant amount of time to recover from their original media, catalog, and ingest them into CHM’s digital repository. Along with these donations, we try to obtain the rights to redistribute the software we obtain for non-commercial use. Len Shustek, the founder of CHM, and I have been working hard to track down source code and obtain permission from the companies to release the code. Receiving permissions from IBM to distribute APL/360, Xerox PARC releasing the code for the Alto, and Unisys releasing BTOS are some of our recent success stories. Len just wrote a nice blog entry on APL, I will be doing the same on the Alto and BTOS in the future.

The Stoyan collection on LISP programming documents its origins, evolution, and use of the language for many applications especially artificial intelligence. The 100 linear feet collection is comprised of memoranda, manuals, technical reports, published and unpublished papers, source program listings, promotional material, computer tapes and floppy disks covering 1955 through 2001.

In addition to the work done by the CHM staff, volunteers have been working on specific directed projects. They have been a huge help in processing the collections we’ve received in the past few years. The work that Paul McJones and Randy Neff did cataloging the Stoyan LISP documentation archive and the work that J. David Bryan did to completely index the tens of thousands of HP 1000 minicomputer software files from the archival backup tapes that were donated by Hewlett Packard are two examples.

Related to help from our volunteers is my work to provide access to the software collection to preservation communities around the world by scanning documentation and reading different media such as 1/2″ magnetic tape, DECtape, and punched cards. There are now dozens of other organizations actively working to preserve computing history either by collecting and restoring old computers, or working on simulators that have been asking for copies of the software and documentation that we have in our collection.

Finally, I am excited about the development of a robust digital repository at CHM to preserve the work that I have done over the past thirty years. My hope is that it will make it easier for people to access what we have acquired and indexed. Learn more about CHM’s Digital Repository project.