Take a walk through the exhibit.

Watch an interview with Ameca the robot.

Today’s chatbots and artificial intelligence are changing science, art, work, and education. Wondrous? Worrisome? Or just another technology that will fit seamlessly into our lives like others before it?

Everyone speculates about chatbots’ impact. They seem to converse intelligently, something we long thought uniquely human. Yet they aren’t conscious, simply remarkable fusions of math, data, and computing power.

What do they hold in store? Today’s extraordinary chatbots have a long history. And history is a powerful guide.

Chatbots are computer programs designed to engage in the distinctively human activity of conversation—written or spoken—using everyday natural language. To accomplish this, chatbot creators have drawn on evolving artificial intelligence techniques and computer capabilities.

The goal? Make computers more helpful, capable, personal, and easy to use. Some developers have created chatbots to model human experiences and explore the limits of computing. Many strive to turn chatbots and their underlying technology into major businesses—the next big thing.

What if machines or other creations could talk, act, or think? What would it mean if we could build them ourselves? For centuries, in myth and in fiction, different cultures have contemplated the implications, benefits, and dangers of this longstanding dream. These stories still shape our understandings of today’s technologies.

Reading, writing, speaking, thinking: We’ve long viewed such abilities as uniquely human, but we’ve also long tried to create machines that mimic, or perhaps recreate them.

Alan Turing. Credit: Science History Images/Almay Stock Photo

“You can buy a machine now to do some of your thinking for you,” proclaimed a 1949 Popular Mechanics article. This was true for mathematical calculations, which digital computers had begun doing at superhuman speed. If computers do things that people can do using their human intelligence, do computers also think?

Alan Turing’s work with digital computers in this era convinced him that computers could perform tasks once considered exclusively human. In 1950, he published a paper proposing an “imitation game.” A human and computer would write to each other, asking and answering questions. If people thought they were communicating with a person, Turing felt it was reasonable to say the computer could think. This became known as the Turing Test.

Researchers gathered to address this question during a summer workshop at Dartmouth College in 1956. Their goal? Making computers do things that we do using our intelligence. They agreed to call it “artificial intelligence.” They didn’t agree on how to achieve it.

Some advocated cybernetics, dynamic systems responding to feedback—like thermostats reacting to their surroundings. Others emphasized machine learning using statistics and probability. Ultimately, two primary approaches emerged. Symbolic AI used logic and rules to manipulate symbols representing concepts or objects. Neural networks were hardware and software models inspired by living brains. Initially, symbolic AI dominated, then statistical machine learning became more widely adopted. Neural networks now drive the latest AI.

Some two-dozen researchers interested in machines able to think and learn attended the Dartmouth Summer Research Project on Artificial Intelligence in the summer of 1956, where they named the emerging field. From left to right, Oliver Selfridge, Nathaniel Rochester, Ray Solomonoff, Marvin Minsky, Peter Milner, John McCarthy, Claude Shannon. Credit: Photo by Nathanial Rochester/Courtesy The Minsky Family

Some two-dozen researchers interested in machines able to think and learn attended the Dartmouth Summer Research Project on Artificial Intelligence in the summer of 1956, where they named the emerging field. From left to right in the photo above: Oliver Selfridge, Nathaniel Rochester, Ray Solomonoff, Marvin Minsky, Peter Milner, John McCarthy, Claude Shannon.

Professor Bernard Widrow discusses his experience of the Dartmouth Conference.

The April 2, 1965 cover of Time magazine. Note illustrator Boris Artzybasheff’s depiction of the computer as both a giant brain and a conversationalist, typing away and talking on the telephone. Credit: TIME USA, LLC.

When you do math, you use symbols to represent numbers and processes. For instance, the symbol “5” represents a specific quantity and “+” represents addition.

Symbolic AI takes a similar approach, representing the world through symbols. It then applies logic rules to prioritize and process those symbols. The primary type is “lookahead” search, exploring multiple paths to solve a problem. The more complex the problem, however, the more paths to explore. Programs pare down the number of options using various rules-of-thumb called heuristics.

Symbolic AI excels for many things, from planning routes and proving theorems to playing chess. Some such programs, called “expert systems,” exceed human abilities in tasks such as diagnosing infections. Symbolic AI dominated from the 1960s into the 1990s, pushing aside alternatives such as cybernetics, statistical machine learning, and neural networks.

Allen Newell, like other artificial intelligence researchers, believed that computers playing chess offered an ideal model of intelligent problem-solving. Many of these researchers were themselves avid chess players. This is Newell’s personal chess set. Gift of Paul Allen Newell, 2023.0050

The idea of intelligent computers—“thinking machines”—became a regular feature of science fiction in the 1950s. Astounding Science Fiction published many important science fiction authors. Credit: Used with permission of Dell Magazines

Don’t reinvent the wheel! To create intelligent machines, why not model them on intelligent animals?

Brains are intricate networks of cells called neurons that interconnect and interact in complex ways. Using this as inspiration, researchers attempted to create simplified models called “neural networks” using hardware and software.

Neurophysiologists Warren McCulloch and Walter Pitts laid the groundwork for this effort. In 1943, they presented the first mathematical model of a neural network. In 1958, psychologist Frank Rosenblatt created the Perceptron Mark I, which built that model using electronic hardware.

Neural networks are AI systems inspired by brain structures. They’re built using software or hardware . . . though most today primarily use software.

In living brains, neurons activate in response to signals from other neurons to which they’re connected. In artificial neural networks, a mathematical web of “nodes” is arrayed in grids. Each node holds a single number and is interconnected with other nodes in other grids.

These systems process information (represented by numbers) by passing it through a series of layers (the grids): an input layer, output layer, and a middle layer called the “hidden layer.” Often there are many hidden layers in a neural network.

Every interconnection between nodes is weighted to determine how strongly the value of one node affects another. Initially, these weights are random. The system then alters the weighting an unimaginable number of times until it gets the desired results found in a massive amount of input data. This process, called “training,” creates a neural network able to perform a specific task.

Before there were chatbots . . . there were chatbots. Researchers coined the term in the 1990s, but programs designed to chat with users had appeared decades earlier.

Early computers used punched paper cards as input. In the 1960s, timesharing (multiple users sharing a centralized computer) began changing that. People typed commands on terminals, and computers “typed” back almost instantly. But could people use everyday language instead? In the mid 1960s, Joseph Weizenbaum at MIT tested that with his symbolic AI program, ELIZA.

People typed sentences in plain English. Using set rules, ELIZA generated replies to key words. To make it seem natural, Weizenbaum borrowed a Rogerian psychotherapy technique: repeating someone’s statement as a question. He became alarmed at how users, captivated by the software, invested chats with emotion and meaning even when they knew ELIZA lacked any understanding.

Try out ELIZA on this simulator.

AI developers have long focused on creating computers that can communicate with people the same way we communicate with each other—called Natural Language Processing (NLP). They’ve embraced many different ways to achieve it.

Like AI in general, early NLP efforts mostly relied on symbolic AI. Over time, researchers increasingly used statistical machine learning, neural networks, and deep learning.

Many NLP developers have focused on text, addressing tasks such as translating between languages, checking spelling and grammar, and predictive text that suggests words as we type. Other researchers have concentrated on speech. They apply NLP to speech-to-text transcription and voice synthesis software designed to help computers sound more human.

Today, these various facets of NLP are often interconnected. You can talk or write to chatbots, digital assistants, or smart speakers in different languages and they talk or write back.

Can we really develop intelligent computers? Should that even be our goal? Various critics have posed these and other basic questions. Essentially, they’re asking if AI research is on the right track.

From the 1960s through 1990s, symbolic AI dominated the field. Yet philosopher Hubert Dreyfus, writing in 1972, argued that much of the way humans think is not symbolic. He suggested that the underlying AI model was therefore misguided.

Others, such as ELIZA-creator Joseph Weizenbaum, emphasized the importance of values in decision making—arguing that values sprang only from lived experience. Anthropologist Lucy Suchman similarly stressed the role of human experience. She reasoned that decisions take shape only in the context of complex social interactions . . . which computers lack.

CBS Media Ventures

Star Trek first aired on television from 1966 into 1969. The computer aboard its starship Enterprise primarily interacted with crew members verbally in ordinary language. This vision of a “voice computer” still inspires the work of many computer researchers and entrepreneurs.

Credit: Warner Brothers Entertainment Inc.

HAL 9000, in Stanley Kubrick’s 1968 film 2001: A Space Odyssey, remains a popular depiction of an artificial intelligence system communicating through speech. In the scene above, HAL wins a chess game against astronaut Dave Bowman. Chess playing has long fascinated artificial intelligence researchers. Later in the film, HAL famously becomes homicidal until Bowman disconnects it.

The hold of 2001 on our imaginations is reflected in the ways it has been referenced and appropriated over the decades, as in the 1976 comic book below.

The emotional power of conversation might explain why talking to early chatbots was so captivating. It also may lie behind the extraordinary array of chatty toys that people created in this era, giving the illusion they’re more than simply objects.

Sophisticated chatbots benefit from large pools of data and large numbers of users. Both became readily available through the World Wide Web.

As the World Wide Web mushroomed in the 1990s, search engines to help people find information became a priority. Developers looked to statistical machine learning. That approach requires tremendous amounts of data and examples—which the web offered in abundance.

Statistical AI also facilitates online recommendations and targeted advertising, which are highly profitable. Its commercial potential inspired companies such as Google, Microsoft, Baidu, and others to assemble huge AI research teams. The web also connected AI systems to users worldwide, whose real-world interactions help train systems.

It’s all about finding patterns in data, then making predictions based on those patterns.

More specifically, statistical machine learning uses the mathematics of statistics to identify patterns in samples of data about a specific topic. The system then uses these patterns to create a model that can make general predictions about that topic. Using a statistical model to make fresh predictions is called “inference.”

There are various types of machine learning. In supervised learning, humans categorize and label the training data. In unsupervised learning, systems discover these categories on their own. Reinforcement learning is essentially a trial-and-error approach. Computers attempt a task over and over. After each failure, the system modifies itself to achieve greater success.

The web supercharged the demand for statistical machine learning and provided the vast data it required. Websites used statistical machine learning for searches, product and entertainment recommendations, and for targeted advertising.

Folks thought they were chatting with a person. But Alice was actually A.L.I.C.E.

The Turing Test famously challenged AI systems to fool people, making them believe they were conversing with a human. A.L.I.C.E. (Artificial Linguistic Internet Computer Entity)—a web-based chatbot that debuted in 2001—succeeded repeatedly. Its creators wrote a programming language that used pattern matching to analyze users’ input. A.L.I.C.E.’s rules-based program is open-source and free, letting programmers worldwide continue to enhance it.

A scene from Her. Credit: Warner Brothers Entertainment Inc.

In Her, an award-winning 2013 science-fiction film written and directed by Spike Jonze, a man falls in love with his AI voice assistant. The film explores emotional relationships between people and AI capable of conversation, feeling, learning, and, eventually, superhuman abilities. Jonze was inspired in part by using the web-based A.L.I.C.E. chatbot in the early 2000s.

A.L.I.C.E. screenshot, ca. 2004

Pandorabots CEO Lauren Kunze on ALICE and commercial chatbots.

Try A.L.I.C.E. for yourself here.

Chatbots aren’t alive. They have no gender. Yet we often give them one.

The voices, scripts, or images we choose for chatbots create an illusion of gender. It’s often female, reflecting cultural stereotypes about assistants. Chatbot makers report that more than one in five interactions involve sexual language or content, reflecting perceptions or projections about gender.

Jabberwacky—launched on the web in 1997—had an explicitly male persona based on the developer who created it. Jabberwacky responded to queries by finding patterns in questions people had asked previously, often quoting them verbatim.

Jabbberwacky screenshot, ca. 2011

From the outset, chatbot developers strove to create “intelligent assistants.” These would not only converse with users but also do real work, from summarizing information or accessing computing resources to controlling devices and more. In the 1980s, Apple researchers created a video of an imagined futuristic “Knowledge Navigator” intelligent assistant that inspired a generation of developers.

As chatbots approached this goal in the late 1990s and early 2000s, they increasingly transitioned from research projects to commercial products and services. Text-based chatbots became commonplace on the web, in smartphone apps, and for sales and customer support. Voice-based assistants—such as Alexa, Siri, Cortana, and Google Assistant—launched as commercial products, often integrated with other software and devices.

As AI research gathered steam in the 1950s, neural networks played second fiddle. Maybe third. Symbolic AI, and then statistical machine learning, dominated. That balance started shifting in the 2010s.

Hardware, software, design, and data advances brought a new approach to neural networks called “deep learning” that began outperforming other AI approaches. It got a big boost from powerful computing chips called GPUs (Graphics Processing Units), originally developed for graphics and gaming but soon put to other uses.

In 2006, a team at Stanford under Fei-Fei Li began building ImageNet, a vast database of photos with descriptive labels. They then challenged AI researchers to compete in having their systems identify the contents of each image.

In 2012, AlexNet, a deep learning system from the University of Toronto, trounced its symbolic AI and statistical machine learning rivals. AlexNet’s victory launched a new era dominated by deep learning.

University of Toronto graduate students Ilya Sutskever and Alex Krizhevsky, alongside advisor Geoffrey Hinton, created the trailblazing deep learning system AlexNet. Its extraordinary image recognition capabilities relied on graphics processing unit (GPU) chips that performed all the computations required to train the model. From left to right, Ilya Sutskever, Alex Krizhevsky, and Geoffrey Hinton, 2017. Credit: University of Toronto

This is a rare photograph of the home computer with GPUs used to create AlexNet. Credit: University of Toronto

Early code by Alex Krizhevsky from the AlexNet project, now on Github. Learn more.

Neural networks are loosely inspired by brain structures. They feature interconnected nodes organized into an input layer, an output layer, and a middle layer that links the other two (called the “hidden layer”). Deep learning systems expand on this basic structure, using many hidden layers to process vast amounts of information.

Deep learning models require three key ingredients.

First, they need an enormous amount of data—millions or billions of examples—to train their models. Second, they require tremendous processing power to handle all this training. Finally, they depend on considerable human labor. People prepare data and correct the model’s output, essentially fine tuning the model.

Once the model’s training is complete, using it (called “inference”) requires much less computing power. And much less human involvement.

You’ve probably seen predictive text on smartphones or computers. You type a word, and the device suggests the most-likely next word. Large language models let chatbots do something similar . . . but on a far bigger scale. They’re built on mountains of training data. In general, the more data, the more effective the model.

Earlier neural networks could only process text one word at a time, making it impractical to train large models. Transformers—a mathematical approach to deep learning developed by Google in 2017—process text in parallel, enabling truly gigantic models.

Today’s large language models span billions of network nodes and connections. They’re trained on more than a trillion words—a substantial percentage of all English text readily available on the web. If you wanted to read that many words, clear your calendar. It would take about 50,000 years nonstop.

It talks, but does it understand?

Here’s a big question experts are tackling about today’s chatbots: Do they actually understand the world?

Some say “Yes!” A 2023 paper from Microsoft Research, Sparks of Artificial General Intelligence, argues that these models show real intelligence, reasoning, and knowledge. The mystery is how they pull it off.

Others say “No way!” A 2021 paper, On the Dangers of Stochastic Parrots, by researchers from Google and the University of Washington, suggests these models are more like parrots—repeating phrases they’ve picked up without really understanding them. Think of it as saying something in a language you don’t speak.

Which view is correct? The answer could shape the future of AI.

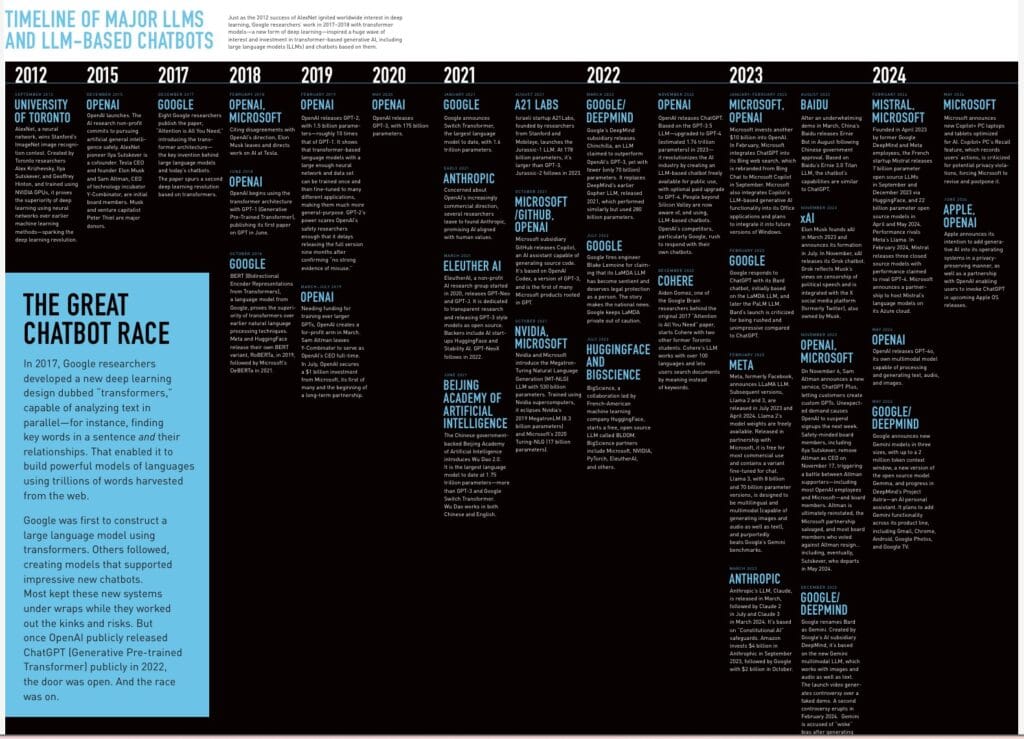

In 2017, Google researchers developed a new deep learning design dubbed “transformers,” capable of analyzing text in parallel—for instance, finding key words in a sentence and their relationships. That enabled it to build powerful models of languages using trillions of words harvested from the web.

Google was first to construct a large language model using transformers. Others followed, creating models that supported impressive new chatbots. Most kept these new systems under wraps while they worked out the kinks and risks. But once OpenAI publicly released ChatGPT (Generative Pre-trained Transformer) publicly in 2022, the door was open. And the race was on.

An explanation of Large Language Models, created by 3Blue1Brown for Chatbots Decoded.

Co-inventor Jacob Uzkoreit on transformers.

Explore the timeline of major LLMs and LLM-based chatbots here.

In the 1950s, symbolic AI seemed most promising. In the late 1990s and early 2000s, vast data available on the web swung the pendulum toward statistical AI and, in the 2010s, to deep learning. Today, large language models, chatbots, and generative AI predominate.

Has the pendulum finished swinging?

Doug Lenat was among those researchers predicting that the future lies in combining symbolic AI with deep learning. At MIT, Josh Tenenbaum uses statistics to model how children learn from just a few examples. Jeff Hawkins, at the AI company Numenta, advocates neurobiology-inspired systems that “reverse engineer” the human brain. AI is still evolving. Multiple paths remain open.

Handheld computing entrepreneur and brain scientist Jeff Hawkins cofounded both Palm and Handspring. He believes that the biological fundamentals of the cerebral cortex—the part of our brain that produces thoughts, perceptions, and language—are essential to designing powerful AI systems, and cofounded the firm Numenta to pursue this vision. Credit: Anastasiia Sapon/The New York Times/Redux

Most people have deep-seated biases absorbed from their surrounding culture. But computers aren’t people. Are they free from prejudice?

Some prominent AI experts, who are also women of color, have highlighted problems, limitations, and biases often embedded in deep learning systems, including large language models and chatbots made with them.

Joy Buolamwini’s research spotlighted racial bias in facial recognition and image classification systems, which frequently misidentify and miscategorize people of color. Timnit Gebru documented biases baked into large language models and chatbots. Safiya Noble was a trailblazer in studying racial bias in AI systems—notably the machine learning underlying Google search.

They and others have shown how complex systems can reflect and perpetuate the preconceptions and biases of the people and societies that created them.

Microsoft created an English language version of Xiaoice called Tay, which used previous conversations to shape its responses. After just 16 hours on Twitter, Tay produced hateful and harmful posts, regurgitating language from users targeting it, and had to be removed. Tay highlighted the need for virtual guardrails to protect users from harmful AI results—and AI from harmful users. Credit: Microsoft

Large language model chatbots excel at juggling words. That’s great for producing text and speech. But people communicate creatively in many other ways too. Researchers have adapted transformer models to produce images, music, videos, computer code, and more. These diverse systems are grouped under the umbrella term “generative AI,” reflecting their ability to generate new content.

Many systems weave together various forms of generative AI. For example, integrating a text-writing system with an image generator yields a text-to-image program. Users can describe a scene in words . . . and the AI will draw it.

Generative AI technology can be used to make “deepfakes”—images, audio, or video impersonating real people or events. While earlier deep learning approaches also could generate text and produce deepfakes, today’s technology is truly impressive. And worrying. What will happen if people feel that they can no longer believe what they read, see, or hear?

Is this aerial photograph of the Golden Gate Park in San Francisco real? The photograph was posted to social media site Reddit, leading many users to suspect it was too beautiful not be to made with generative AI. Fact-checking site Snopes concluded it was real, and the story made news in San Francisco. The original photographer, Eric Thurber, confirmed its authenticity on Reddit. Credit: copyright Eric Thurber

A promotional still for the Western film produced in 1960 for the CBS television show “Tomorrow” on “The Thinking Machine.” MIT’s Doug Ross programmed the TX-0 computer to produce the script for the Western film, an important example of AI text generation before “generative AI.” Credit: MIT Museum Collections

Some generative AI models are trained on huge numbers of images found on the Internet, and are able to generate an astonishing array of new images in response to simple text “prompts.” The images in the slider below were all created with such a generative AI model, called Midjourney. Each image shows us something about how these models work.

There’s a lovely place called Filoli Gardens. There’s also this Filoli Gardens—a fantastical dreamscape by artist Daniel Ambrosi.

To create these pictures, Ambrosi used a neural network trained to classify images. He then shifted it into reverse, back-filling photos of the actual gardens with elements taken from images in the training data. Many of those added bits are biological. Look closely. You may see something looking back at you!

The technique is new, but the motivation behind it isn’t. Artists have always embraced new tools to reimagine our world. As Ambrosi observed: “We are all actively participating in a shared waking dream, a dream that is on the precipice of considerable amplification by rapid advances in artificial intelligence and virtual reality.”

Credit: Photo by Kirsten Tashev

Will AI steal my job? My artwork? Our elections? Or will user-friendly chatbots just make me more efficient and simplify my life?

New technologies spark hopes and fears. Throughout history, machines have sometimes replaced workers. They’ve also lightened our loads. Chatbots fill a gap long missing in technology: the ability to converse with us naturally. They’ll likely take us someplace new. The question is, where?

Many new technologies follow a predictable cycle: from phenomenal to familiar. They debut in a blaze of hope and hype. But once they’re part of our lives, the glow dims. Excitement may even sour to disillusionment as they fall short of our unrealistic expectations.

This cycle has been particularly pronounced for AI. Since the 1950s, researchers have repeatedly forecast earth-shattering transformations. Yet Earth remains unshattered. In the 1980s, developers hailed expert systems using symbolic AI as revolutionary. Instead, the technology is now routine, powering every automated phone system you call.

This cycle is compounded by the “AI effect.“ As soon as researchers reach an AI goal, the technology becomes just another everyday tool. Grammar checking, speech and handwriting recognition, chess-playing: each was once hailed as requiring true intelligence. Once achieved, however, they were dismissed as merely calculations.

Market analysts Gartner Research introduced their “hype cycle” in 1995. It captures the emotional rollercoaster around new tech trends and contains pithy advice for decision makers.

In AI, such hype-fueled enthusiasm was followed by “winters,” when funders pulled back after disappointing results. Successful products got rebranded with a non-AI name, fueling the “AI Effect.”

In spring 2023, the Writers Guild of America (WGA) went on strike, in part to protest the use of generative AI in developing scripts for movies and TV shows. Gift of Writer’s Guild of America West, 2023.0179

As the Industrial Revolution dawned, textile workers calling themselves “Luddites” rioted against the newfangled machines stealing their skilled jobs.

Throughout history, people have used inventions to destroy or downgrade some jobs while creating or augmenting others. Chatbots may follow a similar pattern. Their ability to perform what were once human-only tasks sparks legitimate anxiety. So does their need for low-skilled human help. Yet AI also brings tremendous potential to enrich and improve our jobs.

“Painting is dead,” artist Paul Delaroche supposedly proclaimed. It was 1839. He’d just seen Daguerre’s early photographs.

Technology continually transforms creative processes, altering the relationship between artists and their work. Does AI pose a unique threat—or simply new opportunities? When does technology move from being a tool to a partner? Will artists become more like patrons, commissioning rather than creating work? Generative AI is trained using other people’s work. What does that mean for intellectual property and copyright laws?

Why struggle with a programming problem that someone may already have solved? Chatbots, trained on vast libraries of code available online, can suggest ready-to-use code. That can make programmers vastly more productive. Will it also make them unnecessary?

Early computers were laboriously hand coded with 1s and 0s. Higher-level programming languages streamlined the process. Chatbots are starting to let us perform some programming tasks using everyday language, empowering more people to develop software. What will these changes mean for programming professionals?

Today’s chatbots can generate computer code much as they do text, drawing from training data. AI “pair programmers,” like CoPilot from Microsoft, OpenAI, and online codebase GitHub, suggest code as programmers write, both boosting speed and stoking fears about job security. AI code can also introduce errors that humans must debug. Credit: Github

Education may face the greatest impact from AI. Teachers already use educational chatbots. Some schools employ AI grading systems. And enterprising students are pioneering chatbot cheating!

Chatbots already serve as one-on-one tutors. Will the growth of personalized lessons or custom textbooks enhance coursework? The educational potential of AI is tremendous. So are the perils. Chatbots sometimes make stuff up. And how do teachers know whether a student or chatbot did the homework?

There’s a perpetual arms race between students and teachers. Technology often plays a role.

In the 1970s, educators worried that kids could cheat by sneaking calculators into math class. In the 2000s, they fretted about students taking Wikipedia shortcuts. Both tools are now accepted. Teachers, meanwhile, can use technology to spot plagiarism.

How will AI shift the balance in our effort to help students learn? Will chatbots become champion cheaters? Or just another tool?

As chatbots become more convincing conversationalists, they also become more convincing companions. Communicating naturally through voice or text makes it easier to bond. Maybe too easy?

Computers today connect us. The internet nurtures global communities. Videoconferencing brings people face to face. But chatbots, rather than reinforcing connections, have the potential to replace them.

Websites and apps providing virtual boyfriends and girlfriends—including the ability to buy them gifts!—are upgrading to the latest AI. Several companies, such as Replika, offer customizable AI companions reachable via text or animated avatars. Millions of people today already cherish relationships built on chatbot technology. Does this reflect our growing isolation . . . or contribute to it?

Mental health care is expensive. Trained psychologists are often scarce. Can chatbots fill in?

The roots of chatbot therapy are deep. ELIZA, in the 1960s, was simple but hinted at the possibilities. It used the psychotherapist a psychotherapy technique of repeating a patient’s words as questions. In the 1970s, a program called PARRY modeled paranoid schizophrenia. A team at Stanford University released the Woebot app in 2017, offering digital counseling.

Today, large language models bring enhanced fluency and naturalness to therapy chatbots. But what about privacy? And who is responsible if they give unwise or potentially dangerous advice?

Therapy chatbots like Woebot promise mental health services to the millions worldwide who can’t afford a human therapist, raising ethical, safety, and confidentiality concerns. Clients can chat from anywhere, 24/7, through a friendly conversational app. Credit: Woebot

Most major chatbots come from companies supported by advertising. We’re their product! Will their priorities match our needs? And given chatbots’ tendency to make things up, will they become misinformation machines?

Many questions remain. Should companies pay for the human-generated content that trained their chatbots? Who is liable if bad advice injures someone? But there’s also ample cause for hope. The potential benefits could be immense. The choices we make will help determine which outcomes prevail.

The New York Times sued OpenAI and Microsoft for training ChatGPT on 170 years of copyrighted articles without permission, claiming they “…seek to free-ride on The Times’s massive investment in its journalism.” Credit: Gary Hershorn/Getty Images

Creating cutting-edge chatbots is costly. How will developers pay for them? Or rather, how will we pay for them?

Three funding models support most information technology today: advertising, subscriptions, or pay-per-use. The web relies primarily on advertising. People browse sites, then click on targeted ads. Will chatbots upset that model? Will people click product ads if chatbots can recommend products instead? Alternatively, will chatbots supercharge advertising, befriending visitors then selling them stuff? Will we have to subscribe to chatbots?

The business model for chatbots is in flux. How we ultimately fund them will shape their evolution.

Search engines mix organic results with sponsored results—essentially, ads. But when chatbots answer questions directly, where can ads go? Google and Bing are trying search/chat hybrids to preserve ad revenues. Credit: Jovelle Tamayo for The Washington Post via Getty Images

Chatbot business models remain in flux, but some clear winners have emerged: makers of the modified graphics chips powering modern AI. A year after ChatGPT’s debut, sector leader Nvidia had nearly doubled in value to over $1 trillion.

Chatbots jangle nerves. People worry about misinformation. About copyrights. About jobs. About harmful content. About too much power in too few hands.

When people worry, government regulation often follows.

Earlier information technologies also raised fears—and regulations shaped their evolution. For example, America’s Telecommunications Decency Act decreed that social media companies weren’t liable for user content. That legal shield freed digital platforms to host nearly . . . anything! Different rules would have brought different results.

Today, regulating AI has sparked a white-hot debate. America, China, and the European Union are already taking different approaches. Stay tuned!

Millions of people—many in developing countries and even in European prisons!—teach AI to accurately recognize everything from earlobes to irony. They may spend 12 hours a day clicking on images or words. These often underpaid “taskers” or “clickworkers” are among AI’s hidden human costs.

AI has an environmental cost too. Chatbot data is stored in the “cloud.” But the cloud is actually here on Earth: colossal data centers with seemingly insatiable appetites for energy.

The datacenters that chatbots call home consume more electricity than a small country, plus substantial quantities of water both for cooling and for making that electricity. A datacenter’s cooling towers can use millions of gallons daily. Credit: Google

Behind every line of chatbot poetry are often low paid “clickworkers” making sure AI can identify everything from elbows in crowds to Finnish verb endings, as well as curbing inappropriate output. Many work in the developing world for ever-changing contractors. Credit: New York Magazine

Where will chatbots be 10 years from now? How will they change our lives?

There are predictions aplenty. But we’re in the midst of a technological explosion. New systems, capabilities, and players continually arise. Leaders worldwide are debating how to regulate AI. Companies are testing potential uses. We won’t know where we’re headed until the dust settles. Nonetheless, we’ve asked experts to share their thoughts on what’s most important to understand about chatbots, and what might lie ahead.

Can we trust AI? Expert perspectives.

Bubble wrap was invented as wallpaper and insulation. Only later did folks start packing breakables with it. The Segway, meanwhile, was predicted to be “bigger than the internet.” Both examples remind us that an invention’s impact ultimately depends on how regular people end up using it.

Futurists breathlessly predict the future of chatbots and AI. Yet your opinion may matter more. Will it be transformative? Dangerous? Or will it become just another convenience, barely noticed? The path ahead partly depends on users like you.

How do you think AI will change the way we work and live in the next decade? Share your thoughts here.

Onsite visitors can share their views on the meaning and future of chatbots on the “talk-back” wall. Below are some of their ideas.

All of the videos that appear in the physical Chatbots Decoded exhibit can be found in this YouTube playlist.

All of the videos that appear in the physical Chatbots Decoded exhibit can be found in this YouTube playlist.

All of the extended interviews with AI experts, critics, and users conducted for Chatbots Decoded can be found in this YouTube playlist.

Your Feedback Powers Our Next Exhibit.

Share it here.

Kirsten Tashev, Lead exhibit director

David C. Brock, Lead exhibit curator

Hansen Hsu, Exhibit curator

Marc Weber, Exhibit curator

Jon Plutte, Lead media creator

Justin Adkinson, Media production

Max Plutte, Media production

Michelle Winn, Exhibit content manager

Aurora Tucker, Registrar & artifact mount specialist

Massimo Petrozzi, Interview coordination

Heidi Hackford, Exhibit editing and online exhibit development

Jennifer Alexander, Building infrastructure

Van Sickle & Rolleri, Exhibition design

Julio Martinez, Studio 1500, Graphic design

Paul Rosenthal, Exhibit writer

Mark Horton Architecture (MHA), Architect-of-Record

Exhibit Concepts Inc., Exhibition fabricators

Bowen Technovation, Media Integration

3Blue1Brown, Explainer / Animator