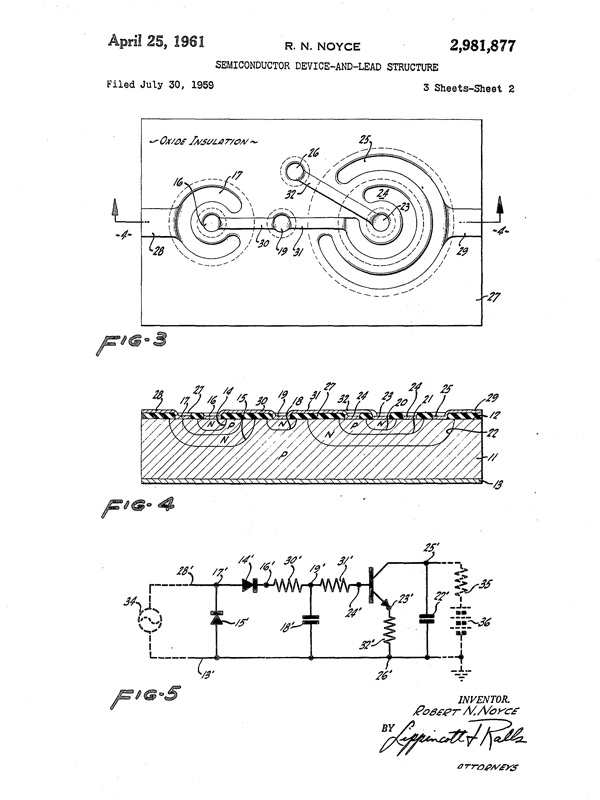

Figures from Robert Noyce’s planar IC patent, “Semiconductor device-and-lead structure”

Humans have been creating tools since before recorded history. For many centuries, most tools served to amplify the power of the human body. We call the period of their greatest flowering the Industrial Revolution.

In the last 150 years we have turned to inventing tools that amplify the human mind, and by doing so we are creating the Information Revolution. At its core, of course, is computing.

“Computer” was once a job title. Computers were people: men and women sitting at office desks performing calculations by hand, or with mechanical calculators. The work was repetitive, slow, and boring. The results were often unreliable.

In the mid-1800s, the brilliant but irascible Victorian scientist Charles Babbage contemplated an error-filled book of navigation tables and famously exclaimed, “I wish to God these calculations had been executed by steam!” Babbage designed his Difference Engine to calculate without errors, and then, astoundingly, designed the Analytical Engine, a completely programmable computer that we would recognize as such today. Unfortunately he failed to build either of those machines.

Automatic computation would have to wait another hundred years. That time is now.

The computer is one of our greatest technological inventions. Its impact is, or will be, judged comparable to the wheel, the steam engine, and the printing press. But here’s the magic that makes it special: it isn’t designed to do a specific thing. It can do anything. It is a universal machine.

Software turns these universal machines into a network of ATMs, the World Wide Web, mobile phones, computers that model the universe, airplane simulators, controllers of electrical grids and communications networks, creators of films that bring the real and the imaginary to life, and implants that save lives. The only thing these technological miracles have in common is that they are all computers.

We are privileged to have lived through the time when computers became ubiquitous. Few other inventions have grown and spread at that rate, or have improved as quickly. In the span of two generations, computers have metamorphosed from enormous, slow, expensive machines to small, powerful, multi-purpose devices that are inseparably woven into our lives.

A “mainframe” was a computer that filled a room, weighed many tons, used prodigious amounts of power, and took hours or days to perform most tasks. A computer thousands of times more powerful than yesterday’s mainframe now fits into a pill, along with a camera and a tiny flashlight. Swallow it with a sip of water, and the “pill” can beam a thousand pictures and megabytes of biomedical data from your vital organs to a computer. Your doctor can now see, not just guess, why your stomach hurts.

The benefits are clear. But why look backward? Shouldn’t we focus on tomorrow?

History places us in time. The computer has altered the human experience, and changed the way we work, what we do at play, and even how we think. A hundred years from now, generations whose lives have been unalterably changed by the impact of automating computing will wonder how it all happened—and who made it happen. If we lose that history, we lose our cultural heritage.

Time is our enemy. The pace of change, and our rush to reach out for tomorrow, means that the story of yesterday’s breakthroughs is easily lost.

Compared to historians in other fields, we have an advantage: our subject is new, and many of our pioneers are still alive. Imagine if someone had done a videotaped interview of Michelangelo just after he painted the Sistine Chapel. We can do that. Generations from now, the thoughts, memories, and voices of those at the dawn of computing will be as valuable.

But we also have a disadvantage: history is easier to write when the participants are dead and will not contest your version. For us, fierce disagreements rage among people who were there about who did what, who did it when, and who did it first. There are monumental ego clashes and titanic grudges. But that’s fine, because it creates a rich goldmine of information that we, and historians who come after us, can study. Nobody said history is supposed to be easy.

It’s important to preserve the “why” and the “how,” not just the “what.” Modern computing is the result of thousands of human minds working simultaneously on solving problems. It’s a form of parallel processing, a strategy we later borrowed for computers. Ideas combine in unexpected ways as people build on each other’s work.

Even simple historical concepts aren’t simple. What’s an invention? Breakthrough ideas sometimes seem to be “in the air” and everyone knows it. Take the integrated circuit. At least two teams of people invented it, and each produced a working model. They were working thousands of miles apart. They’d never met. It was “in the air.”

Often the process and the result are accidental. “I wasn’t trying to invent an integrated circuit,” Bob Noyce, co-inventor of the integrated circuit, was quoted as saying about the breakthrough. “I was trying to solve a production problem.” The history of computing is the history of open, inquiring minds solving big, intractable problems—even if sometimes they weren’t trying to.

The most important reason to preserve the history of computing is to help create the future. As a young entrepreneur, the story goes, Steve Jobs asked Noyce for advice. Noyce is reported to have told him that “You can’t really understand what’s going on now unless you understand what came before.”

Technology doesn’t run just on venture capital. It runs on adventurous ideas. How an idea comes to life and changes the world is a phenomenon worth studying, preserving, and presenting to future generations as both a model and an inspiration.

Besides, computer history can be fun. An elegantly designed classic machine or a well-written software program embodies a kind of truth and beauty that gives the qualified, appreciative viewer an aesthetic thrill. Steve Wozniak’s hand-built motherboard for the Apple I is a beautiful painting. The source code of Apple’s MacPaint program is poetry: compressed, clear, with all parts relating to the whole. As Albert Einstein observed, “The best scientists are also artists.”

Engineers have applied incredible creativity to solve the knotty problems of computing. Some of their ideas worked. Some didn’t. That’s more than OK; it’s worth celebrating.

Silicon Valley understands that innovation thrives when it has a healthy relationship with failure. (“If at first you don’t succeed…”) Technical innovation is lumpy. It’s non-linear. Long periods of the doldrums are smashed by bursts of insight and creativity. And, like artists, successful engineers are open to the happy accident.

In other cultures, failure can be shameful. Business failure can even send you to prison. But here, failure is viewed as a possible prelude to success. Many great technology breakthroughs are inspired by crazy ideas that bombed. We need to study failures, and learn from them.

Given the impact of computing on the human experience, it’s surprising that the Computer History Museum is one of very few institutions devoted to the subject.

There are hundreds of aircraft, railroad, and automobile museums. There are only a handful of computer museums and archives. It’s difficult to say why. Maybe the field is too new to be considered history.

We are proud of the leading role the Computer History Museum has taken in preserving the history of computing. We hope others will join us.

The kernel of our collection formed in the 1970s, when Ken Olsen of the Digital Equipment Corporation rescued sections of MIT’s Whirlwind mainframe from the scrap heap. He tried to find a home for this important computer. No institution wanted it. So he kept it and began to build his own collection around it.

Gordon Bell, also at DEC, joined the effort and added his own collection. Gordon’s wife, Gwen, attacked with gusto the task of building an institution around them. They saw, as others did not, that these early machines were important historical artifacts—treasures—that rank with Gutenberg’s press. Without Olsen and the Bells, many of the most important objects in our collection would have been lost forever.

Bob Noyce would have understood the errand we are on. Leslie Berlin’s book The Man Behind The Microchip tells the story of Noyce’s comments at a family gathering in 1972. He held up a thin silicon wafer etched with microprocessors and said, “This is going to change the world. It’s going to revolutionize your home. In your own house, you’ll all have computers. You will have access to all sorts of information. You won’t need money any more. Everything will happen electronically.”

And it is. We are living in the future he predicted.

The Computer History Museum wants to preserve not just rare and important artifacts and the stories of what happened, but also the stories of what mattered, and why. They are stories of heretics and rebels, dreamers and pragmatists, capitalists and iconoclasts—and the stories of their amazing achievements. They are stories of computing’s Golden Age, and its ongoing impact on all of us. It is an age that may have just begun.