We take history seriously at the Computer History Museum. It’s our middle name, after all. But it’s not easy history to do, for several reasons.

ENIAC and the Origins of Software

The history of software, into which CHM is placing increasing effort, is particularly hard. I’d like to give one example in some detail: the relationship of ENIAC to the origins of software.

There has been a conventional wisdom about when what we now call “software” began to run. Many textbooks and websites, including Wikipedia and that of the University of Manchester, record June 21, 1948 as “the birth of the stored-program digital computer” because the “Manchester Baby” ran a 17-line program on that day.

The cover of ENIAC in Action, by Thomas Haigh, Mark Priestley, and Crispin Rope.

But it’s not that simple. A recent book by computer historian Tom Haigh and colleagues Mark Priestley and Crispin Rope explores the conversion of ENIAC into what they prefer to call a “modern code paradigm” computer. Based on machine logs and handwritten notes, they have discovered that a complex program began running on ENIAC on April 12, 1948.

ENIAC – the Electronic Numerical Integrator and Computer – was a room-sized machine with over 17,000 vacuum tubes. It started running at the end of 1945, and for five years it was the only fully electronic computer running in the US. Estimates are that by the time it was retired in 1955, it had done more calculations than all human beings in all of history.

The initial design of the ENIAC did not use anything like the software we know today. It was basically an assembly of “functional units” that were wired together in a particular way for each new problem. If you wanted to do a multiplication after an addition, you would run a wire from the adder to the multiplier. Control was highly distributed, and the machine could do many things in parallel. But designing and setting up new calculations was difficult and time-consuming.

Even before ENIAC was finished, engineers realized that there was a much better, centralized way to control such a complex machine: using coded instructions stored in memory and “executed” in sequence. Control operations, such as looping and branching, could be accomplished simply by “jumping” out of order to fetch the next instruction from a different memory address. We now call this a “computer program.”
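To make the idea concrete, here is a minimal sketch of a machine that fetches coded instructions from memory, executes them in sequence, and loops by jumping back to an earlier address. The instruction names (`LOAD`, `ADD`, `JUMP_IF_LT`, `HALT`) and the single accumulator are assumptions invented for illustration; they are not the instruction set of ENIAC or of any other historical machine.

```python
# A toy stored-program machine. The instruction set is hypothetical,
# chosen only to illustrate sequential execution and jumping.
def run(memory):
    """Fetch and execute instructions from `memory` until HALT; return the accumulator."""
    acc = 0  # single accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        op, arg = memory[pc]  # fetch the instruction at the current address
        pc += 1               # by default, continue with the next address
        if op == "LOAD":
            acc = arg
        elif op == "ADD":
            acc += arg
        elif op == "JUMP_IF_LT":  # arg is (limit, target address)
            limit, target = arg
            if acc < limit:
                pc = target       # "jump" out of order
        elif op == "HALT":
            return acc
        else:
            raise ValueError(f"unknown instruction {op!r} at address {pc - 1}")


program = [
    ("LOAD", 0),              # address 0: start the accumulator at zero
    ("ADD", 3),               # address 1: add three
    ("JUMP_IF_LT", (10, 1)),  # address 2: loop back to address 1 while acc < 10
    ("HALT", None),           # address 3: stop
]
result = run(program)
```

The loop here is nothing more than a conditional change to the program counter, which is the sense in which looping and branching come down to fetching the next instruction from a different address.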

The origin of this “lightbulb over the head” idea is hotly contested. Physicist and mathematician John von Neumann was the first to describe it in an incomplete document that was widely distributed in June of 1945. But he had been discussing related ideas with many people, including ENIAC engineers John Mauchly and Presper Eckert. No one knows whose idea it was, how many people thought of it “first,” or what prior work might have influenced them. For a discussion of how it was different from earlier ideas, see Tom Haigh’s recent article “Where Code Comes From”.

Regardless of who deserves the credit, it was quickly adopted as the right way to build computers. Even the ENIAC, starting in July of 1947, was converted to use this scheme. Because ENIAC had very little writeable electronic memory, the coded instructions were stored in “function tables,” banks of 10-position switches that had previously been used to store pre-computed numerical constants. It was the modified ENIAC that ran a computer program stored in switches in April of 1948.

ENIAC vs The Baby

So, what’s the importance of this new historical discovery? That it ran 10 weeks before the Manchester Baby? That it happened in the US, not the UK? No. Those are interesting but relatively insignificant facts. Look instead at the substantial differences between the two events.

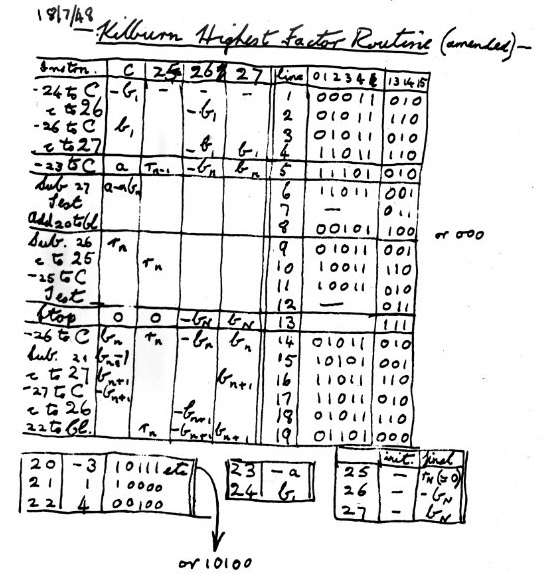

The original demo program of the Manchester Baby.

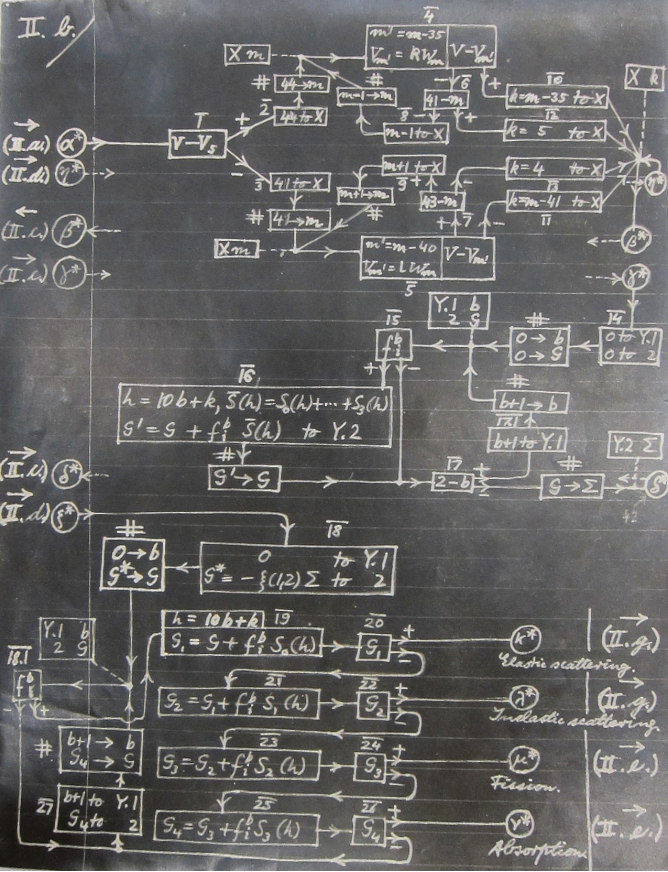

A diagram of the original demo program of the ENIAC.

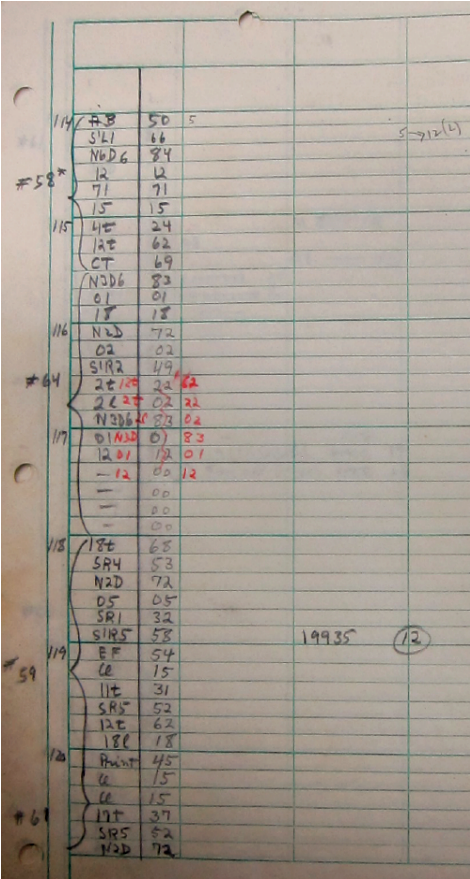

A table of the ENIAC's addresses.

John and Klara Dan von Neumann.

ENIAC’s April feat was an accomplishment, but there’s at least one good reason why calling it a “first” is problematical: the program was stored in what we now call “read-only memory”, or ROM. Manchester’s, on the other hand, was stored in the same memory used for data. That was the design that von Neumann had described, and is a characteristic of what is often called the “von Neumann architecture”.
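The difference between the two arrangements can be sketched with a toy machine. Everything here is an assumption made for illustration: the instruction names (`SET`, `NOP`, `HALT`) and the function `run` are hypothetical and model neither ENIAC's function tables nor the Baby's memory. The same four-cell program is run twice, once from a memory it shares with its data and once from a read-only store.

```python
# Hypothetical toy machine; instruction names are invented for this sketch.
def run(code, data):
    """Run `code`, letting SET instructions write into `data`.

    Passing the same list for both arguments models one shared memory.
    Passing a tuple as `code` models a read-only program store.
    Returns the list of operation names that were executed.
    """
    pc = 0
    trace = []
    while True:
        instr = code[pc]
        pc += 1
        trace.append(instr[0])
        if instr[0] == "SET":      # ("SET", address, new_cell)
            _, address, new_cell = instr
            data[address] = new_cell
        elif instr[0] == "HALT":
            return trace
        # any other cell, such as ("NOP",), does nothing


def make_program():
    return [
        ("SET", 1, ("HALT",)),  # address 0: overwrite the cell at address 1
        ("NOP",),               # address 1
        ("NOP",),               # address 2
        ("HALT",),              # address 3
    ]


# Shared memory: the write lands on the program itself and changes what runs next.
shared = make_program()
shared_trace = run(shared, shared)

# Read-only program store: the same write lands in separate data memory.
rom = tuple(make_program())
ram = [0, 0, 0, 0]
rom_trace = run(rom, ram)
```

In the shared case the program halts early because it has rewritten its own second instruction. In the read-only case the write goes to data memory and the program runs to its original end.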

Does that matter? Was the modified ENIAC less of a computer than the Manchester Baby because its program was in ROM and could not be changed by the computer? Historian Doron Swade has asked many computer experts about the importance of programs being in memory. He observes that “no one challenged the status of the stored program as the defining feature of the modern digital electronic computer”, but “we struggle when required to articulate its significance in simple terms, and the apparent mix of principle and practice frustrates clarity.”1

Look at it this way: many modern microprocessors, especially small ones for embedded control, have their programs in ROM. If they are modern-style computers, then so was the modified ENIAC. That’s my opinion, anyway; you are free to add or subtract your own adjectives and reach a different conclusion.

Haigh’s new book is a refreshing change in the academic treatment of computing history. In the 1970s and 1980s, historical accounts were frequently written by practitioners “who were there”, and tended to focus on technical details. In the 1990s and 2000s, professional historians shifted the discourse primarily to business, political, and social aspects. The pendulum may now be swinging back to a welcome midpoint; the authors describe their book as “an experiment in the re-integration of technical detail into history.”

This is great new history. It’s the kind we encourage and the kind we do: history that’s complex, nuanced, and not static. If this is what it means to rewrite history, let’s keep doing it.