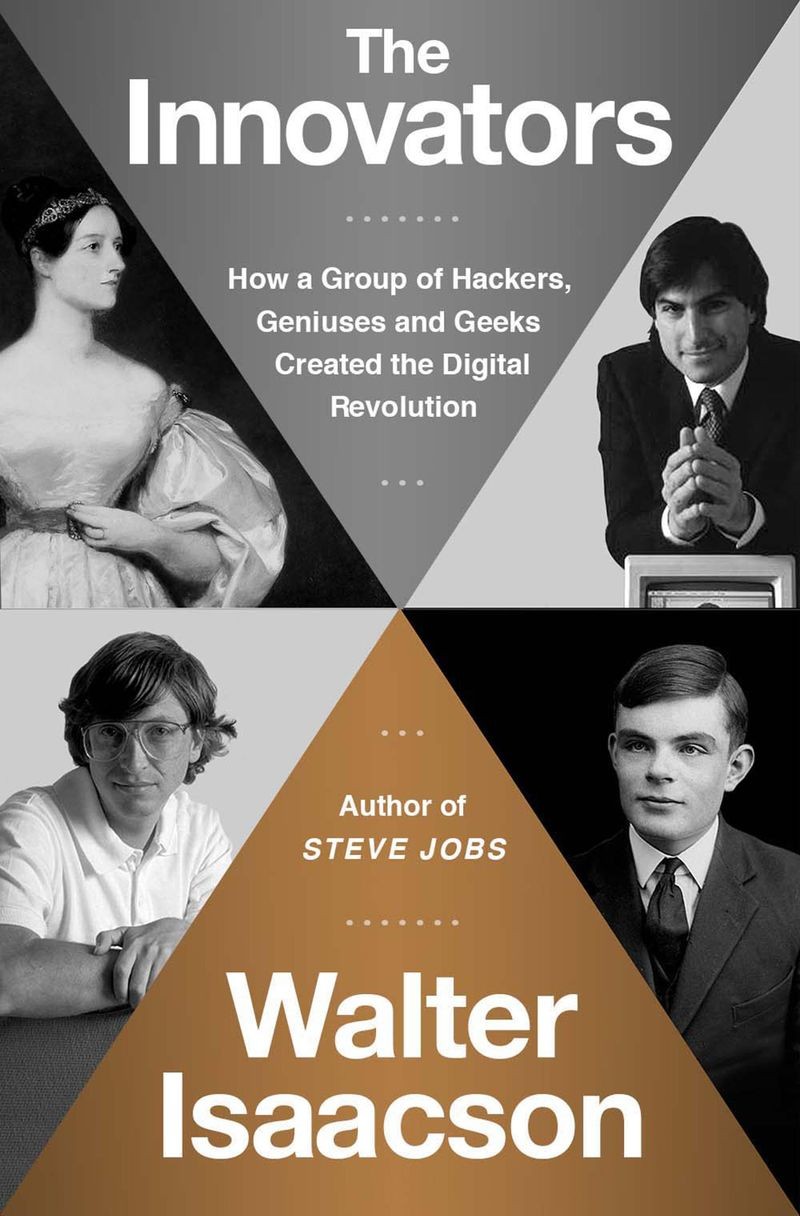

The Innovators by Walter Isaacson

In the closing pages of his epic 2007 biography “Einstein: His Life and Universe,” author Walter Isaacson observed that Albert Einstein was not only a scientist who sought a unified theory that could explain the cosmos but also a humanist who believed that freedom was the lifeblood of creativity.

“Perhaps the most important aspect of his personality,” Isaacson wrote, “was his willingness to be a non-conformist.” Isaacson cites a foreword that Einstein wrote, late in his life, to a new edition of Galileo. “The theme that I recognize in Galileo’s work,” Einstein wrote, “is the passionate fight against any kind of dogma based on authority.”

“The world has seen a lot of impudent geniuses,” Isaacson concludes.

In his latest work of history, “The Innovators: How a Group of Hackers, Geniuses and Geeks Created the Digital Revolution,” Isaacson returns to these themes. Impudence, yes. Genius, for sure. Yet “The Innovators” veers radically away from Isaacson’s exhaustive study of the lone brilliance of Einstein, not to mention the petulant independence captured in his award-winning biography of Steve Jobs. More about that in a moment.

First, the obvious: Anyone associated with a place called the Computer History Museum should be thrilled that “The Innovators” has emerged from Isaacson’s desktop. He has undertaken as sweeping, entertaining and occasionally even intimate a history of computing as any curious reader will ever find in one stop. The table of contents alone is a handy set of Cliff’s Notes of many of the milestones of the digital age: programming, the transistor, the microchip, video games, the internet, the personal computer, software, online and the web. It is a rich roadmap.

However, the main hashtag, as it were, is embedded in the subtitle of “The Innovators”: #group. “The Innovators” is a tale of teams—teams led by some extraordinary and brilliant mavericks, to be sure, but teams nonetheless—who have steadily shocked the world during the last 75 years (or more) by imagining a new future and creating a present day to enable it. In that regard, “The Innovators” departs from Isaacson’s recent attention to the solitary game-changer.

Isaacson has become one of the leading biographers of our age. He has tackled not only Einstein and Jobs but also Benjamin Franklin with aplomb—and all of that after he undertook a then-definitive biography of Henry Kissinger in 1992. Yet as Isaacson reveals early on, his choice to focus on teams is not only personally satisfying (“I wanted to step away from doing biographies, which tend to emphasize the role of singular individuals”) but also central to his main point about how most of the authentic revolutions of the digital age came about. He attributes the innovations of “The Innovators” to “collaborative creativity,” a phrase he coins early on and which becomes the book’s central axis.

“The tale of teamwork is important because we don’t often focus on how central that skill is to innovation,” Isaacson writes. “Collaborative creativity is important in understanding how today’s technology revolution was fashioned. It can also be more interesting.”

Isaacson builds his creativity narrative on several important pillars. The first is a prodigious amount of research. The second is an expert journalist’s eye for discerning and illuminating pivotal moments where a fair amount of chaos reigns. In this case, the chaos mainly stems from the fact that many of the history-makers themselves, complete with their own views and biases, are still around. Isaacson is one of the first outsiders to wade into this thicket with such instinct, ambition, self-confidence—and success. The third is an accomplished biographer’s skill in distilling all that he found into a set of compelling storylines that are people-centric without skimping on technical detail. “The Innovators” is therefore friendly to expert and novice alike.

The sum total is a book that explores in depth many key ideas and figures in computing’s history, and does so with humanity. Time after time, Isaacson exhibits a clear understanding of the subtlety required when so many people are still seeking and receiving credit in real time for inventions that have changed the world forever. Isaacson is painting on a canvas that is not yet dry and won’t be for a long time. The major success of the book rests on his fundamental understanding of those challenges, and on the fact that he gets it right.

I first encountered Isaacson’s work ethic as a researcher, oddly enough, on Super Bowl Sunday two years ago. He was in town and wanted to come by the Museum to stroll together through our major exhibition, “Revolution: The First 2000 Years of Computing.” We jokingly referred to it as Cowboys and Saints fans looking for an alternative. When he called to schedule the visit, he also asked me if I knew how he might contact Bob Taylor and Larry Roberts—two landmark figures of the internet era. When we met in the Museum’s lobby, I gave him not only a tip on how to reach them but also two large, printed transcripts of our oral histories of Taylor and Roberts.

“Oh you didn’t need to do that,” he said. “I’ve already read them. I’ve read at least 200 of your oral histories, in fact.” At that point, Isaacson was more than 13 years into the project (“gathering string,” as he called it). As we walked and talked for a couple of hours together in “Revolution,” which is itself a major collection of the same themes in a similar intellectual framework, I discovered how deep and clear his own interpretive viewpoint had become, and how broadly he commanded the sweep of events. He reinforced his depth of knowledge across this incredibly diverse range of people and issues when he spoke at the Museum in October 2014. “Revolution” and “The Innovators” are, in that regard, a bit like twins separated at birth.

Isaacson’s journalistic eye is, of course, extraordinarily well developed. He spent decades at the pinnacle of the field as managing editor of Time and as chairman of CNN. The corresponding skill he brings to illuminating pivotal ideas hatched by non-conforming geniuses is clear from the start. He might easily have chosen to begin with the big bang of the planar integrated circuit birthed in 1959 by Silicon Valley’s legendary “Traitorous Eight,” or perhaps to revisit Jobs’s launch of the Macintosh in 1984. (Our increasingly young visitors provide lots of sobering insight into how “old” the Mac can seem; one was overheard saying to an adult while looking at the display of an original 128K Macintosh, “Oh look! It’s a Macintosh! My grandmother had one of those!”) Instead, Isaacson winds the tape back about 170 years and opens “The Innovators” with the story of Charles Babbage and Ada Lovelace—using the Babbage-Lovelace partnership both as an early illustration of his “collaborative creativity” thesis and as a poignant reminder of the challenges faced by the impudent innovator.

Until now, mostly, the main embattled genius in the narrative has been Charles Babbage—vindicated a century and a half later by the successful construction of his landmark Difference Engine No. 2 (which operates daily at the Museum), but frustrated and largely bankrupted by his failed pursuit of it during his lifetime. To be sure, Isaacson covers this story in detail.

Portrait of Ada Lovelace by British painter Margaret Sarah Carpenter, 1836

The crux of the story as Isaacson elaborates on it, however, is revealed in his accurate reporting of the relatively unsung contribution and achievements of Lovelace. Isaacson brings her into the foreground and uses her as a case study on several levels: a creative, if not brilliant, theoretical mathematician who served as Babbage’s muse and helpmate; an insightful, emerging engineer whose aptitude was utterly stifled in Victorian England because she was a woman; a gifted technical writer denied the opportunity to publish in scholarly journals under her own name, again because she was a woman; and an impudent maverick who did in fact skirt the system by publishing a detailed set of “Notes” to her translation of Menabrea’s October 1842 essay on Babbage’s Analytical Engine in an 1843 volume of Scientific Memoirs (signing them only “A.A.L.” for Augusta Ada Lovelace).

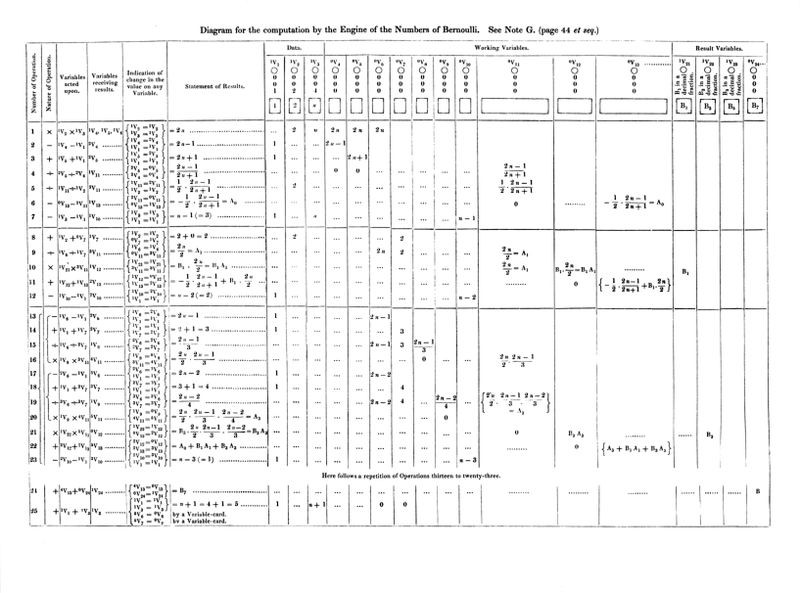

Here the Lovelace story gets interesting to those being exposed to it for the first time. As Isaacson points out, among its other achievements, Lovelace’s “Note G” to Menabrea’s essay works out a method by which Bernoulli numbers could be generated by Babbage’s theoretical Analytical Engine. Today that “method” would be known by another name: an algorithm, an essential component of modern-day software. While Babbage helped Lovelace perfect it, Isaacson quotes liberally from Lovelace’s letters showing her to be “deeply immersed in the details,” and ultimately responsible. Later this year, when more of Lovelace’s rarely seen papers are made accessible by the Bodleian Library at Oxford, our insights in this area will surely grow (just as they took root in part through the scholarship of former Museum curator Doron Swade).

Bernoulli number 'algorithm', Ada Lovelace, 1843

At this point, the third pillar of Isaacson’s work emerges, and shines through: the practiced historian’s eye for dispassionate synthesis of competing ideas. Ada Lovelace may be, simultaneously, the most under- and over-appreciated figure in the history of computing. As Isaacson notes, she has been wrongly dismissed by some historians as flighty, delusional and a crank. In other circles, she is heroically labeled as the world’s first programmer (most recently by the official White House blog). Here I’m going to quote Isaacson’s assessment of Lovelace liberally, and for a reason:

As he concludes, Ada Lovelace’s contributions were inspirational and profound. She should be celebrated for the pioneer she was—and, most of all, she should be celebrated for the icon she has become in a world that needs more iconic, technically skilled women who are smart and ambitious, and who are turning the worlds of engineering, computing and other sciences upside down. See Elizabeth Holmes and her Silicon Valley-based company, Theranos, for example.

If the relationship within the Babbage-Lovelace “team” seems relatively prosaic by Victorian standards, hang on. Isaacson also steers the narrative through some of the more difficult and tempestuous partnerships of our age. In fact, for such a team-based narrative, “The Innovators” overflows with rivalry, ego, sharp elbows and conflict. The most gut-wrenching of these stories centers on the paranoid selfishness of William Shockley, winner of the Nobel Prize with John Bardeen and Walter Brattain for their invention, in 1947, of the transistor.

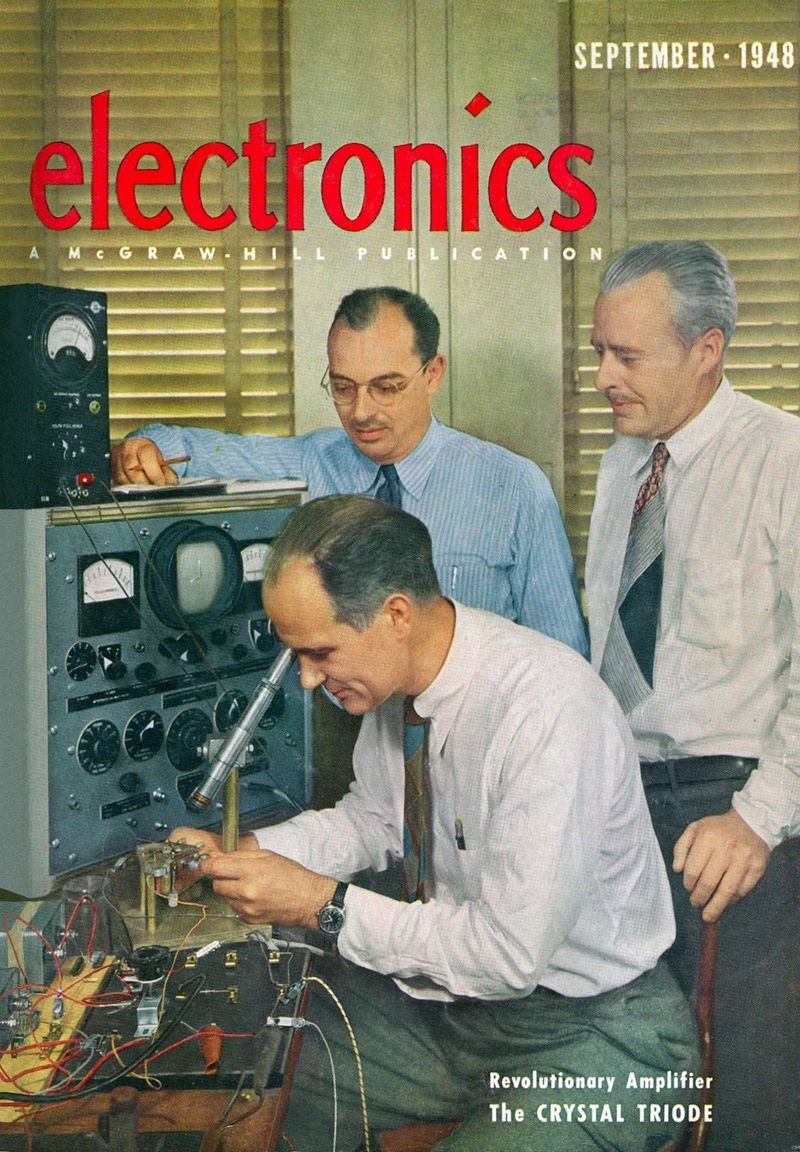

On the one hand, the triangular relationship among Shockley, Bardeen and Brattain has the essential elements of innovation that Isaacson identifies repeatedly: the theorist and the experimentalists working side by side, in a symbiotic relationship, bouncing theories and prototypes back and forth, failing many times before finding a breakthrough answer. Indeed, the genius Shockley and the skilled and workmanlike Bardeen and Brattain produced their science at Bell Labs, probably the ideal environment in the first half of the 20th century for collegial technical innovation. On the other hand, what ultimately tore them apart, and indeed what vexed Shockley throughout his life, was his inability to share the credit.

Bardeen & Brattain at Bell Telephone Laboratories, 1948

Not long after Bardeen and Brattain demonstrated in December 1947 that Shockley’s theory, together with their materials science, could produce a working transistor, Shockley wrote, “My emotions were somewhat conflicted. My elation with the group’s success was tempered by not being one of the inventors.” When the press later gathered at Bell Labs to snap news photographs of the three pioneers simulating their work at their lab bench, Shockley wedged himself, at the last second, in front of Bardeen and Brattain. He sat on Brattain’s stool and peered into Brattain’s microscope. The effect was to position Shockley front and center in one of the most legendary photos in the history of computing. “Boy, Walter (Brattain) hates this picture,” Bardeen later said. “That’s Walter’s equipment and our experiment.”

The more satisfying teamwork stories in “The Innovators” are found when similarly hard-charging, single-minded (and, yes, impudent) geniuses manage to sort out their differences and get on with it together. The most compelling example, and the longest chapter in the book, deals with the roller-coaster relationship between Bill Gates and Paul Allen in the founding and early development of Microsoft.

The chapter is entitled simply “Software.” In it Isaacson carefully pieces together the boyhood nerd-friendship that developed between Gates and Allen at the Lakeside School in Seattle. Gates was brash, harsh, brilliant—impudent. So much so, in fact, that when his mother turned to a psychologist for help raising her rambunctious young son, she received this advice: “You’re going to lose. You had better just adjust to it because there’s no use trying to beat him.” Allen, two years older than Gates and driven in his own way, but with a slightly milder manner and a more wide-ranging mind, had a similar observation: “He was really competitive. He wanted to show you how smart he was. And he was really, really persistent.”

Isaacson traces their on-again, off-again relationship, mainly through the history of a programming team they co-founded and anchored and that became known as the Lakeside Programming Group. They built a business, remarkable at that time given the boys’ ages, on service contracts for small companies that needed data processing software. Gates and Allen alternated as the alpha dogs. For a while neither could win the upper hand, once and for all, as the acknowledged leader. The fateful moment came when Allen tried a power play and froze Gates out of a contract, then discovered that he and the other members of the group couldn’t finish the job without Gates. At this point in the book, Isaacson reports a remarkable exchange between Gates and the group that settled, with finality, who stood where in the team. He quotes Gates: “That’s when I said, ‘Okay. But I’m going to be in charge. And I’ll get used to being in charge, and it’ll be hard to deal with me from now on unless I’m in charge. If you put me in charge, I’m in charge of this and anything else we do.’”

Allen accepted his role, and less than a decade later Microsoft was born. That sorting out of the roster for Team Gates persisted for 40 years.

There are two or three dozen junctures, at a minimum, where Isaacson is forced to make insightful judgments about roles and responsibilities in landmark innovations where the actual events are in dispute. When called upon to do so, Isaacson strikes a careful, informed balance among competing accounts. He excels at telling these complex human stories and getting the subtleties right. This talent is crucial to the book, for the human stories are at the center of all successful teams—and teamwork, of course, is his central theme. Where the “hard cases” come up—and there are many—Isaacson is quite expert at sorting them out: the collegial but spiky relationships between semiconductor pioneers (and ex-Shockley employees) Bob Noyce, Andy Grove and Gordon Moore; the sophomoric jockeying for position of Allen and Gates, a pointed feud that persists to this day; the uneasy relationship between internet pioneers Taylor and Roberts, and the further complications represented by fellow pioneer Len Kleinrock; the open warfare between Wikipedia’s Jimmy Wales and Larry Sanger. These larger-than-life personalities make only cameo appearances here but are central stars in Isaacson’s narrative.

My colleague Kirsten Tashev, the executive in charge of all of our exhibition and collections work, has wisely observed that the trick in an exhibit is not just what to put in, but also what to leave out. We therefore understand first-hand that Isaacson had to make hard choices with “The Innovators.” I offer as an observation, and not necessarily a critique, how much I might have liked his take on Seymour Cray, and particularly Cray’s epic battles with IBM and other competitors in supercomputing. Talk about a non-conforming genius who built great teams. I also missed getting his point of view on the social and technological implications of Facebook, Twitter and the other world-shrinking applications—and their remarkably young leaders—that seem to hold such promise for the future. Clearly those will have to wait for another day.

It would be tremendously self-serving to say that “The Innovators” brings luster and prestige to the field of computer history, and of course it does. But the book is about much, much more. It defines why capturing the history of computing is important in the first place: because it reflects the trials and success of human imagination, where the humanities and technology intersect, and impudent geniuses harbor the notion that ideas can change the world, because they have.