Today, AI based on deep learning and neural networks is taking the world by storm. However, many of the algorithms that guide our web searches and driving directions today are much older, rooted in what people now call “Good, Old Fashioned AI,” also known as “symbolic” AI, which was the primary form of AI from the 1950s through the 1990s. The eclipse of symbolic AI by deep learning is illustrated by two major milestones in AI history, each featuring the world’s top human player being beaten in a game by an AI system.

World Champion Garry Kasparov beat IBM’s Deep Blue in 1996, but was defeated by it in 1997 in a score of 4 games to 2. © Laurence Kesterson/CORBIS SYGMA

The 1997 defeat of world champion chess grandmaster Garry Kasparov by IBM’s Deep Blue computer was hailed as a triumphant watershed in technological history, akin to the moon landing, because it appeared to show that computers could beat humans at something once thought to be exclusive to us: thinking.1 The symbolic AI techniques DeepBlue used are now considered passé, especially for more complicated games such as Go, believed to have been invented in China 2,500 years ago. But in 2016, world Go champion Lee Sedol was defeated by Google DeepMind’s AlphaGo AI system. This event has been called China’s “Sputnik moment” by AI researcher and venture capitalist Kai-Fu Lee,2 who argues that this specific event led China to pour billions of dollars of money into AI research to catch up with, and potentially surpass, the United States. AlphaGo’s victory illustrates the rise of the new paradigm in AI, deep learning and neural networks, at the heart of the current AI revolution.

Why have games such as chess and Go been so important in the history of AI? The pioneers of artificial intelligence, which included Herbert Simon, Alan Newell, John McCarthy and Marvin Minsky, viewed human intelligence through a tradition of Western philosophy going back to Aristotle. This masculine-gendered, Eurocentric view of intelligence—rooted in the Cartesian separation of mind from body—privileges the cerebral skills of logic, math, and problem-solving ability over bodily, emotional, social, and cultural forms of intelligence. If Reason (i.e. Logic) separated Man from Beast, then Logic must be the basis for intelligence, they thought.

Blaise Pascal was a philosopher and mathematician. In the 1640s, he invented for his father, a tax collector, a machine that could add. © Mark Richards. Collection of the Computer History Museum, B150.8.

Many Western philosophers and mathematicians, from Blaise Pascal to George Boole and Bertrand Russell aspired either to make calculation or logic, which they equated with thought itself, more mathematically rigorous (more “formal”), or to take the next step, to mechanize it. Pascal himself built a calculating machine for this purpose, and this Western impulse culminated in the invention of the digital computer in the 20th century. For the AI pioneers of the 1950s and 1960s, playing games were seen as just another way that people displayed intelligence by solving problems. If AI researchers could emulate how players did this, they could then automate the process. “Game theory,” a branch of mathematics with applications to economics and warfare both, was founded by mathematician and computer pioneer John von Neumann, and provided optimization strategies and algorithms more broadly useful to computer science. AI pioneer Herbert Simon applied such theories to both computer science and to economics (the field in which he won the Nobel Prize). Thus, the idea that games could seriously model aspects of the real world was central to early computer science. In particular, since early computers had difficulty modeling the complexities of the real world, games provided a simpler “micro-world,” with boundaries and rules easily understood by computers, that made rapid progress possible in the 1960s.

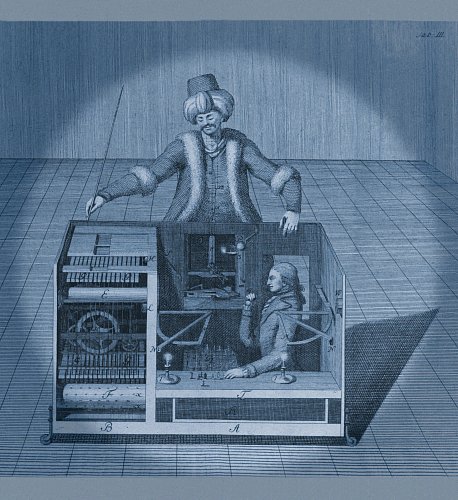

Wolfgang von Kemplen’s chess playing automaton, the Turk, which was operated by a human player hidden inside. Courtesy of the Library Company of Philadelphia.

Chess, in particular, has historically been seen in the West as the pinnacle of intellectual activity. It was an intellectual’s game, associated with logic and strategy. Think of Star Trek’s Mr. Spock, and you may picture him at his 3D chess board, outplaying his human opponents. Even in the 18th century, European elites were fascinated by the idea of machines that might play chess. Wolfgang von Kempelen became famous for his “Mechanical Turk,” an automatic chess machine built for the Austrian Empress Maria Theresa, which defeated Benjamin Franklin and Napoleon. Eventually, the Turk turned out to be fake, with a real man inside, but it nevertheless captured the imaginations of Edgar Allen Poe as well as Charles Babbage. This interest in chess as a marker of intelligence carried on in the mathematicians that helped define the theory of computing in the 20th century: Alan Turing, Claude Shannon, John von Neumann, Norbert Wiener, and of course the AI pioneers Herbert Simon, Alan Newell, and John McCarthy. For Newell and Simon, in particular, chess was an exemplary problem for AI particularly fitted to their preferred solution: search.

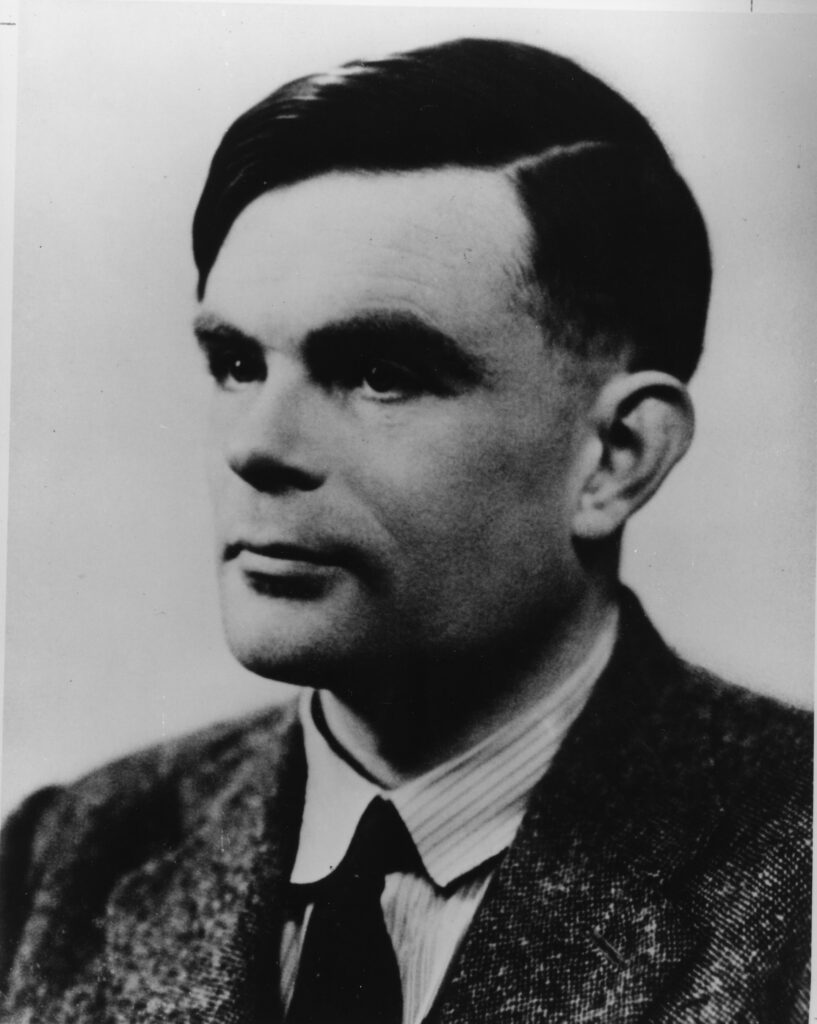

Computer pioneer Alan Turing developed the first theoretical computer chess program as an example of machine intelligence in 1947.

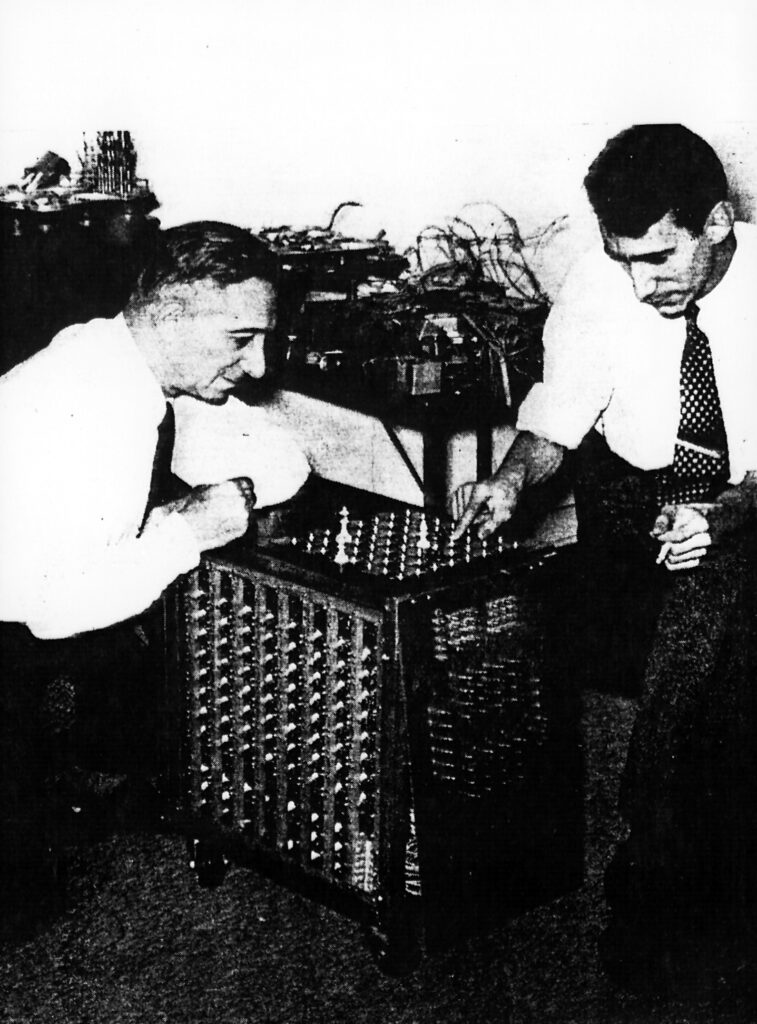

MIT and Bell Labs researcher Claude Shannon (right) developed modern information theory and published the first article on how to write computer chess programs in 1950. He also built this chess machine (pictured) at MIT using relays. Chess champion Ed Lasker at left. ca. 1950. Gift of Monty Newborn, Collection of the Computer History Museum, 102645398.

Betty and Claude Shannon at the 3rd World Computer Chess Championship in Linz, Austria. 1980. Gift of Monty Newborn, Collection of the Computer History Museum, 102645392.

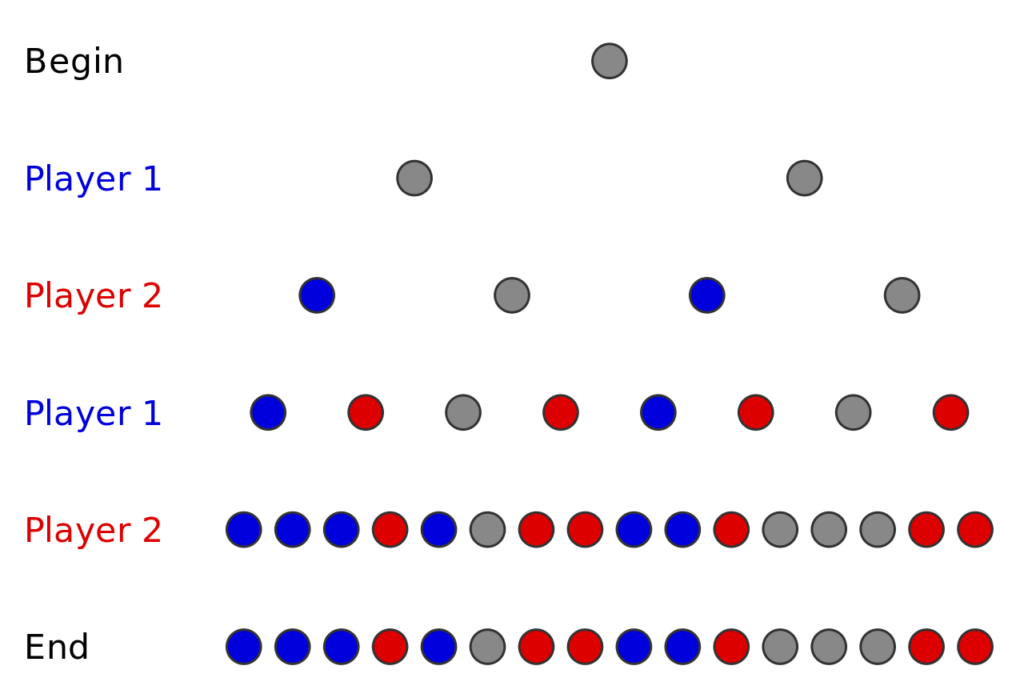

What is search, and how can it be used to play chess? By search in the AI context, I don’t mean searching for text on the web using Google (although a web search engine may use search in the AI sense.) Instead, in AI, search refers to the trial and error process of traversing possible paths to solving a problem. Search is one of the fundamental methods in classical AI, also known as “symbolic” AI because these methods involve the manipulation of lists of symbols, such as in the solving of algebra problems. All kinds of problem-solving tasks, such as proving theorems, solving puzzles, playing games, and navigating a maze, involve making choices about what to try first. These choices can be modeled as a tree of branching decisions.

Depiction of an arbitrary game tree being solved. Public domain via Wikipedia.

Branching decision tree of a tic-tac-toe game. Like chess and many other 2 player games, tic-tac-toe can be played with the minimax algorithm.

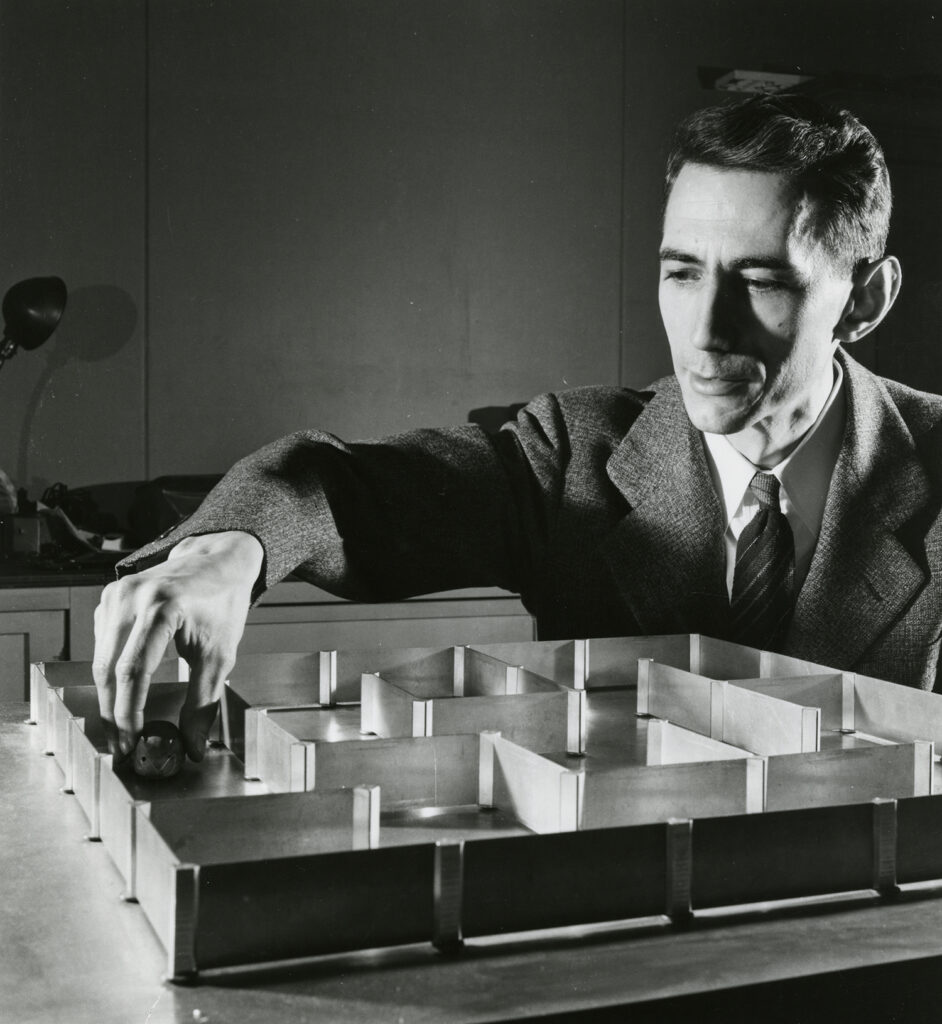

For instance, let’s say we wanted to build a robot mouse searching for the way out of a maze (something Claude Shannon did in 1950). If it arrives at a 4-way intersection, it can go right, forward, or left if it is not allowed to go backwards. This gives it 3 possible choices. Computer scientists would say that it has a “branching factor” of 3. A basic way to program a computer to solve the maze would be to try out each of the choices, or branches, in turn. This is known as “brute force” search: try every choice. However, our mouse would undoubtedly run into another intersection before it had a chance to backtrack to try the other choices in its first intersection. Each time it reaches another intersection, it can choose between another 3 paths. We could specify how many intersections deep the mouse should search before backtracking and trying out a different path.

Claude Shannon moving his electric mouse in a maze, ca. 1952. Collection of the Computer History Museum, 102630790.

This is known as the search depth or in the context of games, “look ahead.” As you can see, the number of paths that the mouse needs to search gets very big very quickly: 3, or the branching factor, multiplied by itself the number of times we look ahead in the decision tree. In other words, the problem grows exponentially. This is often called the “combinatorial explosion” problem in AI.

Similar methods can be used for chess. Every player’s turn, they have a choice of up to 38 possible legal moves, giving the chess problem a branching factor of 38. A quantitative method of evaluating the relative advantage of one chess position over another is used to choose the best move out of these 38. This is called the “evaluation function.” A typical chess game takes about 42 moves, and because there are two players, this is multiplied by two, giving roughly 3884 possible choices for the entire game, which is roughly 1034, greater than the number of stars in the universe. Very early in the history of AI, it was recognized that brute force search of chess and other problems simply would not work with the hardware of the time; there were too many possibilities to consider, and computers were too slow. Claude Shannon, who was among the first to use the “minimax” algorithm in a computer chess program (an algorithm that is still the basis of most chess programs today), noted that one could apply human knowledge and experience of the game to quickly eliminate many branches from consideration. Herbert Simon and Alan Newell proposed the use of “heuristics,” or rules of thumb that humans often use in problem solving, that work most of the time but not always. Heuristics are a form of human knowledge that can be programmed into a computer.

Chess has many more branches in its decision tree than tic-tac-toe. The number of chess games is estimated to be 10120, more than the number of atoms in the universe.

Given the enormous number of branches, chess programs can only look ahead to a finite depth in the search tree or be overwhelmed.

One such heuristic that was found to be useful in chess was known as “alpha-beta pruning.” This technique meant that if one of your moves was found to be easily countered by your opponent, other ways they could counter the same move didn’t have to be found. Further search along this path could be ignored, pruning off this entire branch of the decision tree. This could greatly reduce the branching factor, from 38 down to 6 or sometimes as low as 3. In addition, given the limits of computers at the time, most programs could only look 4 moves ahead. One of the earliest chess programs to be able to play competently against amateurs was created between 1959 and 1962 by MIT students including Alan Kotok, being advised by John McCarthy. The Kotok-McCarthy program used alpha-beta pruning.

John McCarthy playing chess at Stanford’s IBM 7090. McCarthy used an improved version of the Kotok program to play correspondence chess against a Soviet program developed at the Moscow Institute for Theoretical and Experimental Physics (ITEP) by George Adelson-Velsky and others. In 1967, a four-game match played over nine months was won 3-1 by the Soviet program. Courtesy of Stanford University.

In 1959, MIT freshmen Alan Kotok, Elwyn R. Berlekamp, Michael Lieberman, Charles Niessen, and Robert A. Wagner started working on a chess-playing program based on research by artificial intelligence pioneer professor John McCarthy. By the time they had graduated in 1962, the program could beat amateurs. Collection of the Computer History Museum, 102645440.

Newell and Simon believed that all AI problems, like chess, could be solved by search combined with heuristics, or “heuristic search.” Heuristic search was the central idea behind Newell and Simon’s early breakthroughs, the Logic Theorist and the General Problem Solver, and was a key pillar in their theory that intelligence in both humans and machines was simply the manipulation of symbols, the fundamental building blocks of both mathematics and language. This “physical symbol system” hypothesis was the assumption upon which the entire project of symbolic AI was founded, from its beginnings in the 1950s through the early 2000s. This theory, which posited that computers and human minds were thus equivalent, became extremely influential in cognitive psychology as well, and ultimately made its way into popular culture through cyberpunk science fiction, in which humans can upload their brains to the internet or have them replaced by chips.

Artificial Intelligence pioneers Allen Newell (right) and Herbert Simon, who along with Cliff Shaw, developed AI programs such as the Logic Theorist, the General Problem Solver, and their NSS chess program (which used an approximation of alpha-beta pruning), all of which ran on the JOHNNIAC at the RAND Corporation. JOHNNIAC is on exhibit at CHM. Collection of the Computer History Museum, 102657545.

As computers got faster and as computer scientists who were also experienced chess players, such as Richard Greenblatt and Hans Berliner, created their own chess programs, they found that earlier chess programs (such as Kotok’s) played exceedingly poorly, and added their own knowledge of how human players approach the game to their programs in the form of additional heuristics to better evaluate chess positions, databases of opening moves and endgames, and recognizers of board patterns. However, over time, it turned out that chess programs running on faster computers or with custom hardware could beat programs with a lot of human knowledge built in. This was because no heuristic is perfect or can cover every case. Occasionally genius moves can occur if a player tries something that looks to most people like a bad move. Most heuristics would prune out such a move before searching it, and thus the canned-knowledge-based programs would never find such a move.

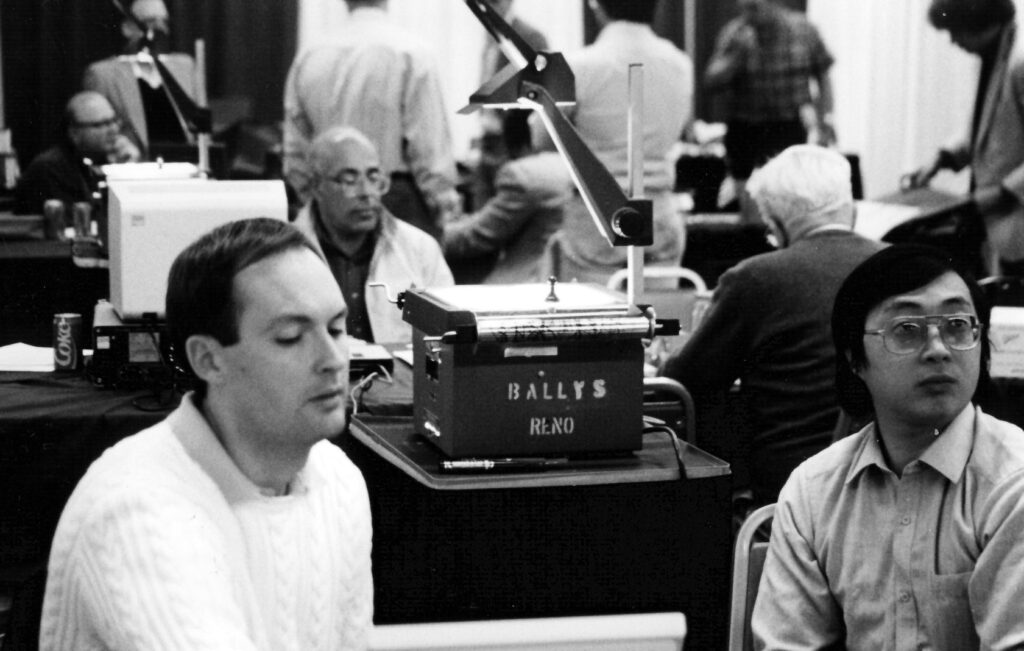

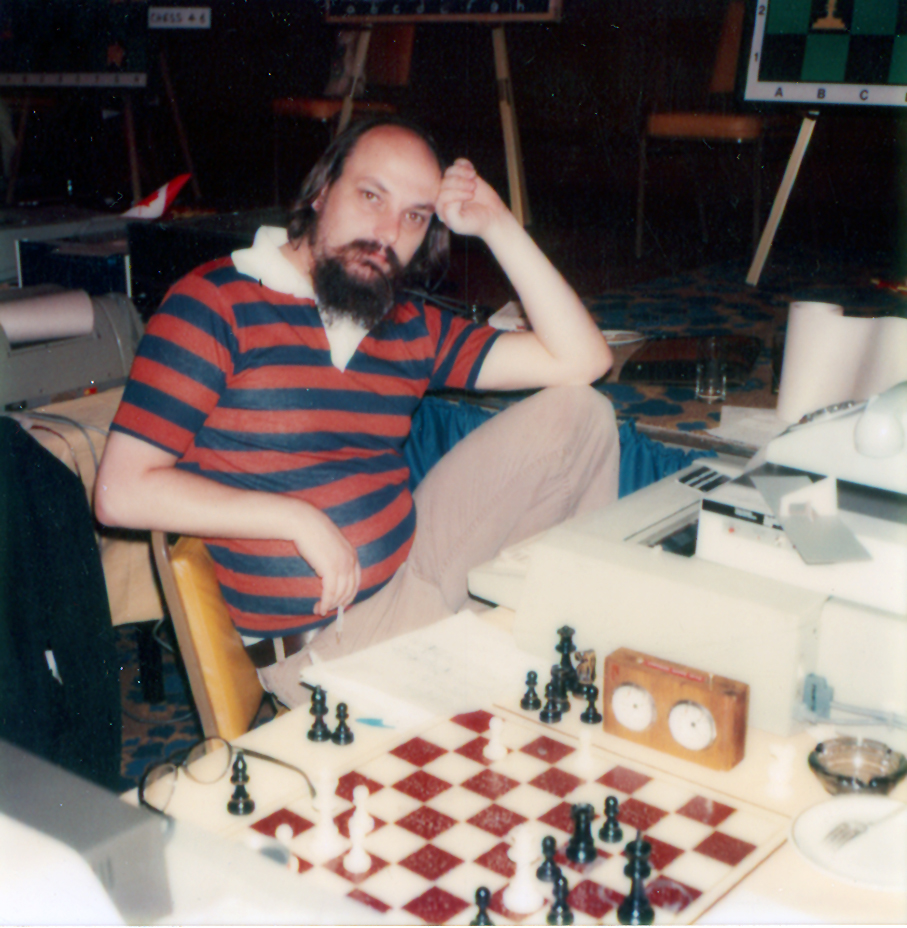

Hans Berliner (rear), Murray Campbell (front, left) and Feng-Hsiung Hsu at the 20th annual ACM Computer Chess Championship in Reno, Nevada. Two teams—HiTech (Berliner’s team) and Deep Thought (Campbell and Hsu’s team)—both from Carnegie Mellon University, tied for first place. Three members of the Deep Thought team (including Campbell and Hsu) were later hired by IBM to create Deep Blue. Collection of the Computer History Museum, 102645329.

As computers got faster, they could begin to look ahead more deeply, to 6, 7, 8 moves ahead, easily trouncing programs that only looked 4 moves ahead. A more efficient search algorithm, known as “iterative deepening search,” was discovered which could gradually increase the search depth of an avenue that looked promising. This was first used in David Slate and Larry Atkins’ Chess 4.5,3 which was the first program to win in a human chess tournament in 1976. More memory also allowed programs to save previously considered positions, further reducing the amount of search required. All of these innovations (alpha-beta pruning, iterative deepening, saving of searched positions, and databases of opening and endgames) were freely shared among chess program developers at computer chess tournaments, and became standard tricks of the trade.

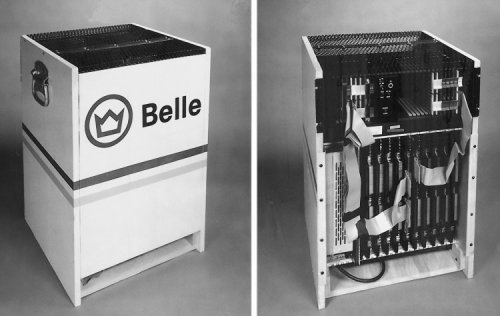

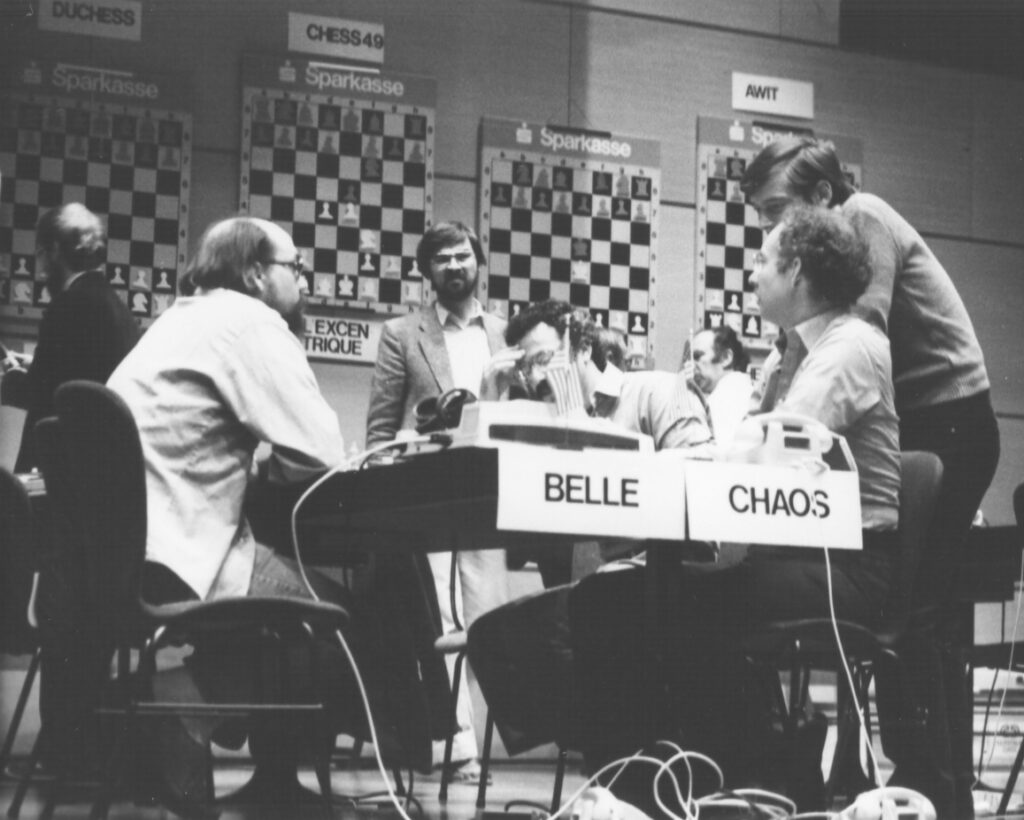

At Bell Laboratories in 1977 Ken Thompson (best known as the co-creator of the Unix operating system) and Joe Condon designed Belle—a dedicated chess-playing machine. Belle’s custom chess hardware and endgame database revolutionized computer chess.

Belle co-developer Ken Thompson at the 13th North American Computer Chess Championship. Custom chess machine Belle won the tournament, followed by Cray Blitz. From 1970 to 1994, the Association for Computing Machinery (ACM) hosted annual computer-to-computer chess tournaments to provide a forum for the exchange of ideas. Gift of Kenneth Thompson, Collection of the Computer History Museum, 102645360.

During the 1980s, Belle, developed by Ken Thompson and Joe Condon at Bell Labs, was a strong competitor at chess tournaments. Shown here are chess programs Belle and CHAOS at the 1980 WCCC in Linz, Austria, where Belle played University of Michigan’s CHAOS in a closely matched competition and won. Collection of the Computer History Museum, 102645444.

Belle faces off against Chess 4.0 at the 4th World Computer Chess Championship in New York City in 1983. Shown from left, back are: Ken Thompson, Feredric Friendel, and Joe Condon. From left, front: Chess co-developers David Slate and William Blanchard. Supercomputer-based chess program Cray Blitz won the tournament, followed by Bebe. Gift of Monty Newborn, Collection of the Computer History Museum, 102645364.

Despite these advances in software, as computers got faster in the 1970s, chess programs automatically got better without any software innovation. By the 1980s progress in computer chess was dominated by using hardware to accelerate search. It had become a computer design problem, not an AI problem. In 1997, Deep Blue was still using mostly the same software techniques as chess programs 20 years earlier, but beat Kasparov mainly by being a faster computer with many custom parallel processors. In a way, as computers got faster, the chess programs became less intelligent.

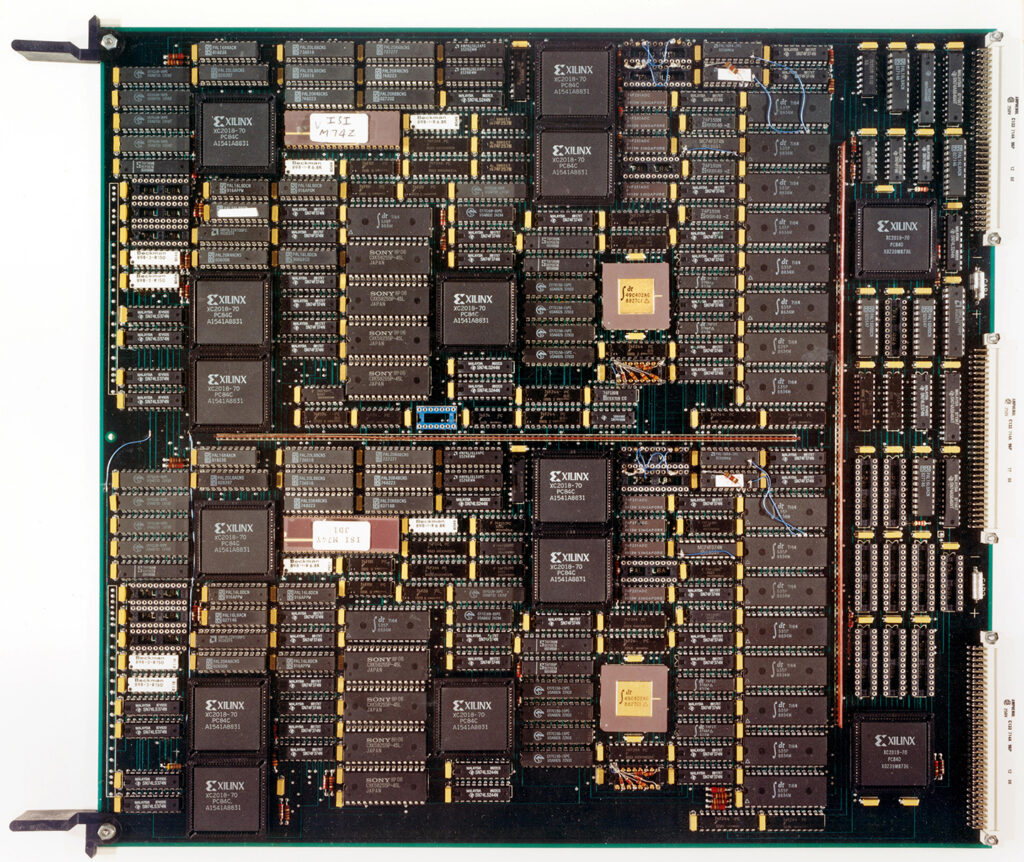

Deep Thought I Circuit Board, 1988. Custom chess machine Deep Thought, developed by CMU students, was a precursor to Deep Blue. Gift of Feng-Hsiung Hsu, Collection of the Computer History Museum, 102645419.

Deep Blue. Loan of IBM. Photo: © Douglas Fairbairn Photography.

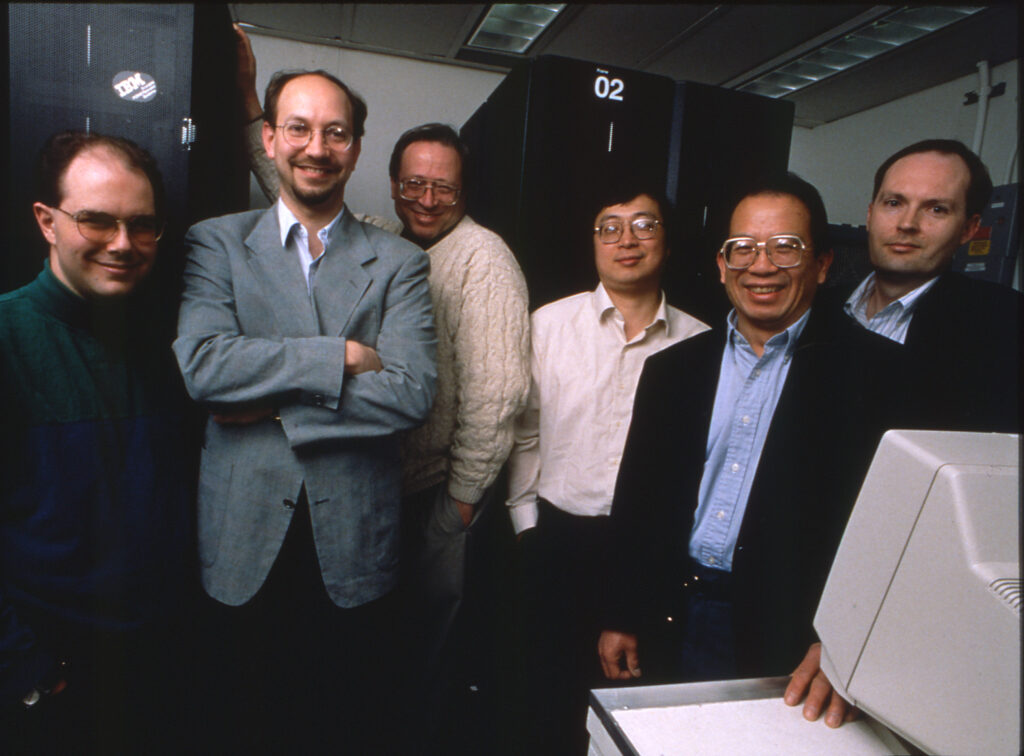

IBM Deep Blue Team (Joe Hoane, Joel Benjamin, Jerry Brody, Feng-Hsiung Hsu, C. J. Tan, and Murray Campbell). Courtesy of IBM Archive.

Garry Kasparov shakes hands with Feng-Hsiung Hsu at game 1 of 1997 Deep Blue vs. Kasparov re-match in New York City, New York. Gift of Monty Newborn, Collection of the Computer History Museum, 102645300.

Deep search as a dominant theme in AI was already in decline in the 1980s. Beginning in the 1960s, researchers such as Ed Feigenbaum of Stanford were creating so-called “expert systems,” in which a lot of expert human knowledge was put into AI programs in the form of if-then rules. Like earlier heuristic programs, these rules were pre-programmed into the software, but unlike those systems, they were separated out into “knowledge bases” from the logical parts of the program, the “inference engines.” Feigenbaum and other expert systems proponents insisted that “in the knowledge lies the power.” In other words, a large knowledge base would make up for the lack of sophisticated reasoning: more knowledge meant less search, and vice versa.

Expert systems spawned many commercial companies in the 1980s. Little of this activity affected chess programs, which at this time were turning the opposite direction: back to brute force search through the use of dedicated hardware. The leading chess machines of this type were Ken Thompson’s Belle from Bell Labs, and two separate projects from Carnegie Mellon, Hans Berliner’s HiTech and Feng-Hsiung Hsu and Murray Campbell’s Deep Thought, which later became IBM’s Deep Blue. By the time of Kasparov’s defeat, then, chess programs had already become largely irrelevant to the greater field of AI, even if they provided some good PR.

More worrisome, however, was that by the 1990s, the symbolic AI project based on Newell and Simon’s physical symbol system hypothesis was under attack. Critics, such as the philosopher Hubert Dreyfus, had questioned the symbolic AI project as early as the 1960s, on the basis that the philosophical assumption of mind-body separation that symbolic AI rested on was incorrect and out of date. 20th century philosophers such as Martin Heidegger had insisted that human thought could not be separated from the experience of the body or from one’s immediate cultural surroundings.

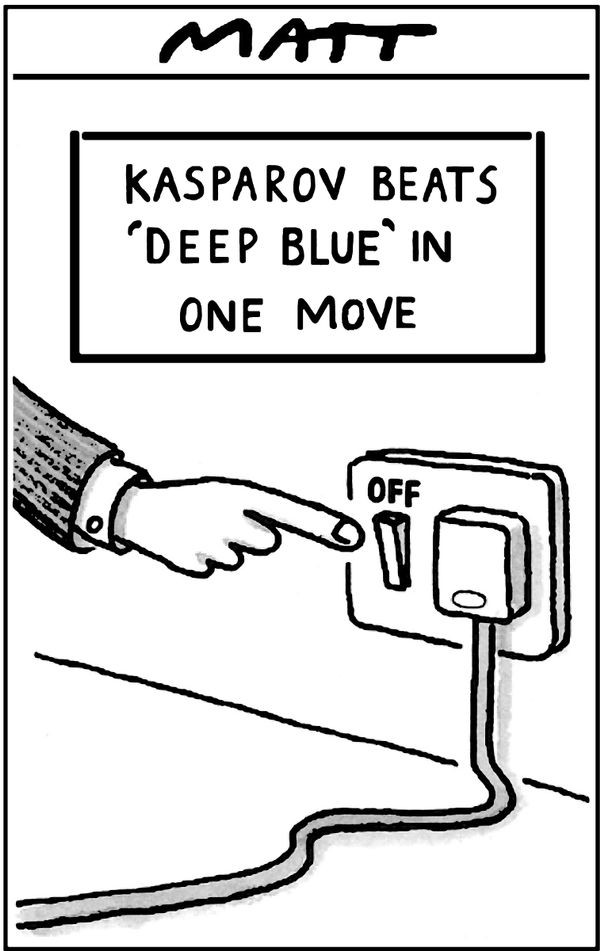

The reaction to Dreyfus’ critiques from AI researchers was savage (though Dreyfus was himself was not very diplomatic), with leading AI figures threatening journals if they published anything from him. They gloated when Dreyfus, who was not a particularly good chess player, was beaten by Richard Greenblatt’s chess program MacHack. Yet success at chess did not disprove Dreyfus’ critiques. In fact, it was the very fact that chess programs such as Deep Blue used brute force search that made it irrelevant in the larger project of creating general AI. The drama of Kasparov’s stunning defeat may have been hyped as a landmark in Machines triumphing over Man, but in reality it was a triumph of the Deep Blue engineers over one chess player. And Deep Blue’s creators did not claim the computer was intelligent. If the building was on fire, they said, Kasparov would be smart enough to run out but the machine would still sit there. And though in earlier years AI pioneer John McCarthy had thought chess a central problem of AI, after Deep Blue he criticized chess as not having developed any new theories for how human intelligence might be imitated.

The popular media portrayed the 1997 re-match between World Chess Champion Garry Kasparov and IBM’s Deep Blue custom supercomputer as a battle between man and machine. The cover of Newsweek proclaimed it “The Brain’s Last Stand.” This view exaggerated the the power of the computer and minimized the human effort behind the machine itself.

© Telegraph Group Unlimited.

By the 1990s, researchers were taking Dreyfus’ critiques seriously and imagining new forms of AI, such as those that emphasized having a body, like the robots of Rodney Brooks,4 or those that dealt with emotions. And as we shall see in Part 2, in the 2000s a completely different tradition of AI, called machine learning, would start to replace symbolic AI as the way forward. Machine learning would perform feats that symbolic AI had never been able to tackle better than humans, such as recognize faces or understand human speech. This would also apply to a game that had been infeasible to play competitively using heuristic search: Go.

Nevertheless, despite search losing its luster as the central technique of AI, it has never lost its usefulness in computer science more broadly. There has been much progress in innovating better search algorithms over the years to solve problems optimally and efficiently. Being such a fundamental technique, the creation of decision trees and searching through them is so widespread that it is likely impossible to get an accurate accounting of all the programs that use it today.

Search plays some role in any information retrieval task, from querying a database to searching the Web. The A* search algorithm, which was first invented for SRI’s robot Shakey, is commonly used for route-finding in autonomous vehicles and GPS apps. And even today, game playing AI programs that use machine learning utilize some form of search, even if it is no longer the most interesting component. However, like other techniques that used to be considered “AI,” today search may be seen merely as just another basic computer technique, no more intelligent than the next program. This illustrates a historical pattern in the development of AI: once it becomes commonplace and automatic, humans no longer consider it “intelligence.” In years past, when one said “AI,” search was probably involved. When a person says “AI” today, however, they usually mean symbolic AI’s successor, machine learning.

1. Nathan Ensmenger, “Is Chess the Drosophila of Artificial Intelligence? A Social History of an Algorithm,” Social Studies of Science 42, no. 1 (February 2012): 22, https://doi.org/10.1177/0306312711424596.

2. Kai-Fu Lee, AI Superpowers: China, Silicon Valley, and the New World Order. (Boston; New York: Houghton Mifflin Harcourt, 2019), 1–5.

3. Stuart J. Russell and Peter Norvig, Artificial Intelligence: A Modern Approach, 3rd ed., Prentice Hall Series in Artificial Intelligence (Upper Saddle River, NJ: Pentice Hall, 2010), 110.

4. Rodney A. Brooks, “Elephants Don’t Play Chess,” Robotics and Autonomous Systems, Designing Autonomous Agents, 6, no. 1 (June 1, 1990): 3–15, https://doi.org/10.1016/S0921-8890(05)80025-9.

![]()