Stephen Hawking, 1942–2018. Image: howitworksdaily.com.

On March 14, 2018, the world lost one of the greatest theoretical physicists who ever lived. Stephen Hawking profoundly shaped our understanding of how the universe works, and he had the rare talent to bring these theories to the masses. Without Hawking, it’s doubtful that so many people would be aware of concepts such as black holes, cosmic inflation, or quantum dynamics. In addition to his far-reaching research, he was a popular science writer and perhaps the most widely recognized public face of science in the period between the Sagan and deGrasse Tyson eras. His book A Brief History of Time was a smash hit and one of the most widely read science books ever written. He was also one of the most decorated scientists of his time. He was elected a Fellow of the Royal Society in 1974, and in 1979 he became Lucasian Professor of Mathematics at Cambridge, a chair once held by Sir Isaac Newton and Charles Babbage. He was made a Commander of the Order of the British Empire in 1982, and in 2009 President Obama awarded him the US Presidential Medal of Freedom. On top of all that, he appeared as himself in episodes of Star Trek: The Next Generation and The Big Bang Theory, and as a character on The Simpsons.

Hawking had amyotrophic lateral sclerosis, also known as ALS or Lou Gehrig’s disease. The disease gradually paralyzed him and left him dependent on a wheelchair for nearly five decades. In 1985, a bout of pneumonia left him unable to speak on his own. For such a public figure, this was a significant obstacle, and it led Hawking to a long-lasting and now widely recognized solution: voice synthesis.

Research into voice synthesis actually predates electronic computing. The legend of the “brazen head,” a magical or mechanical bust that could speak simple words, dates back to at least the 11th century, and Wolfgang von Kempelen began building a bellows-driven speaking machine as far back as 1769. Throughout the 19th century, inventors tried to mimic speech with a variety of mechanisms built from bellows and percussive elements. Electronic speech synthesis first became possible through Bell Labs’ work on the Vocoder. Building on the Vocoder concept he had begun developing in 1928, Homer Dudley created the Voder, a keyboard-operated speech synthesizer that he demonstrated at the 1939 World’s Fair in New York.

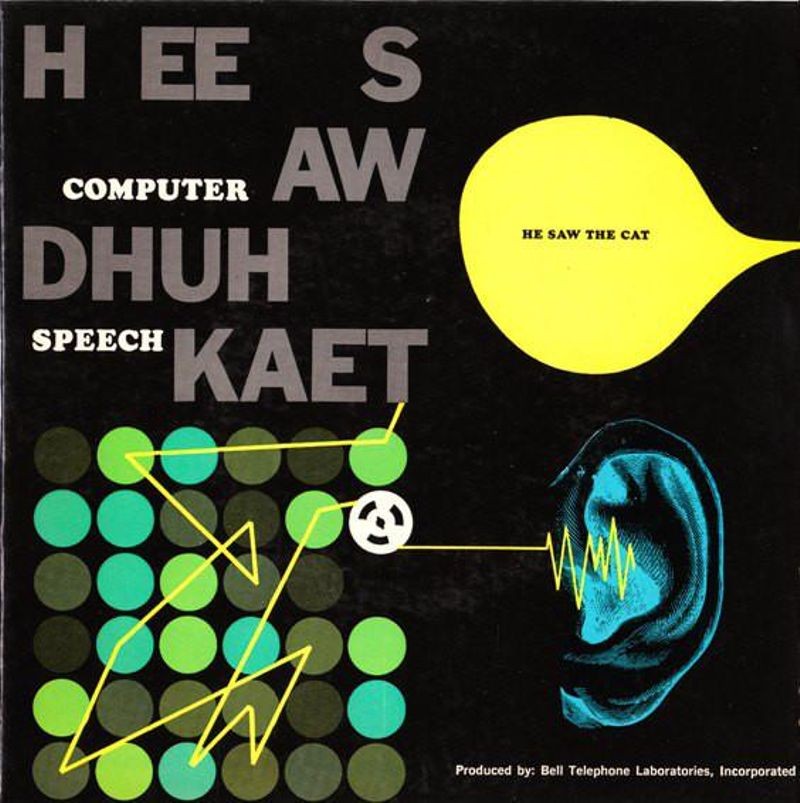

He Saw the Cat album cover

Throughout the 1950s and 1960s, Bell Labs remained a leading institution for research into computer speech. In 1961, Max Mathews and his colleagues had a computer sing “Daisy Bell,” a demonstration that later inspired the scene in which HAL 9000 sings the same song in Stanley Kubrick’s 2001: A Space Odyssey. They even released a record of much of their speech synthesis work called He Saw the Cat. By the late 1970s, miniaturization and the microprocessor had made smaller, portable speech synthesis systems possible. Ray Kurzweil developed the Kurzweil Reading Machine, which became popular with organizations serving the blind, though its high cost and technical demands kept it from wide adoption by individuals.

At MIT, senior researcher Dennis Klatt had been working on speech synthesis techniques since the mid-1960s. A student of the history of artificial speech, he collected many recordings of early systems while developing his own. His research focused on creating synthesized voices that sounded more natural than those produced at Bell Labs and other institutions. Earlier voices had been intelligible, but they sounded “electronic,” and attempts to synthesize a female voice in particular had never yielded strong results. Klatt spent a great deal of time studying not only how the body produces speech but also how listeners perceive it. This distinction matters: it is not enough for a synthesizer to produce the right words; the listener must also be able to take in those sounds naturally for communication to be effective.

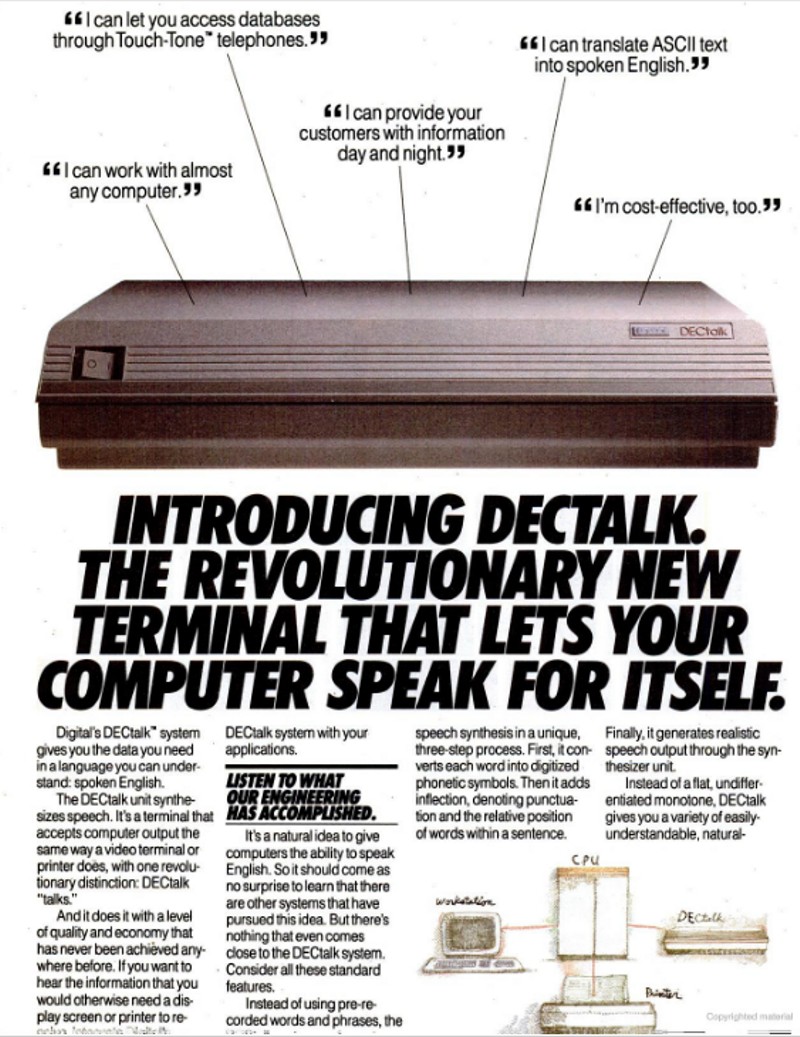

DECtalk advertisement, 1984

Klatt developed a source-filter synthesis algorithm, which he called both KlattTalk and MITalk (pronounced “My-Talk”). It was then licensed to Digital Equipment Corporation (DEC), which released it as DECtalk. Early DECtalk marketing targeted corporations, but even the first ads noted its potential for people with vision or speech impairments: “It can give a vision-impaired person an effective, economical way to work with computers. And it can give a speech-impaired person a way to verbalize his or her thoughts in person or over the phone.” Groups such as the National Weather Service and the National Institute for the Blind, as well as several phone companies, began using DECtalk in various applications. DECtalk could produce eight different voices, with “Perfect Paul,” based on Klatt’s own voice, as the default for the initial DECtalk terminals.
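To make the source-filter idea concrete, here is a minimal sketch: a periodic source (standing in for the vocal cords) is passed through a cascade of second-order digital resonators, one per formant (standing in for the vocal tract). The resonator coefficients follow the form Klatt published in 1980 for his formant synthesizer, but everything else is a simplifying assumption: the function names are hypothetical, a bare impulse train replaces a realistic glottal pulse, the 10 kHz sample rate is chosen for illustration, and the formant frequencies and bandwidths for an /a/-like vowel are approximate textbook values, not DECtalk or CallText parameters.

```python
import math
import struct
import wave


def resonator(signal, freq_hz, bw_hz, fs):
    """Second-order digital resonator modeling one formant.

    Computes y[n] = A*x[n] + B*y[n-1] + C*y[n-2], with coefficients in the
    form Klatt (1980) gives for formant resonators.
    """
    T = 1.0 / fs
    C = -math.exp(-2.0 * math.pi * bw_hz * T)
    B = 2.0 * math.exp(-math.pi * bw_hz * T) * math.cos(2.0 * math.pi * freq_hz * T)
    A = 1.0 - B - C  # normalizes the gain at 0 Hz to 1
    y1 = y2 = 0.0
    out = []
    for x in signal:
        y = A * x + B * y1 + C * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def impulse_train(f0_hz, duration_s, fs):
    """Crude voiced source: one unit impulse per pitch period (an assumption;
    real synthesizers use a shaped glottal pulse)."""
    n = int(duration_s * fs)
    period = max(1, round(fs / f0_hz))
    return [1.0 if i % period == 0 else 0.0 for i in range(n)]


def synthesize_vowel(f0_hz, formants, duration_s=0.5, fs=10000):
    """Source-filter synthesis: run the source through formant resonators in cascade."""
    signal = impulse_train(f0_hz, duration_s, fs)
    for freq_hz, bw_hz in formants:
        signal = resonator(signal, freq_hz, bw_hz, fs)
    peak = max(abs(s) for s in signal) or 1.0
    return [s / peak for s in signal]  # scale into the range [-1, 1]


def write_wav(path, samples, fs):
    """Save the samples as mono 16-bit PCM so the result can be played back."""
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(fs)
        w.writeframes(b"".join(struct.pack("<h", int(s * 32767)) for s in samples))


# Approximate (frequency Hz, bandwidth Hz) pairs for an /a/-like vowel.
# Illustrative values only, not taken from any DECtalk or CallText voice.
VOWEL_A = [(730, 90), (1090, 110), (2440, 170)]
```

Calling `write_wav("vowel_a.wav", synthesize_vowel(120, VOWEL_A), 10000)` should produce half a second of a buzzy, vowel-like tone at a 120 Hz pitch. Chaining resonators like this is only the core of a formant synthesizer; Klatt’s design also included a shaped voicing source, a noise source for consonants, a parallel resonator branch, and rules for moving the formants over time, none of which this sketch attempts.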

By the late 1970s, ALS had taken much of Hawking’s ability to speak clearly, and a tracheotomy performed during treatment for pneumonia in 1985 left him unable to speak at all. At first, Hawking and his team relied on a partner-assisted method: he would pick out letters on a spelling card by raising his eyebrows. This was slow and error-prone, and impractical given the complex subjects Hawking regularly discussed.

In 1986, Hawking was given a software package called the “Equalizer,” which Walter Woltosz had developed to help his mother-in-law, who had ALS, communicate. The initial version of the system let Hawking use his thumbs to enter simple commands, either selecting words from a database of roughly 3,000 words and phrases or selecting letters to spell words not in the database.

At first, the system ran on a desktop Apple II computer; later, the husband of one of his caregivers found a way to mount the computer on his wheelchair. “I can communicate better now than before I lost my voice,” Hawking said.

Voice synthesis was handled by a Speech Plus CallText 5010 text-to-speech synthesizer built into the back of Hawking’s wheelchair. The CallText voice was based on Klatt’s MITalk, just like DECtalk, but it offered only a single voice, which Speech Plus had modified and improved over a 10-year span. Hawking liked its crisp, American-accented, clear, and well-paced delivery, and he kept it even as other voices and synthesis options became available.

Stephen Hawking interacting with the ACAT system. Image: itpro.co.uk.

Over the years, Hawking began to lose control of his thumbs, which made the system developed for him in the 1980s increasingly difficult, and eventually impossible, to use. In 1997, he turned to Intel for help with his wheelchair and voice control system, and the company supported his computing needs from then on. The system Intel eventually built for him was ACAT, the Assistive Context-Aware Toolkit. To let Hawking speak, ACAT used a tablet PC connected to the chair and an infrared sensor mounted on his glasses. The sensor detected minute movements of a cheek muscle, allowing him to select words, letters, and phrases with the help of a predictive text method developed by the company SwiftKey. The system allowed Hawking to continue his work and even make Skype calls. Periodic upgrades to the chair helped compensate for his worsening muscle control, but one thing stayed the same: his voice.
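The interaction described above, where one small movement picks from a short list of predicted words, can be sketched in a few lines. This is a hypothetical illustration only: SwiftKey’s actual prediction models are far more sophisticated and are not described in this article, and the class and function names here are invented. The predictor ranks previously seen words that start with the typed prefix by how often they occurred, and the scanner models a highlight that steps through the suggestions until a switch press selects one.

```python
from collections import Counter


class WordPredictor:
    """Toy frequency-ranked prefix completion (hypothetical; not SwiftKey's model)."""

    def __init__(self, corpus_text):
        # Count how often each word appears in the user's past text.
        self.counts = Counter(corpus_text.lower().split())

    def suggest(self, prefix, k=3):
        """Return up to k known words starting with prefix, most frequent first."""
        prefix = prefix.lower()
        matches = [w for w in self.counts if w.startswith(prefix)]
        matches.sort(key=lambda w: (-self.counts[w], w))  # ties broken alphabetically
        return matches[:k]


def scan_select(options, switch_tick):
    """Single-switch scanning (hypothetical): the highlight advances one option
    per tick and wraps around; a switch press, such as a cheek movement, picks
    whatever is highlighted at that tick."""
    if not options:
        return None
    return options[switch_tick % len(options)]
```

With a tiny corpus such as `"the universe the black hole the big bang black holes radiate"`, `suggest("b")` returns `["black", "bang", "big"]`, and a switch press on the fifth tick (`switch_tick=4`) selects `"bang"`. Each correct prediction spares the user from scanning through individual letters, which is the point of prediction for someone whose every selection takes effort.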

For the rest of his life, Hawking’s preferred voice remained the one produced by the CallText 5010. By 2014, his original CallText 5010 synthesizers needed to be replaced. New techniques and voices were tried, but Hawking vetoed them for a simple reason: he liked the way the CallText sounded. Speech Plus had been out of business for almost two decades, so Hawking’s assistants contacted Eric Dorsey, who had worked on the original CallText system, about resurrecting it. When reviving the original proved too difficult, the team instead built a software emulation of the CallText 5010 running on a Raspberry Pi computer. The voice may have sounded slightly different because of the speakers and other hardware used to reproduce the synthesized sounds, but it was still the CallText voice, Dennis Klatt’s synthesized voice, that brought sound back to Stephen Hawking’s genius.