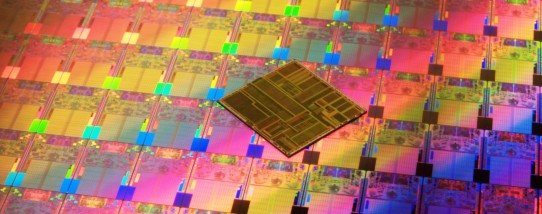

Intel Pentium microprocessor die and wafer

The microprocessor is hailed as one of the most significant engineering milestones of all time. The lack of a generally agreed definition of the term has supported many claims to be the inventor of the microprocessor. The original use of the word “microprocessor” described a computer that employed a microprogrammed architecture, a technique first described by Maurice Wilkes in 1951. In 1968, Viatron Computer Systems used the term microprocessor to describe its compact System 21 machines for small business use. Its modern usage is an abbreviation of micro-processing unit (MPU), the silicon device in a computer that performs all the essential logical operations of a computing system. Popular media stories call it a “computer-on-a-chip.” This article describes a chronology of early approaches to integrating the primary building blocks of a computer onto fewer and fewer microelectronic chips, culminating in the concept of the microprocessor.

A general-purpose computer is made up of three basic functional blocks: the central processing unit (CPU), which combines arithmetic and control logic functions; a storage unit for storing programs and data; and I/O units (UART, DMA controller, timer/counters, device controllers, etc.) that interface to external devices such as monitors, printers, and modems. The first transistorized computer, the Manchester University TC, appeared in 1953. In a 1964 IEEE paper, Ed Sack and other Westinghouse engineers described an early attempt to integrate multiple transistors on a silicon chip to fulfill the major functions of a CPU. They stated that “Techniques have been developed for the interconnection of a large number of gates on an integrated-circuit wafer to achieve multiple-bit logic functions on a single slice of silicon. . . . Ultimately, the achievement of a significant portion of a computer arithmetic function on a single wafer appears entirely feasible.”1

At that time, most small computer systems were built from many standard integrated circuit (IC) logic chips, such as the Texas Instruments SN7400 family, mounted on several printed circuit boards (PCBs). As these ICs increased in density, as forecast by Moore’s Law, from a few logic gates per chip (SSI, small scale integration) to tens of gates per chip (MSI, medium scale integration), fewer boards were required to build a computer. Minicomputer manufacturer Data General reduced the CPU of its Nova 16-bit minicomputer down to a single PCB by the end of the decade.

A major step in meeting the Westinghouse engineers’ goal of putting a CPU on a single chip took place as IC manufacturing processes transitioned from bipolar to metal oxide semiconductor (MOS) technology. By the mid-1960s, MOS enabled large scale integration (LSI) chips that could accommodate hundreds of logic gates. Designers of consumer digital products where small size was an advantage, such as calculators and watches, developed custom LSI chips. In 1965, calculator manufacturer Victor Comptometer contracted with General Microelectronics Inc. (GMe) to design 23 custom ICs for its first MOS-based electronic calculator. By 1969, Rockwell Microelectronics had reduced the chip count to four devices for Sharp’s first portable machine. Mostek and TI introduced single-chip solutions in 1971.

By the early 1970s, small LSI-based computer systems emerged and were called microcomputers. Developers of these machines pursued the same techniques used by calculator designers to reduce the number of chips required to make up a CPU by creating more highly integrated LSI ICs. These were known as microcomputer chipsets: by using all of the LSI chips together, a computer system could be built. Each new step along the curve of Moore’s Law reduced the number of chips required to implement the CPU, eventually leading to the single-chip product we know today as the microprocessor.

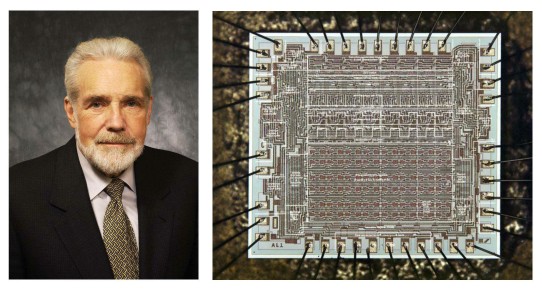

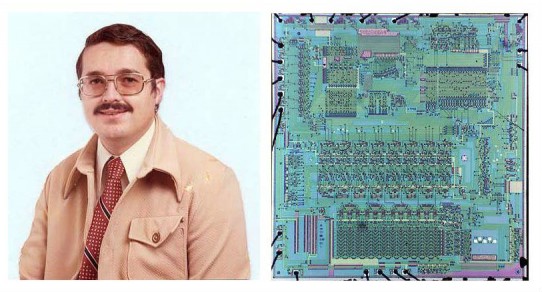

Fairchild Semiconductor began the development of standardized MOS computer system building blocks in 1966. The 3804, its first complete CPU processor bit slice, featured instruction decoding, a parallel four-bit ALU, registers, full condition code generation, and the first use of an I/O bus. Designer Lee Boysel noted that it would not be considered a microprocessor because it lacked internal multi-state sequencing capability, but it was an important milestone in establishing the architectural characteristics of future microprocessors.2 In 1968, while still at Fairchild, Boysel began work on the AL1, an 8-bit CPU bit slice for use in low-cost computer terminals. After founding Four-Phase Systems Inc., he completed the design and demonstrated working chips in April 1969. A single-terminal configuration employed one AL1 device; a multi-terminal server used three.

Lee Boysel and the AL1 chip

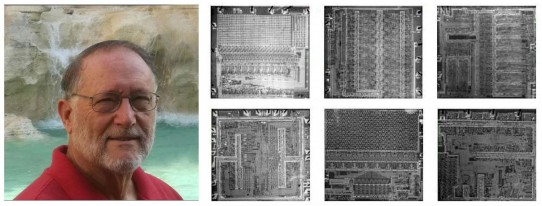

After Boysel left the company, Fairchild Semiconductor continued to invest in this area with the PPS-25 (Programmed Processor System), a set of 4-bit programmable chips introduced in 1971. Considered a “microprocessor” by some users,3 it found favor in scientific applications.

Beginning in 1968, Steve Geller and Ray Holt, working for Garrett AiResearch Corp. under contract from Grumman Aircraft, designed a highly integrated microcomputer chip set, designated MP944, for the Central Air Data Computer (CADC) in the US Navy F-14A “Tomcat” fighter. The processor unit comprised several arithmetic and control chips: the CPU, PMD (parallel divider), PMU (parallel multiplier), and SLU (steering logic unit). American Microsystems Inc. manufactured the first complete chip set in 1970.4

Ray Holt and the MP944 chip set

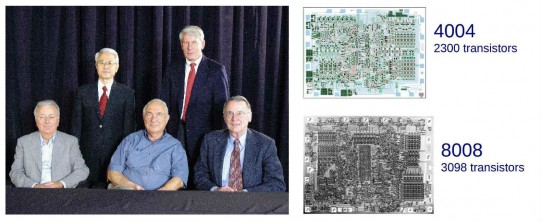

Intel’s first effort in this product category, the MCS-4 Micro Computer Set, was conceived as the most efficient way of producing a set of calculator chips. It was created in January 1971 for Japanese calculator manufacturer Busicom by a team of logic architects and silicon engineers: Federico Faggin, Marcian (Ted) Hoff, Stanley Mazor, and Masatoshi Shima. The centerpiece of the four-chip set was the 4004, initially described as a 4-bit microprogrammable CPU. A later data sheet renamed it a “single chip 4-bit microprocessor.”

Intel MCS-4 and MCS-8 design team and CPU chips

Using a new silicon-gate MOS technology, Faggin and Shima were able to squeeze 2,300 transistors onto the 4004 CPU device, one of the densest chips fabricated to date. This level of complexity incorporated more of the essential logical elements of a processor onto a single chip than prior solutions. These included a program counter, instruction decode/control logic, the ALU, data registers, and the data path between those elements. In addition to calculators, the programmable features of the 4004 enabled applications in peripherals, terminals, process controllers and test and measuring systems. While sales of the chip set were modest, the project launched Intel into a profitable new business opportunity.

The origin of Intel’s next processor, the 8008, is murky. In an interview with Robert Schaller, Vic Poor, vice president of R&D at Computer Terminal Corporation (CTC), contends that he approached Intel in 1969 with the idea of developing a single-chip CPU for the Datapoint 2200 Programmable Terminal. Schaller also reports Stan Mazor’s claim that Poor actually requested a special-purpose memory stack and Intel responded with a proposal to integrate the entire CPU. In a 2004 Computer History Museum oral history, Poor changed his position and corroborated Mazor’s version.5

Whatever the facts, Intel signed a contract with CTC in early 1970 to develop an 8-bit processor. The design was begun by Hal Feeney and completed, after extensive delays due to other priorities, by Federico Faggin. Working units of the 8008 were delivered to the customer in late 1971, followed by a public announcement in February 1972. Texas Instruments initially responded to the CTC application with a three-chip solution. Presented with an early copy of the Intel specification, TI aborted the original approach and assigned engineer Gary Boone to design a competing single-chip 8-bit processor. Designated the TMX1795, its first units were delivered to CTC in mid-1971, several months before Intel’s 8008. Because the original specification contained an error in one of the instructions, later corrected by Intel, the devices were non-functional in the application.

Gary Boone and Texas Instruments TMX1795 chip

Although CTC ultimately chose not to use either version of the processor, the experience paid off handsomely for both companies. For Intel, the 8008 led to the highly successful 8080 processor and ultimately the entire x86 family of microprocessors, the most successful in history. Boone’s design concepts, applied to single-chip calculators and microcontrollers, made TI a leader in those markets for many years.

Presented with this convoluted history, how should historians assign credit for the invention of the microprocessor? Ultimately it depends on the definition of the word as expressed by different experts in the field. According to technology analyst Nick Tredennick, “I have looked at [Boysel’s] AL1 design from the papers written about it through the circuit diagrams and discussions with Lee Boysel and I believe it to be the first microprocessor in a commercial system.” In a CHM video recorded in 2010, Gordon Bell supported this opinion. Notably, Boysel makes no such claim.

In 1973, Intel marketing manager Hank Smith described a microcomputer as “a general-purpose computer composed of standard LSI components that are built around a CPU. The CPU (or microprocessor) . . . is contained on a single chip or a small number of chips.” Ray Holt argues that, based on Smith’s comment, his MP944 custom chip set should qualify as the first microprocessor. Opinions for (Russel Fish) and against (David Patterson) are published on Holt’s web site. Smith’s multi-chip definition was quickly supplanted by the popular usage emerging in the technical press, which required most or all of the elements of a processor, as embodied in the Intel 4004, to be integrated onto a single chip. While Intel is happy to accept this version, recent company literature avoids the controversy by referring to the device as “Intel’s first microprocessor.” Observers such as Ken Shirriff,6 who contend that the 4004 was not a general-purpose computer but the forerunner of today’s ubiquitous microcontrollers, point to the 1795/8008 8-bit architectures as representing the first true microprocessors.

Who won this particular race, first to silicon or first to meet the customer specification, is in the eye of the beholder.

The microcontroller adds functionality to a basic processor core to make a more efficient solution for a specific application, from automobile engine controllers to toys and appliances. A microcontroller combines one or more processors with memory and input/output peripheral devices on a single chip. It can be tailored for low cost, low power, and/or small size for portable battery-operated devices. This segment of the market has its own cast of inventors and claimants. One of the best documented is an interference case brought by Texas Instruments against a patent granted to Gilbert Hyatt in 1990 for work beginning in 1968. The patent, describing a Single Chip Integrated Circuit Computer Architecture, was eventually invalidated because it was never implemented and was not implementable with the technology available at the time of the invention, but not before Hyatt had collected millions in royalties.

Most historians who follow the tangled threads underlying the origins of the microprocessor conclude that it was simply an idea whose time had come. Throughout the 1960s, numerous semiconductor manufacturers contributed to an ever-increasing number of transistors that could be integrated onto a single silicon chip. Correspondingly, many government-funded and commercial computer designers were seeking to reduce the number of chips in a system. The eventual implementation of the major functions of a CPU on a chip was both inevitable and obvious.